Has LA risen again today? My guess is it will reach 0.4 in a few days. 🤔

Many ideas get stuck on two problems: on-chain computation is too limited, and cross-chain trust is lacking. Lagrange's answer is straightforward: offload heavy computation to an off-chain ZK Coprocessor, which returns the result together with a zero-knowledge proof, so the chain only has to run a quick verification. Think of it as adding a 'computing graphics card' to smart contracts. The contract no longer carries the burden of large computations, yet there is verifiable evidence on-chain that the results are correct. Version 1.0 is already live and focuses on a very 'down-to-earth' capability: proving the results of custom SQL queries so that contracts can use them (for example, aggregations across many blocks and storage slots), which lets processed 'big data' be brought back on-chain safely.
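To make the 'prove first, then use' flow concrete, here is a minimal Python sketch under loudly stated assumptions. It is not Lagrange's API or proof system: a plain Merkle inclusion proof over a toy storage map stands in for the ZK proof, and the names `coprocessor_sum` and `contract_accept` are hypothetical. Unlike a real ZK proof, this stand-in is neither zero-knowledge nor succinct (the verifier still touches every queried value, whereas a ZK proof keeps verification cheap regardless of input size). What it does show is the shape of the interface: the chain holds only a committed root and accepts an off-chain result only if the accompanying evidence checks out against that root.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def leaf(slot: int, value: int) -> bytes:
    # Bind each value to its slot so a prover cannot swap values between slots.
    return h(b"\x00" + slot.to_bytes(32, "big") + value.to_bytes(32, "big"))

def node(left: bytes, right: bytes) -> bytes:
    return h(b"\x01" + left + right)

def build_levels(leaves):
    # Level 0 holds the leaves; the last level holds only the root.
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur = cur + [cur[-1]]  # duplicate the last node on odd-sized levels
        levels.append([node(cur[i], cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels

def state_root(storage):
    # Toy "state commitment": Merkle root over (slot, value) pairs sorted by slot.
    return build_levels([leaf(s, storage[s]) for s in sorted(storage)])[-1][0]

def inclusion_path(levels, index):
    # Sibling hashes from the leaf up to (but excluding) the root.
    path = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        path.append(level[index ^ 1])
        index //= 2
    return path

def verify_inclusion(root, slot, value, index, path):
    acc = leaf(slot, value)
    for sibling in path:
        acc = node(acc, sibling) if index % 2 == 0 else node(sibling, acc)
        index //= 2
    return acc == root

def coprocessor_sum(storage, slots):
    # Off-chain (hypothetical): run the query, a SUM over selected slots, and attach evidence.
    ordered = sorted(storage)
    levels = build_levels([leaf(s, storage[s]) for s in ordered])
    proofs = []
    for s in slots:
        i = ordered.index(s)
        proofs.append((s, storage[s], i, inclusion_path(levels, i)))
    return sum(storage[s] for s in slots), proofs

def contract_accept(root, slots, claimed_sum, proofs):
    # "On-chain" (simulated): knows only the committed root, never the full storage.
    if [p[0] for p in proofs] != list(slots):
        return False
    if not all(verify_inclusion(root, s, v, i, path) for s, v, i, path in proofs):
        return False
    return sum(v for _, v, _, _ in proofs) == claimed_sum

if __name__ == "__main__":
    storage = {slot: slot * 10 for slot in range(8)}
    root = state_root(storage)
    total, proofs = coprocessor_sum(storage, [1, 3, 6])
    print(total, contract_accept(root, [1, 3, 6], total, proofs))  # 100 True
```

Any tampering with a value or the claimed sum makes `contract_accept` return `False`, which is the property that lets a contract consume results it did not compute itself.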

This coprocessor does not operate in isolation. Lagrange has connected it to EigenLayer, where it runs as an AVS (Actively Validated Service): a decentralized network of operators, combined with economic constraints, keeps the service live and the execution honest. Put simply, the question of 'who checks that off-chain computation is correct' is handed to a network of nodes that post collateral and face penalties. This is especially important for cross-chain messaging and cross-chain reads and writes.

On the cross-chain side, Lagrange has a frequently mentioned component: State Committees. It is essentially a ZK light client protocol for optimistic rollups: a group of independent nodes, together with ZK coprocessors, provides proofs of the finality and state of target-chain blocks that cross-chain bridges, messaging layers, and even applications can reference directly. In effect, cross-chain calls get an 'audited reconciliation statement', which reduces extra trust assumptions and makes latency and costs more predictable.
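The acceptance rule at the heart of a committee-based light client can be sketched as a stake-weighted threshold check. This is a hedged illustration, not Lagrange's protocol: the two-thirds threshold is a common BFT choice assumed here, the member names are hypothetical, and signature verification is assumed to have already happened (in the real design, a ZK proof would attest to the committee's signatures so the destination chain checks one proof instead of many signatures).

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass(frozen=True)
class Attestation:
    member: str      # committee member id (hypothetical)
    block_hash: str  # header the member claims is final on the source chain

def accept_header(committee: dict[str, int], attestations: list[Attestation], block_hash: str) -> bool:
    """Accept a source-chain header only if more than 2/3 of committee stake attested to it.

    Assumes signatures were already verified; this function only counts stake.
    """
    total_stake = sum(committee.values())
    # A set drops duplicate votes; non-members and votes for other hashes are ignored.
    signers = {a.member for a in attestations if a.member in committee and a.block_hash == block_hash}
    attested_stake = sum(committee[m] for m in signers)
    # Integer comparison avoids floating-point rounding at the 2/3 boundary.
    return 3 * attested_stake > 2 * total_stake

if __name__ == "__main__":
    committee = {"node-a": 40, "node-b": 30, "node-c": 20, "node-d": 10}
    votes = [Attestation("node-a", "0xabc"), Attestation("node-b", "0xabc"), Attestation("node-c", "0xdef")]
    print(accept_header(committee, votes, "0xabc"))  # True: 70 of 100 stake attested
```

Requiring independent, staked members to agree is what replaces "trust the bridge operator" with an explicit, checkable assumption about the committee.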

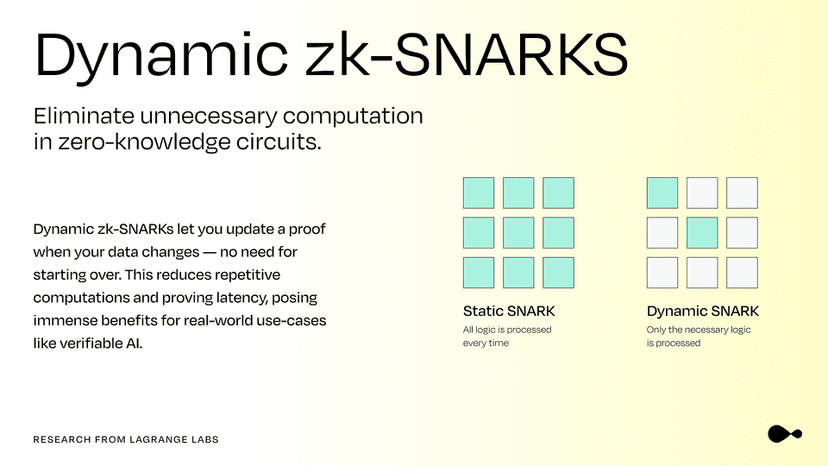

Lagrange's approach to 'large-scale data' is not brute force either. They have made the classic MapReduce paradigm ZK-enabled: ZK MapReduce (ZKMR) first splits the computation into shards that run in parallel, each producing a sub-proof, and then recursively aggregates these into a single proof that can be verified quickly on-chain. The benefit is that even if you need to run statistics, filtering, or feature engineering over millions of storage slots, what finally returns to the chain is one small, precise proof, with stable verification costs.
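The map-then-aggregate shape of ZKMR can be sketched in a few lines of Python. This is an illustrative model, not Lagrange's implementation: each shard runs a filter-and-sum 'map' step, and the partial results are then combined pairwise in a tree, which is the structure recursive proof aggregation follows. The SHA-256 `commitment` field is a hypothetical stand-in for a sub-proof; in a real ZKMR step, each aggregation would verify its two child proofs inside a circuit so only the final proof needs checking on-chain, whereas re-checking these hashes would require all the data. The names `map_shard`, `reduce_pair`, and `zkmr` are made up for this example.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class Partial:
    total: int         # aggregated value for the covered slots
    count: int         # number of slots covered
    commitment: bytes  # stand-in for a (recursive) sub-proof

def _h(*parts: bytes) -> bytes:
    m = hashlib.sha256()
    for p in parts:
        m.update(p)
    return m.digest()

def map_shard(shard, threshold):
    # Map step: filter + sum inside one shard; the commitment binds inputs and output.
    total = sum(v for _, v in shard if v >= threshold)
    data = b"".join(s.to_bytes(8, "big") + v.to_bytes(8, "big") for s, v in shard)
    return Partial(total, len(shard), _h(b"leaf", data, total.to_bytes(16, "big")))

def reduce_pair(left, right):
    # Reduce step: combine two children (a real system would verify both sub-proofs here).
    total = left.total + right.total
    commitment = _h(b"node", left.commitment, right.commitment, total.to_bytes(16, "big"))
    return Partial(total, left.count + right.count, commitment)

def zkmr(slots, shard_size, threshold):
    if not slots:
        raise ValueError("need at least one slot")
    shards = [slots[i:i + shard_size] for i in range(0, len(slots), shard_size)]
    layer = [map_shard(s, threshold) for s in shards]  # independent, so parallelizable
    while len(layer) > 1:
        nxt = [reduce_pair(layer[i], layer[i + 1]) for i in range(0, len(layer) - 1, 2)]
        if len(layer) % 2:
            nxt.append(layer[-1])  # an odd node is carried up to the next round unchanged
        layer = nxt
    return layer[0]

if __name__ == "__main__":
    slots = [(i, i) for i in range(1000)]  # toy (slot, value) pairs
    root = zkmr(slots, shard_size=64, threshold=500)
    print(root.total, root.count)  # 374750 1000
```

Because each reduce step depends only on its two children, the aggregation tree has depth of about log2 of the shard count (four rounds for the 16 shards above), so shards can be processed in parallel and combined in a few rounds.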

These capabilities are not just concepts. In Fraxtal's case, for example, applications can verify 'the results of SQL queries over veFXS contracts on Ethereum mainnet or L2s', pulling holdings and weights scattered across different chains directly into contract logic. Cross-chain state reads, which used to be either slow or untrustworthy, become 'prove first, then use'.

The developer onboarding path is also improving. Lagrange has launched the Euclid public testnet, whose core idea is to index on-chain data into a database 'verifiably' and then run high-concurrency proofs and queries against it. The team has disclosed some engineering details (such as how many storage slots a single batch can process and the end-to-end latency) to show that the approach scales. For developers, the experience is closer to 'querying a database from within a contract' than to extracting data bit by bit from raw logs.

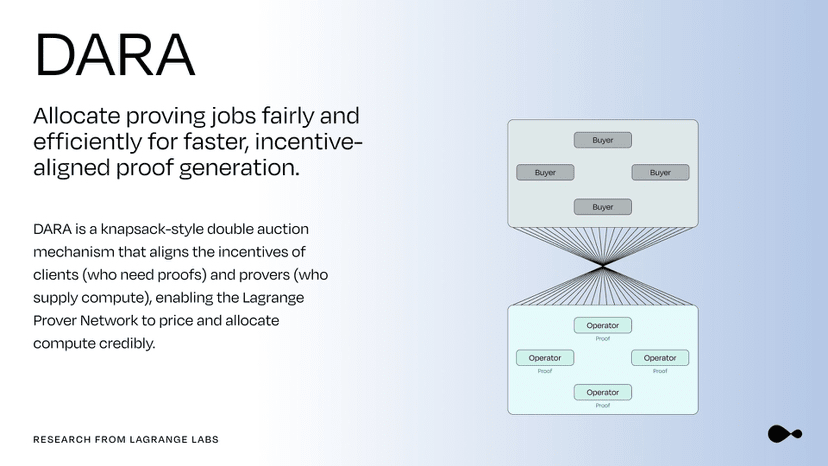

On the question of 'who generates the proofs', Lagrange has built the ZK Prover Network. Rather than a single point of failure, it is run by a group of leading validator and infrastructure teams operating production-grade provers. The network has explicit liveness requirements and economic penalties tied to 'timely delivery of proofs', so proof timing no longer 'depends on luck', and upper-layer applications don't have to worry about 'queue stalls'.
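A minimal sketch of how 'timely delivery' can be enforced economically. Everything here is an assumption for illustration: deadlines measured in block heights, a flat penalty in basis points of the prover's stake, and at most one penalty per task. Lagrange's actual prover-network rules are not described in this post, and `Prover`, `Task`, and `settle` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Prover:
    name: str
    stake: int  # collateral at risk (hypothetical units)

@dataclass
class Task:
    task_id: int
    prover: str                         # prover assigned to this proof request
    deadline: int                       # block height by which the proof is due (inclusive)
    delivered_at: Optional[int] = None  # block height of delivery, None if not yet delivered
    settled: bool = False               # set once a penalty has been applied

def settle(provers: Dict[str, Prover], tasks: List[Task], current_block: int, penalty_bps: int = 1_000) -> List[int]:
    """Penalize provers whose proofs are overdue or were delivered late.

    penalty_bps is a hypothetical penalty in basis points of stake (1_000 = 10%).
    Returns the ids of tasks penalized in this call.
    """
    penalized = []
    for t in tasks:
        if t.settled:
            continue  # never penalize the same task twice
        overdue = t.delivered_at is None and current_block > t.deadline
        late = t.delivered_at is not None and t.delivered_at > t.deadline
        if overdue or late:
            p = provers[t.prover]
            p.stake -= p.stake * penalty_bps // 10_000
            t.settled = True
            penalized.append(t.task_id)
    return penalized

if __name__ == "__main__":
    provers = {"p1": Prover("p1", 1000), "p2": Prover("p2", 1000)}
    tasks = [Task(1, "p1", deadline=100, delivered_at=95), Task(2, "p2", deadline=100)]
    print(settle(provers, tasks, current_block=101), provers["p2"].stake)  # [2] 900
```

The point of the design is that a missed deadline has a predictable cost, which gives operators a reason to provision enough proving capacity instead of letting requests queue up.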

An even more advanced area is verifiable AI (zkML). Lagrange's DeepProve aims to 'make every step of AI inference provable', and the team recently announced a milestone: generating a cryptographic proof for a complete LLM inference. For teams that want to feed model outputs into contracts for settlement, payouts, or access control, this effectively turns 'black-box conclusions' into 'white-box certificates'.

Putting these threads together, you'll find that the goal is not to 'reinvent a chain' but to standardize verifiable computation: run heavy computation over data from one or more chains, generate proofs off-chain, accept them quickly on-chain, and let cross-chain components consume them directly.

If public chains are the 'engines', Lagrange is more like an external 'booster': it keeps cross-chain, data-intensive, and AI-powered applications from being held back by performance and trust barriers.

Lagrange doesn't want you to 'compute everything on-chain'. Instead, it lets you save on-chain computing power while still bringing back 'trustworthiness', and that is the key to scaling complex Web3 applications.