Welcome to the (Web3 Trend Indicator) series, a comprehensive analysis and interpretation of recent new technologies, protocols, and products in the Web3 industry by Shijun.

I started this series because AI has doubled my usual research speed for new projects. I think the value of humans will increasingly lie in thinking, judgment, and inspiration.

Therefore, this series will help you grasp core changes and assess the trends they might trigger from three perspectives: industry background → technical principles → potential impacts.

The author's views lean pessimistic. They do not constitute investment or trading advice, nor are they aimed at any particular project team.

Jito BAM | 'Block Sorting + Pluginized Block Building Market' on Solana

What is it:

In simple terms, BAM is a platform for 'block building' on Solana. Similar to BuilderNet on Ethereum, which implements PBS (separating block builders from validators), its goal is to order transactions more systematically, combat MEV, and reduce the risk of centralized abuse.

Who launched it and what background:

BAM is led by the Jito team. Jito is the largest transaction auction platform on Solana, holds 90% of the validator client market, and has strong influence over the ecosystem. For my earlier detailed research, see: A Comprehensive Report on the Evolution and Controversies of MEV on Solana.

The lineup of participants is also very strong: Triton One, SOL Strategies, Figment, Helius, Drift, Pyth, DFlow, etc. Clearly, this is a joint action by Solana's official and mainstream projects.

The motivation is easy to understand. Solana is under pressure from the explosive growth of Hyperliquid, a 'native order book chain' whose core value is that it is very friendly to market maker operations. Solana's own design makes such targeted optimizations hard to implement. But if the transactions in an entire block can be customized, BAM can break through the limits of Solana's linear block production and so benefit the optimization of various DeFi scenarios.

The official deployment plan is: initially operated by Jito Labs with a small number of validators participating; expanded to more node operators in the mid-term, aiming to cover 30%+ of network staking; ultimately, the code will be open-sourced and governed in a decentralized manner.

In addition, the industry's narrative trend toward 'verifiable fairness' makes it easy for BAM to win support from validators and protocol teams. So I believe BAM was introduced mainly in pursuit of fairness optimizations along the lines of TEE + PBS.

What principle is used to achieve it:

To understand its value, you first need to understand a characteristic of Solana's PoH algorithm.

That is, its block production is actually stepwise and linear: within one 400ms slot there are 64 ticks, and as each tick arrives the current transactions are sent out and will not change unless they are rolled back. This differs from Ethereum's model of 'arranging the entire block, reaching consensus, and then synchronizing'.
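To make the contrast concrete, here is a minimal sketch of the two models. It only uses the 400ms and 64 figures quoted above; the function names and the sample transactions are my own illustration, not Solana or Ethereum code.

```python
SLOT_MS = 400         # slot duration quoted in the text
TICKS_PER_SLOT = 64   # number of sub-intervals per slot quoted in the text


def tick_duration_ms(slot_ms: float = SLOT_MS, ticks: int = TICKS_PER_SLOT) -> float:
    """Length of one sub-interval of a slot, in milliseconds."""
    return slot_ms / ticks


def streamed_order(arrivals):
    """Stepwise model: emit transactions tick by tick, in arrival order.

    `arrivals` is a list of (tick_index, tx) pairs. Once a tick has been
    emitted, later transactions cannot be placed ahead of it.
    """
    emitted = []
    for tick in range(TICKS_PER_SLOT):
        emitted.extend(tx for t, tx in arrivals if t == tick)
    return emitted


def whole_block_order(arrivals, sort_key):
    """Whole-block model: collect everything first, then apply one global rule."""
    return sorted((tx for _, tx in arrivals), key=sort_key)


arrivals = [(0, "swap_a"), (1, "oracle_update"), (1, "swap_b")]
print(tick_duration_ms())        # 6.25
print(streamed_order(arrivals))  # ['swap_a', 'oracle_update', 'swap_b']
print(whole_block_order(arrivals, lambda tx: tx != "oracle_update"))
# ['oracle_update', 'swap_a', 'swap_b']
```

In the stepwise model the oracle update cannot jump ahead of `swap_a`, because that transaction has already been sent out; in the whole-block model a global rule can still move it to the front.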

Because Jito already dominates the validator client market, it can easily upgrade a large number of validator clients, which raises the acceptance of the BAM system among validators.

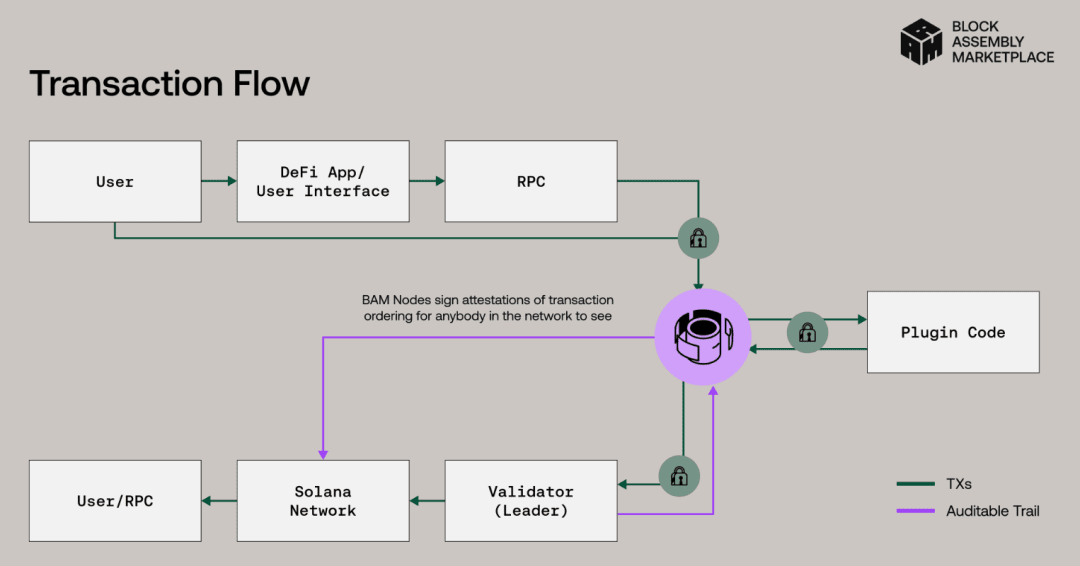

Looking at BAM's system structure in the figure, the purple part in the middle and the Plugin code part on the right are BAM.

With BAM, transactions on Solana are no longer sent to the Leader one by one. Instead, the transaction order of 'this entire block' is first arranged inside a TEE (according to fixed sorting rules implemented as Plugin code), and the result is then handed to the validators in one go.

The validators must ultimately provide proof to the TEE, confirming that they have allocated all of the block space exclusively to this order flow market.

Here, a notable feature is the plugin function, which allows rules to be 'hardcoded' into the TEE's transaction ordering. This has real practical significance:

For example, oracle platforms need their price updates fixed as the first transaction in a block, which reduces the randomness of on-chain price updates and avoids problems caused by stale prices. For a DEX, a plugin can be written to identify transactions that are very likely to fail and hold them back in the TEE instead of packaging them, letting them expire and thereby reducing the fees paid for failed transactions.
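The two plugin ideas above can be sketched as follows. This is only an illustration under my own assumptions: the `Tx` fields, the plugin signature, and the `fail_probability` estimate are hypothetical and are not taken from BAM's actual plugin interface.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Tx:
    tx_id: str
    kind: str                # hypothetical label, e.g. "oracle_update" or "swap"
    tip: int                 # priority tip in arbitrary units
    fail_probability: float  # hypothetical estimate from a pre-simulation step


Plugin = Callable[[List[Tx]], List[Tx]]


def oracle_first(txs: List[Tx]) -> List[Tx]:
    """Move oracle price updates to the front, keeping relative order otherwise."""
    return sorted(txs, key=lambda t: t.kind != "oracle_update")


def hold_back_likely_failures(threshold: float) -> Plugin:
    """Build a plugin that leaves out transactions that are likely to fail."""
    def plugin(txs: List[Tx]) -> List[Tx]:
        return [t for t in txs if t.fail_probability < threshold]
    return plugin


def build_block(pending: List[Tx], plugins: List[Plugin]) -> List[Tx]:
    """Order the whole block by tip, then apply each plugin in sequence."""
    ordered = sorted(pending, key=lambda t: t.tip, reverse=True)
    for plugin in plugins:
        ordered = plugin(ordered)
    return ordered


pending = [
    Tx("swap-1", "swap", tip=50, fail_probability=0.05),
    Tx("price-1", "oracle_update", tip=1, fail_probability=0.0),
    Tx("swap-2", "swap", tip=90, fail_probability=0.95),
]
block = build_block(pending, [hold_back_likely_failures(0.9), oracle_first])
print([t.tx_id for t in block])  # ['price-1', 'swap-1']
```

The point of the sketch is that the rules operate on the whole block at once, which is only possible when ordering happens before the block is handed to the validator.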

It can coexist with the existing Solana block production process: ordinary order flow, Jito bundles, and BAM still operate in parallel. BAM only means that 'for certain blocks, the validator accepts the entire block from BAM'.

How to evaluate it:

I believe: This is a path that is 'strong in lineup, strong in narrative, and focused on scenarios', but I am not optimistic about it becoming a mainstream market path.

The reasons are similar to those behind the long-term struggles of BuilderNet on Ethereum and of the once highly anticipated MEV-Share.

In reality, TEEs are expensive and their QPS tops out in the thousands (back in 2013 a TEE had only 128MB of memory, and although the technology has developed a lot since then, it can still only reach QPS in the thousands). Even so, 40% of Ethereum's blocks are currently built inside TEEs.

Solana's data and computational throughput, however, are much heavier. You need to stack a lot of TEEs to handle the load, along with full disaster recovery, memory, and bandwidth operations. If the project lacks sustained economic incentives, it will be very hard to achieve positive returns.

In fact, Jito's earnings are not high (compared to high-yield on-chain protocols). For example, in the second quarter of 2025, Jito earned only 22,391.31 SOL (about 4 million USD) through tips. Once Solana's massive transaction volume migrates over, TEE downtime is inevitable. TEEs also have failure modes such as memory crashes and cleared storage, which increase the risk of downtime and could cause transactions to be lost at scale.

However, it has the potential for 'killer high-priced selling points': for example, oracle sequencing and failure exemption are all 'visible' experiential dividends. Market makers and enterprise-level trading terminals will pay for it. Moreover, there is also Solana's official conceptual support involved, making it a good avenue for gaining buzz.

Finally: BAM is not positioned to handle 24/7 throughput; it is a tool for 'providing deterministic guarantees for key blocks'. However, many deterministic guarantees depend on absolute certainty rather than 30% certainty. In a non-100% scenario, even 99% counts as 0%. This is the key factor in the decisions of major Web3 projects.
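A quick worked example shows why partial coverage undermines a 'deterministic' guarantee. It rests on a simplifying assumption of my own: each key block independently lands on a BAM validator with probability equal to BAM's coverage share. The function name is hypothetical.

```python
def all_covered_probability(coverage: float, key_blocks: int) -> float:
    """Chance that every one of `key_blocks` blocks is produced under BAM.

    Assumes (for illustration only) that each block is covered independently
    with probability `coverage`.
    """
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must be between 0 and 1")
    if key_blocks < 0:
        raise ValueError("key_blocks must be non-negative")
    return coverage ** key_blocks


for coverage in (0.30, 0.99, 1.00):
    print(coverage, round(all_covered_probability(coverage, 100), 4))
```

Under this assumption, at 30% coverage the chance of three key blocks in a row being covered is 2.7%, and even at 99% coverage the chance that 100 key blocks are all covered is only about 37%. Only 100% coverage gives a guarantee a protocol can build on.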

BRC 2.0 | 'Mapping EVM': Programmable capabilities driven by BTC

What is it:

BRC 2.0 will be activated on September 2, 2025. I understand it as a 'BTC-driven, EVM-executed' dual-chain shadow system. Note that it is not BRC20; the name means the second generation of BRC. For the background on BRC20, you can refer to: Interpretation of Bitcoin Ordinals Protocol and BRC20 Standard Principles, Innovations, and Limitations.

The core of 2.0 is that you write 'instructions' on BTC using inscriptions or commit-reveal, and the indexer runs a 'modified version of the EVM' to execute the corresponding deployments and calls. Gas is not charged in the EVM (the parameters are kept but not priced); transaction fees are paid within the BTC transaction.

It is basically similar to the Alkanes protocol (methane): Alkanes writes transaction instructions into the BTC OP_RETURN field and runs them in a WASM virtual machine, while BRC 2.0 runs them in the EVM.

Who launched it and what background:

The initiator is bestinslot, a platform that gained popularity during the BTC inscription era. It continues the idea of BRC-20: not changing BTC consensus, but trying to overlay 'programmability'.

The industry background is that over the past two years the narrative around BTC programmability/L2 has been hot, and everyone has been looking for an engineering path that works. But the gap between market trends and development progress has been too large, so models like brc2.0 and alkanes have only emerged this year.

The market buzz is somewhat limited because the BTC ecosystem has always lacked a cohesive force to guide it, and many protocols can be derived from other protocols. So brc2.0 very likely has no actual relationship with brc20.

What principle is used to achieve it:

It runs the EVM logic inside the indexer, not on the BTC chain and not as a separate chain. Note that it does not count as a chain, since there is no consensus.

The EVM address that a user controls is derived by hashing the user's BTC address, which maps it to a 'virtual EVM address'.
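As a rough sketch of this mapping: hash the BTC address and keep 20 bytes, which is the length of an EVM address. The concrete hash function and input encoding used by brc2.0 are not stated here, so the SHA3-256 call below is only a stand-in (Ethereum tooling normally uses Keccak-256, which is a different function and is not in Python's standard library), and `virtual_evm_address` is a hypothetical name.

```python
import hashlib


def virtual_evm_address(btc_address: str) -> str:
    """Map a BTC address string to a 20-byte, EVM-style hex address.

    Assumption: SHA3-256 over the UTF-8 address string stands in for
    whatever hash and encoding the real protocol uses.
    """
    digest = hashlib.sha3_256(btc_address.encode("utf-8")).digest()
    return "0x" + digest[-20:].hex()  # keep the last 20 bytes, as EVM addresses are 20 bytes


# Placeholder strings, not real BTC addresses.
addr = virtual_evm_address("bc1q-example-address")
print(addr, len(addr))
```

The property that matters is determinism: every indexer derives the same virtual address from the same BTC address, so no extra key registration is needed.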

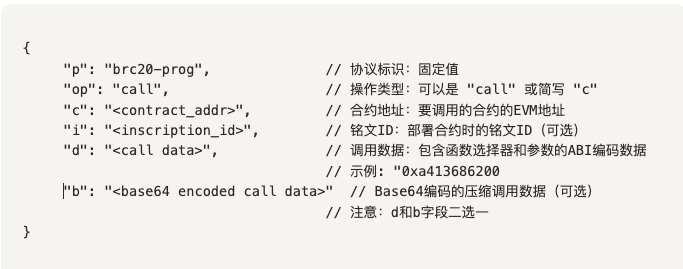

Operating this system is actually quite similar to the asset control logic in BRC20: both are just a JSON string. In brc2.0, it is defined as follows:

As you can see, you encode instructions on BTC, carrying various bytecode/call data, and they are replayed and executed in the EVM.
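The replay idea can be sketched with a toy indexer. Everything here is my own illustration: the JSON keys (`op`, `data`, `contract`), the address derivation, and the `ToyIndexer` class are hypothetical and are not the real brc2.0 field names or node code. No EVM is actually executed; the sketch only records deployments and calls.

```python
import hashlib
import json
from typing import Dict, List, Tuple


class ToyIndexer:
    """Replays JSON instructions found in BTC transactions, in chain order."""

    def __init__(self) -> None:
        self.contracts: Dict[str, str] = {}       # toy address -> bytecode hex
        self.calls: List[Tuple[str, str]] = []    # (contract, calldata) in order

    def apply(self, sender: str, inscription_text: str) -> None:
        try:
            msg = json.loads(inscription_text)
        except json.JSONDecodeError:
            return  # not a valid instruction, so it is ignored
        if not isinstance(msg, dict):
            return
        op, data = msg.get("op"), msg.get("data", "")
        if not isinstance(data, str):
            return
        if op == "deploy":
            # Toy address derivation; a real EVM derives it differently.
            seed = f"{sender}:{len(self.contracts)}:{data}".encode("utf-8")
            address = "0x" + hashlib.sha256(seed).hexdigest()[:40]
            self.contracts[address] = data
        elif op == "call":
            contract = msg.get("contract")
            if isinstance(contract, str) and contract in self.contracts:
                self.calls.append((contract, data))


def replay(history: List[Tuple[str, str]]) -> ToyIndexer:
    """Rebuild the full state from scratch by replaying every instruction."""
    indexer = ToyIndexer()
    for sender, text in history:
        indexer.apply(sender, text)
    return indexer
```

Since there is no consensus layer, correctness rests entirely on every indexer replaying the same BTC history with the same rules and arriving at the same state.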

Moreover, signatures and Gas have also changed: the EVM layer sets gasPrice=0, so gas only serves as a resource limit, and the actual transaction fee is reflected in the BTC transaction fee.

In fact, this is very risky. I specifically had AI search through their node code, and found no protection for 'call depth/step limit'. So theoretically, a contract with 'infinite recursion/self-calling' could crash this VM (of course, this protection isn’t hard to add: just set a maximum depth).
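To show how small such a guard is, here is a toy call executor with both a depth limit and a step limit. It is not the project's node code: the contract model (a name mapped to the list of contracts it calls) and all names are hypothetical. The default of 1024 mirrors the call depth limit of the standard EVM. The executor uses an explicit stack so that the guard, and not Python's own recursion limit, is what stops a runaway call chain.

```python
from typing import Dict, List


class LimitExceeded(Exception):
    """Raised when a call chain goes too deep or runs for too many steps."""


def run(contracts: Dict[str, List[str]], entry: str,
        max_depth: int = 1024, max_steps: int = 100_000) -> int:
    """Execute `entry` and everything it calls; return the number of calls made."""
    stack = [(entry, 0)]  # (contract name, call depth)
    steps = 0
    while stack:
        name, depth = stack.pop()
        if depth > max_depth:
            raise LimitExceeded(f"call depth {depth} exceeds {max_depth}")
        steps += 1
        if steps > max_steps:
            raise LimitExceeded(f"more than {max_steps} steps")
        # Push callees in reverse so they are executed in their listed order.
        for callee in reversed(contracts.get(name, [])):
            stack.append((callee, depth + 1))
    return steps


print(run({"a": ["b", "b"], "b": []}, "a"))  # 3

try:
    run({"loop": ["loop"]}, "loop")  # a contract that calls itself forever
except LimitExceeded as err:
    print("stopped:", err)
```

Without the two checks, the self-calling contract would keep the loop running until the process is killed, which is exactly the crash scenario described above.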

How to evaluate it:

First, the team knows how to name things. At the very least, brc2.0 will generate more buzz than a brand-new protocol name would, much like the recent resurgence of RGB.

Secondly, it is not completely unrelated to brc20, since its protocol design philosophy and field patterns are fundamentally similar, but this is not a matter of copyright. However, I have not seen the original author of brc20 standing behind it, so the correlation should not be significant.

Finally, all platforms exploring programmability may want to share the value of this world-class consensus. However, I believe that BTC should not pursue programmability, as any pursuit will inevitably fall behind the optimizations of various high-speed chains in terms of functionality and experience.

Moreover, once programmability is built into BTC itself, BTC will run into a valuation trap. A project with practical applications can be valued by PE, whereas BTC's strength currently lies in its limited-supply model. A limited-supply asset cannot be valued that way; it simply has a price and a consensus around that price. It is precisely BTC's inherent limitations that have contributed to its success.

EIP-7999 | Ethereum Multidimensional Fee Market Proposal

What is it:

The proposal led by Vitalik is certainly worth a look. Additionally, in the latest EIP, it has been renamed from EIP-0000 to EIP-7999, so this article will keep both names in use.

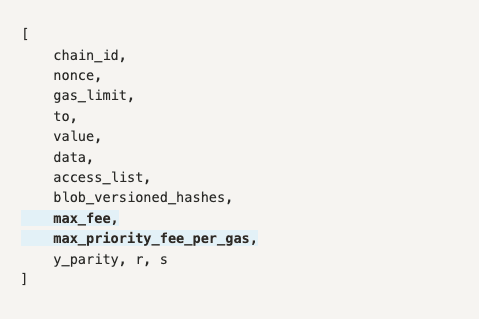

This is a new transaction type proposed against the backdrop of 'transaction fee splitting' after EIP-4844 (a single transaction carries a price for blobs, a price for calldata, and an execution price from EIP-1559). It consists of a 'total price cap + a vector of per-resource prices'.

You can understand it as: packaging all resource quotes at once, making a unified semantic bid, with the aim of solving the problem of too many pricing dimensions on-chain.

Who launched it and what background:

Vitalik has promoted this direction through multiple articles. The EIP originally carried a number with 'four zeros' and was later renumbered to 7999, which is a less striking name. He has been thinking about this direction for some time, as his posts from 2022 and 2024 show.

Why propose it now?

Because wallets, routers, and auction pricing systems have clearly felt the fragmented experience of the 'multi-price system'. Each block only has 6 blobs, so blob transactions have to bid for space; the transactions themselves still follow EIP-1559; and calldata pricing, dating from 2015, charges different prices for zero and non-zero bytes. L2 developers have been pushed into a corner because they must set an independent fee cap for each resource dimension. If any one cap is set too low, the entire transaction may fail: even when the user's total fee budget is sufficient, a sudden increase in the base fee of a single resource can block execution.

What principle is used to achieve it:

The proposal plans to introduce a unified multidimensional fee market. The core design lets users set a single max_fee parameter (replacing the multiple max_fee_per_gas-style caps on different fields). During EVM execution, this fee is dynamically allocated among the different resources (EVM gas, blob gas, calldata gas).
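The difference between per-dimension caps and a single budget can be shown with a simplified model. This is my own sketch, not the EIP's specification: it ignores priority fees and refunds, and the resource names, numbers, and function names are illustrative assumptions.

```python
from typing import Dict

Amounts = Dict[str, int]


def per_dimension_ok(usage: Amounts, base_fee: Amounts, fee_cap: Amounts) -> bool:
    """Current style: every resource must clear its own cap."""
    return all(base_fee[r] <= fee_cap[r] for r in usage)


def per_dimension_budget(usage: Amounts, fee_cap: Amounts) -> int:
    """The most the user agreed to pay in total under per-dimension caps."""
    return sum(usage[r] * fee_cap[r] for r in usage)


def total_cost(usage: Amounts, base_fee: Amounts) -> int:
    """What the transaction actually costs at the current base fees."""
    return sum(usage[r] * base_fee[r] for r in usage)


def single_max_fee_ok(usage: Amounts, base_fee: Amounts, max_fee: int) -> bool:
    """Unified style: only the total cost has to fit within one budget."""
    return total_cost(usage, base_fee) <= max_fee


usage = {"execution": 100_000, "blob": 131_072, "calldata": 2_000}
fee_cap = {"execution": 20, "blob": 5, "calldata": 20}   # user's per-resource caps
base_fee = {"execution": 10, "blob": 8, "calldata": 10}  # the blob fee just spiked

budget = per_dimension_budget(usage, fee_cap)
print(per_dimension_ok(usage, base_fee, fee_cap))   # False: blob cap is too low
print(total_cost(usage, base_fee), "<=", budget)    # 2068576 <= 2695360
print(single_max_fee_ok(usage, base_fee, budget))   # True: same budget, one cap
```

With the same total budget, the per-dimension transaction is rejected because of the blob spike alone, while the single-cap transaction goes through since execution and calldata are cheaper than the user was prepared to pay.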

Achieving this will certainly not be easy. The plan is to introduce a new type of transaction with the following fields:

Clearly, this design is somewhat better. After all, the author found the gas fee design of ERC-4337 too complex to manage.

For details, see: From 4337 to 7702: An In-depth Interpretation of the Past and Future of the Ethereum Account Abstraction Track

How to evaluate it:

I believe this direction is promising. Unifying fees is meaningful, will make future L2/L3 development much easier, and aligns well with Ethereum's overall strategic direction.

However, complexity will clearly increase, and engineering advancement also requires a more stable rhythm. This proposal will change block headers, RLP encoding, limits, etc., which is not just a hard fork level change; it will also require full-chain adaptation from other platforms, especially many wallets.

Although they can choose not to support this type of transaction, they still need to be able to parse the status of such transactions.

Thus, it certainly won't land in the short term, and probably not until one or two major hard forks from now. However, Vitalik's two articles on the fee market offer very profound economic reflections that are worth close examination.