Why do we need an open robot era?

Within the next 5–8 years, the number of robots on Earth is expected to exceed 1 billion, marking the turning point from 'single-machine demonstrations' to a 'social division of labor'. Robots will no longer be only mechanical arms on assembly lines; they will also be 'colleagues, teachers, and partners' that can perceive, understand, decide, and collaborate with humans.

In recent years, robotic hardware has developed rapidly, like growing muscle: more dexterous hands, steadier gaits, and richer sensors. But the real bottleneck lies not in metal and motors; it lies in giving robots a mind that can share and collaborate:

Software from different manufacturers is incompatible, and robots cannot share skills and intelligence;

Decision logic is locked within closed systems, making external verification or optimization impossible;

Centralized control architectures limit the speed of innovation and raise the cost of trust.

This fragmentation makes it difficult for the robotics industry to turn progress in AI models into replicable productivity: single-machine demos are endless, but cross-device migration, verifiable decisions, and standardized collaboration are missing, so deployments are hard to scale. OpenMind aims to solve this 'last mile'. Our goal is not to create a robot that dances better, but to provide a unified software foundation and collaboration standards for the world's vast number of heterogeneous robots, in order to:

Enable robots to understand context and learn from each other;

Allow developers to quickly build applications on an open-source, modular architecture;

Ensure safe collaboration and settlement between humans and machines under decentralized rules.

In short, OpenMind is building a universal operating system for robots, enabling them not only to perceive and act but also to collaborate safely and at scale in any environment through decentralized cooperation.

Who is betting on this path: $20M funding and a global lineup

OpenMind has raised $20 million in funding (Seed + Series A), led by Pantera Capital, with backing from leading technology and capital players worldwide:

Western technology and capital ecosystem: Ribbit, Coinbase Ventures, DCG, Lightspeed Faction, Anagram, Pi Network Ventures, Topology, Primitive Ventures, Amber Group, and others, which have long focused on crypto and AI infrastructure and are betting on the underlying paradigm of the 'agent economy and machine internet';

Eastern industrial strength: Sequoia China and others, deeply involved in the robotics supply chain and manufacturing, understand the full difficulty and barriers of 'building a machine and delivering it at scale'.

Meanwhile, OpenMind is in close communication with traditional capital market participants such as KraneShares to explore incorporating the long-term value of 'robots + intelligent agents' into structured financial products, linking the crypto and equity markets. In June 2025, when KraneShares launched its global humanoid and embodied intelligence index ETF (KOID), it chose the humanoid robot 'Iris', jointly customized by OpenMind and RoboStore, to ring the opening bell at Nasdaq, the first time in the exchange's history that a humanoid robot performed this ceremony.

As Pantera Capital partner Nihal Maunder said:

If we expect intelligent machines to operate in open environments, we need an open intelligent network. What OpenMind is doing for robots is akin to what Linux does for software and Ethereum does for blockchain.

Team and Advisors: From Laboratory to Production Line

Jan Liphardt, founder of OpenMind, is an associate professor at Stanford University and a former Berkeley professor. He has a long research record in data and distributed systems and deep experience in both academia and engineering. He advocates open-source reuse, replacing black boxes with auditable and traceable mechanisms, and integrating AI, robotics, and cryptography across disciplines.

OpenMind's core team comes from institutions such as OKX Ventures, Oxford Robotics Institute, Palantir, Databricks, and Perplexity, covering robotic control, perception and navigation, multi-modal and LLM scheduling, distributed systems, and on-chain protocols. An advisory team of experts from academia and industry (such as Stanford robotics head Steve Cousins, Bill Roscoe from the Oxford Blockchain Center, and Alessio Lomuscio, professor of secure AI at Imperial College) helps ensure the 'safety, compliance, and reliability' of the robots.

OpenMind's solution: A two-layer architecture, one shared order

OpenMind has built a reusable infrastructure that enables robots to collaborate and communicate across devices, manufacturers, and even national borders:

Device-side: OM1, an AI-native operating system for physical robots, connects the entire chain from perception to execution into a closed loop, allowing different types of machines to understand the environment and complete tasks;

Network-side: FABRIC, a decentralized collaboration network, provides identity, task allocation, and communication mechanisms so that robots can recognize each other, allocate tasks, and share state during collaboration.

This combination of 'operating system + network layer' allows robots not only to act independently but also to collaborate, align processes, and complete complex tasks together within a unified collaboration network.

OM1: An AI-native operating system for the physical world

Just as smartphones need iOS or Android to run applications, robots also need an operating system to run AI models, process sensor data, make reasoning decisions, and execute actions.

OM1 was built for this purpose. It is an AI-native operating system for real-world robots, enabling them to perceive, understand, plan, and complete tasks in various environments. Unlike traditional, closed robotic control systems, OM1 is open-source, modular, and hardware-agnostic, and it can run on various form factors such as humanoids, quadrupeds, wheeled robots, and robotic arms.

Four core stages: From perception to execution

OM1 breaks robotic intelligence down into four general steps: Perception → Memory → Planning → Action. OM1 fully modularizes this process and connects the steps through a unified data language, so that intelligent capabilities can be composed, replaced, and verified.

The architecture of OM1

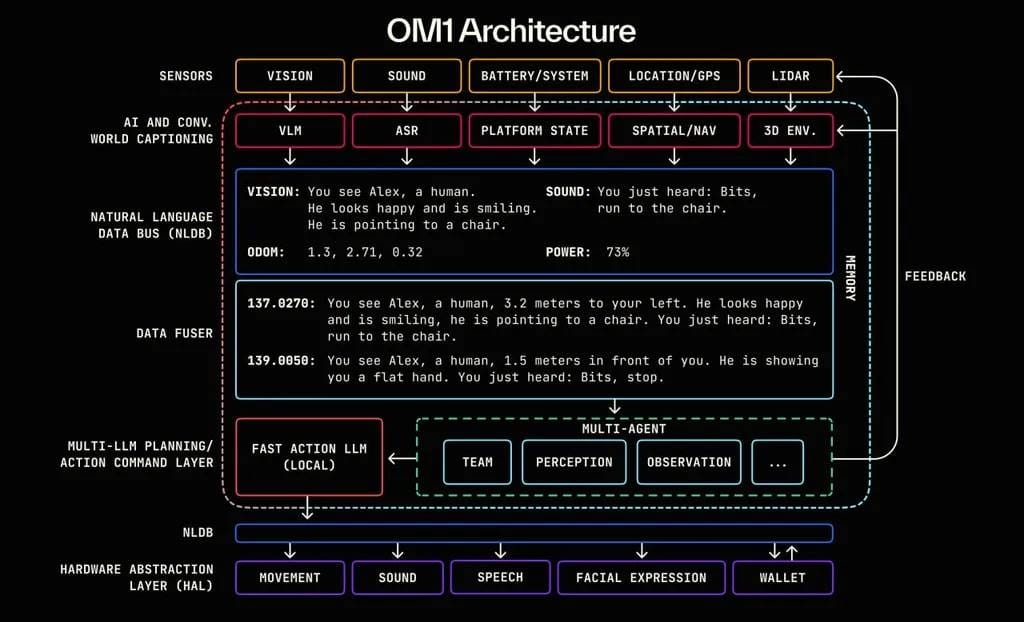

Specifically, the architecture of OM1 consists of seven layers:

Sensor Layer collects information: Cameras, LIDAR, microphones, battery status, GPS, and other modalities serve as perception inputs.

AI + World Captioning Layer translates information: Multi-modal models convert visual, audio, and state data into natural language descriptions (e.g., 'You see a person waving').

Natural Language Data Bus (NLDB) transmits information: All perceptions are converted into timestamped language fragments and passed between different modules.

Data Fuser combines information: Integrates multi-source inputs to generate the complete context (the prompt) needed for decision-making.

Multi-AI Planning/Decision Layer generates decisions: Multiple LLMs read the context and generate action plans based on on-chain rules.

NLDB downstream channel: Transmits decision results to the hardware execution system via a language intermediary layer.

Hardware Abstraction Layer takes action: Converts language instructions into low-level control commands that drive hardware execution (movement, speech output, transactions, etc.).
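The flow through these layers can be illustrated with a minimal Python sketch. It is not OM1's actual code or API: the names `Fragment`, `LanguageBus`, `fuse`, `plan`, `HAL`, and `step` are hypothetical, the planner is a keyword-matching placeholder where a real system would query one or more LLMs, and the hardware commands are plain strings.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Fragment:
    """One timestamped natural-language message on the bus (hypothetical type)."""
    timestamp: float
    source: str
    text: str


class LanguageBus:
    """Toy stand-in for a natural language data bus: an in-memory list."""

    def __init__(self) -> None:
        self._fragments: List[Fragment] = []

    def publish(self, fragment: Fragment) -> None:
        self._fragments.append(fragment)

    def since(self, t0: float) -> List[Fragment]:
        """Return fragments with timestamp >= t0, oldest first."""
        return sorted(
            (f for f in self._fragments if f.timestamp >= t0),
            key=lambda f: f.timestamp,
        )


def fuse(fragments: List[Fragment], rules: List[str]) -> str:
    """Data fuser: merge rules and recent fragments into one prompt string."""
    lines = ["Rules:"] + [f"- {r}" for r in rules]
    lines += ["Observations:"] + [
        f"[{f.timestamp:.1f}s] {f.source}: {f.text}" for f in fragments
    ]
    return "\n".join(lines)


def plan(prompt: str) -> str:
    """Placeholder planner; a real system would call one or more LLMs here."""
    return "wave back" if "waving" in prompt else "idle"


# Hardware abstraction: map language instructions to (made-up) low-level commands.
HAL: Dict[str, Callable[[], str]] = {
    "wave back": lambda: "arm.trajectory(wave)",
    "idle": lambda: "noop",
}


def step(bus: LanguageBus, rules: List[str], t0: float = 0.0) -> str:
    """Run one fuse -> plan -> act cycle and return the issued command."""
    prompt = fuse(bus.since(t0), rules)
    return HAL[plan(prompt)]()


bus = LanguageBus()
bus.publish(Fragment(1.0, "camera", "You see a person waving"))
bus.publish(Fragment(0.5, "battery", "Battery at 80 percent"))
print(step(bus, ["Be polite to humans"]))  # prints "arm.trajectory(wave)"
```

Because every stage only exchanges text, any one of them (the captioner, the planner, the hardware mapping) could in principle be swapped without touching the others, which is the composability the layered design aims for.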

Quick to start, widely implemented

To turn an idea into a task executed by robots as quickly as possible, OM1 comes with these built-in tools:

Rapidly add new skills: Using natural language and large models, new behaviors can be added to robots in a matter of hours rather than through months of hard coding.

Multi-modal integration: Easily combines LiDAR, vision, sound, and other perception inputs, so developers do not have to write complex sensor fusion logic themselves.

Pre-configured large model interfaces: Built-in interfaces to language and vision models such as GPT-4o, DeepSeek, and VLMs, with support for voice interaction.

Wide software and hardware compatibility: Supports mainstream protocols such as ROS2 and Cyclone DDS and integrates with existing robot middleware. Unitree G1 humanoids, Go2 quadrupeds, Turtlebots, and robotic arms can all be connected directly.

Connect to FABRIC: OM1 natively supports identity, task coordination, and on-chain payments, allowing robots not only to complete tasks independently but also to participate in a global collaboration network.

Currently, OM1 has been deployed in multiple real-world scenarios:

Frenchie (Unitree Go2 quadruped robotic dog): Completed complex site tasks at the USS Hornet Defense Technology Exhibition 2024.

Iris (Unitree G1 humanoid robot): Conducted live human-robot interaction demonstrations at the EthDenver 2025 Coinbase booth and plans to enter college curricula across the U.S. through RoboStore's educational program.

FABRIC: A decentralized human-robot collaboration network

Even with a powerful brain, robots that cannot collaborate safely and reliably with each other can only work alone. In reality, manufacturers often build their own systems and go their own way, making skills and data impossible to share; collaboration across brands or even countries lacks trustworthy identities and standard rules. This raises some difficult questions:

Identity and location proof: How do robots prove who they are, where they are, and what they are doing?

Skills and data sharing: How are robots authorized to share data and invoke skills?

Defining control: How are the frequency, scope, and data-feedback conditions of skill usage set?

FABRIC is designed to solve these problems. It is a decentralized human-machine collaboration network built by OpenMind, providing a unified infrastructure for identity, tasks, communication, and settlement for robots and intelligent systems. You can think of it as:

Like GPS, letting robots know where the others are, whether they are close, and whether they are suitable for collaboration;

Like a VPN, letting robots connect securely without requiring public IPs or complex network settings;

Like a task scheduling system, automatically publishing and accepting tasks and recording the entire execution process.
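To make the "GPS plus task scheduler" analogy concrete, here is a minimal in-memory sketch in Python. It is not FABRIC's protocol or API: `Robot`, `Task`, and `TaskBoard` are hypothetical names, positions are assumed to be planar coordinates in meters, and matching simply picks the nearest robot that has the requested skill.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional, Tuple


@dataclass
class Robot:
    robot_id: str
    position: Tuple[float, float]  # planar coordinates in meters (assumed)
    skills: FrozenSet[str]


@dataclass
class Task:
    task_id: str
    skill: str
    location: Tuple[float, float]
    status: str = "published"
    assignee: Optional[str] = None


@dataclass
class TaskBoard:
    """Toy scheduler: publishes tasks, matches robots, records each event."""
    robots: Dict[str, Robot] = field(default_factory=dict)
    tasks: Dict[str, Task] = field(default_factory=dict)
    log: List[Tuple[str, str, str]] = field(default_factory=list)

    def register(self, robot: Robot) -> None:
        self.robots[robot.robot_id] = robot

    def publish(self, task: Task) -> None:
        self.tasks[task.task_id] = task
        self.log.append((task.task_id, "published", ""))

    def match(self, task_id: str, max_distance: float) -> Optional[str]:
        """Assign the nearest robot that has the skill and is within range."""
        task = self.tasks[task_id]
        candidates = [
            (math.dist(r.position, task.location), r.robot_id)
            for r in self.robots.values()
            if task.skill in r.skills
        ]
        in_range = [c for c in candidates if c[0] <= max_distance]
        if not in_range:
            return None
        _, robot_id = min(in_range)
        task.status, task.assignee = "accepted", robot_id
        self.log.append((task_id, "accepted", robot_id))
        return robot_id

    def complete(self, task_id: str) -> None:
        task = self.tasks[task_id]
        if task.status != "accepted":
            raise ValueError("only accepted tasks can be completed")
        task.status = "completed"
        self.log.append((task_id, "completed", task.assignee or ""))


board = TaskBoard()
board.register(Robot("go2-01", (0.0, 0.0), frozenset({"inspect"})))
board.register(Robot("g1-07", (3.0, 4.0), frozenset({"inspect", "clean"})))
board.publish(Task("t1", "inspect", (3.0, 3.0)))
print(board.match("t1", max_distance=5.0))  # prints "g1-07" (1 m away vs about 4.2 m)
```

A real network would also have to establish that the reported identities and positions are trustworthy; this sketch takes them as given.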

Core application scenarios

FABRIC is now adaptable to various practical scenarios, including but not limited to:

Remote control and monitoring: Safely control robots from anywhere without a dedicated network.

Robot-as-a-Service market: Summon robots as you would hail a ride, to complete tasks such as cleaning, inspection, and delivery.

Crowdsourced mapping and data collection: Vehicle fleets or robots upload real-time traffic conditions, obstacles, and environmental changes to generate shareable high-precision maps.

On-demand scanning/mapping: Temporarily call nearby robots to complete 3D modeling, architectural mapping, or evidence collection in insurance scenarios.

FABRIC ensures that 'who is doing what, where, and what has been completed' can be verified and traced, establishing clear boundaries for skill calls and task executions.
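The article does not say how this verification is implemented. As a generic illustration of a traceable record, the Python sketch below uses a hash chain, in which each entry commits to the one before it, so any later alteration is detectable. The function names, the record fields, and the choice of SHA-256 are assumptions for illustration, not FABRIC's design.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: Dict[str, str]) -> str:
    """Hash a record together with the hash of the previous entry."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: List[dict], record: Dict[str, str]) -> None:
    """Append a record, linking it to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)}
    )


def verify(log: List[dict]) -> bool:
    """Recompute every link; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


log: List[dict] = []
append(log, {"who": "g1-07", "what": "inspect", "where": "3.0,3.0", "status": "started"})
append(log, {"who": "g1-07", "what": "inspect", "where": "3.0,3.0", "status": "completed"})
print(verify(log))  # prints True

log[0]["record"]["who"] = "someone-else"  # tamper with history
print(verify(log))  # prints False
```

A hash chain only makes tampering evident to whoever holds a trusted copy of the latest hash; publishing that hash somewhere public, such as on a blockchain, is one way to let anyone perform the check.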

In the long run, FABRIC will become the App Store of machine intelligence: skills can be authorized and called upon globally, and the data generated from calls will feed back into the models, driving the continuous evolution of the collaboration network.

Web3 is writing 'openness' into the machine society

In reality, the robotics industry is consolidating at an accelerating pace, with a few platforms controlling hardware, algorithms, and networks while outside innovation is shut out. Decentralization matters because, regardless of who manufactures a robot or where it operates, collaboration, skill exchange, and reward settlement can take place in an open network without relying on a single platform.

OpenMind uses on-chain infrastructure to write collaboration rules, skill access permissions, and reward distribution methods into a public, verifiable, and improvable 'network order':

Verifiable identity: Each robot and operator will register a unique identity on-chain (ERC-7777 standard), with hardware features, responsibilities, and permission levels being transparent and traceable.

Public task allocation: Tasks are not assigned in a closed black box but are published, auctioned, and matched under public rules; every collaboration process generates cryptographic proofs with time and location, stored on-chain.

Automated settlement and profit sharing: After completing a task, the distribution of profits, release or deduction of insurance and deposits will be automatically executed, allowing any participant to verify the results in real-time.

Free flow of skills: On-chain contracts can set the call frequency and applicable devices for each new skill, protecting intellectual property while allowing skills to circulate freely worldwide.
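The skill rule in the last item, limits on call frequency and applicable devices, can be sketched as a simple policy check. The Python below is an off-chain stand-in, not a smart contract and not part of ERC-7777: the `SkillLicense` class, its fields, and the sliding-window rate limit are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List


@dataclass
class SkillLicense:
    """Hypothetical license: which device types may call a skill, and how often."""
    skill: str
    allowed_devices: FrozenSet[str]
    max_calls: int    # calls allowed per robot within one window
    window_s: float   # window length in seconds
    _calls: Dict[str, List[float]] = field(default_factory=dict)

    def authorize(self, robot_id: str, device_type: str, now: float) -> bool:
        """Return True and record the call if the policy allows it."""
        if device_type not in self.allowed_devices:
            return False
        # Keep only this robot's calls that still fall inside the window.
        recent = [
            t for t in self._calls.get(robot_id, []) if now - t < self.window_s
        ]
        allowed = len(recent) < self.max_calls
        if allowed:
            recent.append(now)
        self._calls[robot_id] = recent
        return allowed


lic = SkillLicense(
    skill="stair-climbing",
    allowed_devices=frozenset({"quadruped"}),
    max_calls=2,
    window_s=60.0,
)
print(lic.authorize("go2-01", "quadruped", now=0.0))   # prints True
print(lic.authorize("go2-01", "quadruped", now=10.0))  # prints True
print(lic.authorize("go2-01", "quadruped", now=20.0))  # prints False (limit reached)
print(lic.authorize("g1-07", "humanoid", now=20.0))    # prints False (device not allowed)
```

Encoding the same check in a public contract would let the skill's author and its callers inspect and verify the terms, which is the property the list above emphasizes.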

This is a collaborative order that all participants can use, supervise, and improve. For Web3 users, this means that the robot economy is anti-monopoly, composable, and verifiable from its inception. It is not only an opportunity in a new sector but also a chance to engrave 'openness' into the foundation of machine society.

Bringing embodied intelligence out of isolation

Whether patrolling hospital wards, learning new skills in schools, or completing inspections and modeling in urban neighborhoods, robots are gradually stepping out of 'showroom demonstrations' to become a stable part of human daily work. They operate 24 hours a day, adhere to rules, have memory and skills, and can naturally collaborate with humans and other machines.

Scaling these scenarios requires not only smarter machines but also a foundational order that allows them to trust, communicate, and collaborate with each other. OpenMind has laid the first 'roadbed' on this path with OM1 and FABRIC: the former enables robots to understand the world and act autonomously, while the latter allows these capabilities to flow within a global network. The next goal is to extend this path into more cities and networks, making machines reliable long-term partners in society.

OpenMind's route is clear:

Short-term: Complete the core function prototype of OM1 and the MVP of FABRIC, and launch on-chain identity and basic collaboration capabilities;

Mid-term: Implement OM1 and FABRIC in education, homes, and enterprises, connecting early nodes and building a developer community;

Long-term: Build OM1 and FABRIC into global standards, allowing any machine to connect to this open machine collaboration network just like accessing the internet, forming a sustainable global machine economy.

In the Web2 era, robots were often locked into the closed systems of a single manufacturer, with functions and data unable to flow across platforms. In the world OpenMind is building, they are equal nodes in an open network: able to join, learn, collaborate, and settle freely, forming a trustworthy global machine society together with humans. What OpenMind provides is the capability to scale this transformation.