For more than a decade, blockchains have been built on an assumption so deeply embedded that most people never noticed it: every transaction has a human behind it. Every wallet implies a person. Every signature implies intent, awareness, and responsibility. Even when bots entered the picture, they were treated as crude extensions of humans, borrowing private keys, API access, or centralized permissions that ultimately pointed back to someone clicking a button somewhere.

That assumption is quietly collapsing.

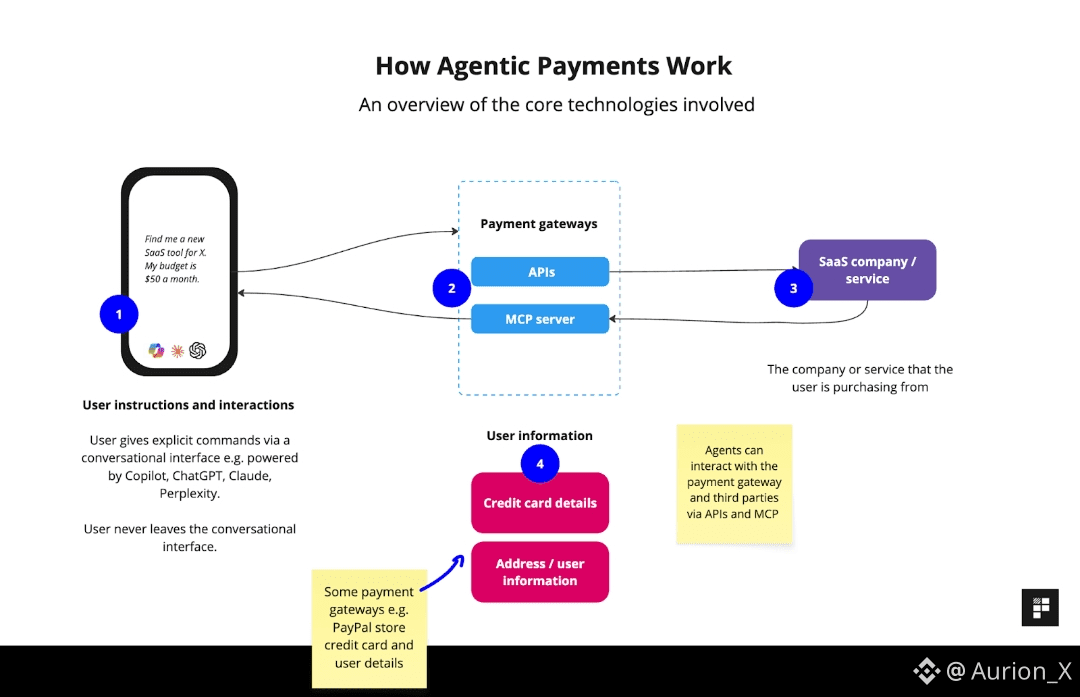

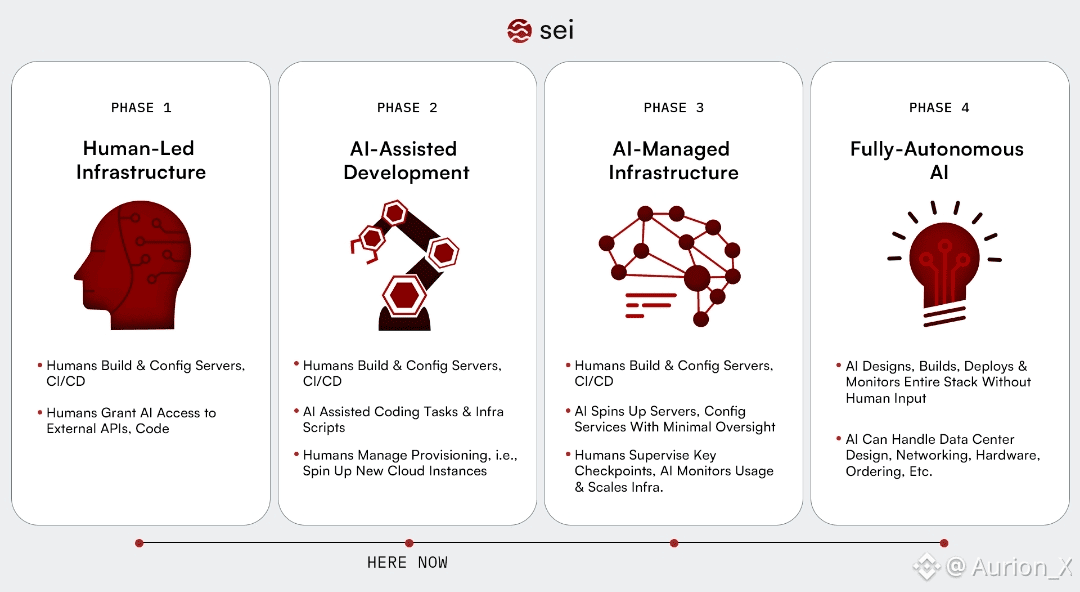

Software is no longer just assisting humans. It is acting. Autonomous agents already trade, route liquidity, monitor markets, purchase data, call APIs, rebalance portfolios, and coordinate tasks continuously. They do not sleep, hesitate, or ask for confirmation. They operate at a pace and scale that human-centered infrastructure was never designed to handle. What has been missing is not intelligence, but a financial and identity layer that treats these agents honestly instead of pretending they are just fast humans.

This is where Kite enters the picture, not as another generic Layer 1 or speculative playground, but as an infrastructure rethink driven by a simple realization: if software is going to spend money, blockchains must change their mental model of who the user actually is.

Most existing chains are optimized for episodic human behavior. A person sends a transaction, waits for confirmation, checks a balance, and moves on. Humans tolerate fee volatility because they can wait, retry, or decide a transaction is not worth it. Delays are annoying but acceptable. Uncertainty can be rationalized later. Autonomous agents do not work this way. They act continuously, make thousands of micro-decisions, and amplify inefficiencies instead of absorbing them. When fees spike unpredictably or confirmations lag inconsistently, agents do not slow down; they misbehave at scale.

Kite starts from this reality instead of trying to patch around it. Rather than chasing headline throughput or marketing-friendly metrics, it focuses on predictable execution, fast confirmation, and low, stable costs because those are the properties machines actually need. For an agent economy, reliability matters more than spectacle. Quiet consistency matters more than explosive numbers.

But speed alone is not the real problem. The deeper issue is delegation. People are not afraid of AI thinking. They are afraid of AI spending. Handing a machine unrestricted access to money feels dangerous because it is. Today’s workarounds prove this. Shared wallets, blanket permissions, API keys, and centralized dashboards all assume good behavior and hope nothing breaks. When something does break, the failure is usually catastrophic, draining funds or destroying trust in one moment.

Kite takes the opposite approach. It treats autonomy as something that must be constrained to be useful. Instead of asking users to trust agents, it designs agents so they are structurally incapable of exceeding what they were allowed to do. Autonomy becomes safe not because the agent behaves well, but because the system will not let it misbehave beyond defined limits.

This philosophy shows up most clearly in Kite’s identity architecture. Traditional wallets collapse identity, authority, and execution into a single key. That model barely works for humans and completely fails for machines. Kite separates identity into layers that mirror how responsibility works in the real world. There is the human, who remains the root authority and source of intent. There is the agent, which acts independently but only within explicit permissions. And there is the session, a short-lived execution context created for a specific task.

This separation limits how far any single failure can spread. Sessions expire automatically. Permissions can be revoked without touching the human’s core identity. An agent can be paused or replaced without collapsing the entire system. If something goes wrong, the blast radius is small. Accountability is preserved without requiring invasive oversight. Delegation stops feeling like a leap of faith and starts feeling like a controlled process.
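To make the layering concrete, here is a minimal sketch in Python. It is not Kite's actual API: the class names (`Human`, `Agent`, `Session`), the fields, and the `pay` method are all hypothetical, and amounts are plain integers standing in for the smallest unit of a stablecoin. The point is only to show how a spend can be refused by structure rather than by the agent's good behavior.

```python
from dataclasses import dataclass


@dataclass
class Human:
    """Root authority and source of intent (hypothetical model)."""
    name: str


@dataclass
class Agent:
    """Acts for a human, but only within an explicit total budget."""
    owner: Human
    budget: int            # most the agent may ever spend, in smallest units
    spent: int = 0
    revoked: bool = False  # the owner can flip this without touching their own identity


@dataclass
class Session:
    """Short-lived execution context created for one task."""
    agent: Agent
    limit: int             # cap for this session only
    expires_at: int        # time (in seconds) at which the session stops working
    spent: int = 0

    def pay(self, amount: int, now: int) -> bool:
        """Record the spend and return True only if every layer allows it."""
        if self.agent.revoked:
            return False                       # agent-level revocation
        if now >= self.expires_at:
            return False                       # session has expired
        if amount <= 0:
            return False                       # reject nonsensical amounts
        if self.spent + amount > self.limit:
            return False                       # session cap would be exceeded
        if self.agent.spent + amount > self.agent.budget:
            return False                       # agent budget would be exceeded
        self.spent += amount
        self.agent.spent += amount
        return True


alice = Human("alice")
bot = Agent(owner=alice, budget=100)
session = Session(agent=bot, limit=10, expires_at=60)

first = session.pay(4, now=0)     # within both limits
second = session.pay(7, now=1)    # would push the session past its cap of 10
late = session.pay(1, now=61)     # session has already expired
```

In this sketch a compromised or confused agent can lose at most what one session allows, and revoking the agent leaves the human's identity untouched. A real system would enforce these checks on-chain with signatures rather than in application memory.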

This design matters because agents will fail. They will misinterpret instructions. They will be manipulated. They will operate in adversarial environments. The question is not whether failure happens, but how much damage it causes when it does. Kite answers that question structurally instead of optimistically.

Payments themselves follow the same logic. Machines do not want surprises. They want predictability. Volatile fees and unstable units of account are noise that compounds into risk when decisions are automated. That is why Kite leans into stablecoin-native settlement and predictable execution. This is not ideology. It is usability. If an agent is deciding whether to pay for data, compute, or a service, cost must be a stable variable. Otherwise, rational optimization becomes impossible.
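A small sketch shows why a stable cost matters to an automated decision. The function `decide` and its parameters are hypothetical and the numbers are made up for illustration; the assumption is that an agent buys something only when its value exceeds the price plus the network fee.

```python
def decide(value: int, price: int, fee_min: int, fee_max: int) -> str:
    """Classify a purchase given a range of possible fees (illustrative only)."""
    if value > price + fee_max:
        return "buy"        # profitable even at the highest fee
    if value <= price + fee_min:
        return "skip"       # unprofitable even at the lowest fee
    return "uncertain"      # outcome depends on which fee actually applies


stable = decide(value=100, price=90, fee_min=5, fee_max=5)
volatile = decide(value=100, price=90, fee_min=1, fee_max=50)
```

With a fixed fee the range collapses to a single number, so the answer is always "buy" or "skip". With a wide fee range the same purchase lands in "uncertain", and an agent making thousands of such calls must either overpay for safety or accept losses it cannot predict.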

In this sense, Kite treats money as infrastructure, not speculation. Payments are supposed to be boring. They are supposed to work every time, quietly, without drawing attention to themselves. That is what allows automation to scale.

The real shift Kite is preparing for goes beyond agents serving humans. The deeper change is agent-to-agent commerce. One agent will pay another for data. Another will pay for inference. Another will outsource a subtask. These interactions will be invisible to humans but economically real. They will happen constantly, at machine speed, without human mediation. Without proper identity, this devolves into spam and fraud. Without constraints, incentives skew toward abuse. Without predictable settlement, coordination breaks down.

Kite is designed for this invisible economy before it becomes obvious. It assumes that most future transactions will not be watched by people, and therefore must be legible, attributable, and enforceable by machines themselves.

The KITE token follows the same restrained philosophy. Instead of forcing full financialization from day one, utility is introduced in phases. Early on, the focus is participation, experimentation, and ecosystem growth. This attracts builders and users who care about functionality rather than extraction. As the network matures and real usage patterns emerge, staking, governance, and fee mechanics activate around actual economic activity. This sequencing reduces reflexive risk and aligns incentives with long-term reliability instead of short-term hype.

Governance, in this context, is not about speed or noise. It is about defining the boundaries under which autonomous systems are allowed to operate. Poor governance in an agent-driven network does not just cause inefficiency; it causes systemic risk. Kite’s slower, usage-driven approach acknowledges that rules should emerge from reality, not theory.

What makes Kite compelling is not that it promises to replace existing blockchains. It quietly redefines who blockchains are for. Humans are not removed from the system. They are repositioned as authors of intent rather than executors of every action. Machines execute, but only within boundaries humans define. Accountability remains intact even as autonomy scales.

The uncomfortable question Kite forces the market to confront is simple: if autonomous agents become the primary transactors in Web3, do we trust today’s infrastructure to keep humans in control? Or do we need systems built from the ground up to understand delegation, limits, and responsibility at machine speed?

Kite does not promise inevitability. It does not rely on hype cycles or grand narratives. It prepares for a future where software acts economically and demands infrastructure that values clarity, control, and predictability over spectacle. If that future arrives slowly, Kite will look patient. If it arrives suddenly, Kite will look prepared.

In infrastructure, preparation usually matters more than prediction.