OpenLedger is not just another blockchain. It seeks to be the substrate on which a fair, transparent, and efficient AI economy is built — where data, models, and agents are first‐class citizens (i.e. tradable, ownable, auditable). Its mission is to unlock liquidity for those contributions, so everyone who helps build, improve, or serve AI systems is recognized and compensated. It’s about fair attribution, traceability, and built‐in economic incentive, all powered by blockchain.

The Problem Landscape Revisited

To fully appreciate OpenLedger, it helps to map the current deficiencies in the broader AI and machine learning (ML) world, particularly when mixed with expectations from Web3:

1. “Invisible value” of data contributors & annotators

Much of AI’s output depends on data—labeling, cleaning, curation. But once a model is trained, most of the economic surplus goes to model owners or service providers; data contributors seldom receive ongoing rewards, even when their data continues to influence downstream uses.

2. Opacity of model provenance

Which dataset(s), which version/model was used, how was it fine-tuned? These questions are often answered in vague ways, if at all. But for trust, compliance, reproducibility, and fairness, we need transparent lineage.

3. High cost + friction for deploying models & inference

Running inference at scale, hosting models, managing versioning, etc., involves expensive infrastructure and centralized control. These costs limit access to better AI for many developers, researchers, or smaller enterprises.

4. Lack of programmable, on-chain attribution & revenue flows

In many marketplaces, attribution is a manual or off-chain affair, and revenue splits are often arranged via contracts or sales outside the chain. This creates friction, invites disputes, and makes revenue flows hard to trace.

5. Centralization risks

Many AI systems are controlled by large corporations or centralized cloud providers. This places a lot of power (and risk) in their hands: governance, privacy, updates, compliance, etc.

OpenLedger is targeting these pain points by baking in on-chain mechanisms for attribution, reward, and access; by designing cost efficiencies and scalability; and by designing for transparency and decentralization from the ground up.

What OpenLedger Actually Offers — Deeper Dive

Here are the core components, with more detail (and sometimes speculative interpretation) based on published materials and what’s plausible.

1. Datanets & Data Attribution

Datanets are communal, tokenized datasets with full traceability: every data contribution is recorded, provenance preserved, metadata tracked. Contributors to datanets are rewarded via mechanisms like Proof of Attribution.

The attribution mechanism is designed so that when a model is trained or used, there is quantification of which data points had influence on the model’s output. Rather than blunt notions like “dataset X contributed”, it attempts to measure impact. This is critical for fair reward distribution.

Also, through this mechanism, the quality of data matters. It's not enough simply to upload large volumes; what counts is how much effect a piece of data has on the model outputs. This ties rewards to impact.
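To make the "rewards follow impact" idea concrete, here is a minimal sketch of a proportional payout. It assumes an attribution step has already produced one non-negative influence score per contributor; the function name `split_rewards`, the contributor names, and the scores are hypothetical and are not taken from OpenLedger's documentation.

```python
from decimal import Decimal

def split_rewards(pool, influence):
    """Split `pool` among contributors in proportion to their influence scores.

    `influence` maps contributor -> score. Scores of zero or below earn nothing.
    This proportional rule is an illustrative assumption, not the protocol's formula.
    """
    positive = {who: Decimal(str(score)) for who, score in influence.items() if score > 0}
    total = sum(positive.values())
    if total == 0:
        return {who: Decimal(0) for who in influence}
    return {who: pool * positive.get(who, Decimal(0)) / total for who in influence}

# Hypothetical scores: carol uploaded data that had no measurable effect.
scores = {"alice": 0.6, "bob": 0.3, "carol": 0.0, "dave": 0.1}
payouts = split_rewards(Decimal("100"), scores)
```

Under this rule, uploading more data only pays if it raises the contributor's measured influence, which is the behavior the text describes.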

2. ModelFactory, OpenLoRA, Agents, & Inference

ModelFactory is the set of tools (including dashboards, interfaces) to train, fine-tune, deploy, and publish models. It allows AI developers (researchers, enterprises, hobbyists) to plug into datanets, perform compute (off-chain with on-chain anchoring), and publish models with verifiable metrics.

OpenLoRA is a framework that lets many (possibly thousands of) lightweight low-rank adaptation (LoRA) models share GPU resources efficiently. Instead of giving every model its own isolated GPU allocation, it aims to reduce cost, increase throughput, and make projects more accessible. This is especially helpful for smaller model variants, domain-specific fine-tuning, or experimentation.
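The reason many LoRA models can share hardware is that each one is only a small low-rank correction on top of the same base weights. The sketch below shows the standard LoRA forward pass, y = W x + B (A x), in plain Python with tiny matrices. It illustrates the general LoRA idea only; it is not OpenLoRA's serving code, and the adapter names and values are made up.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def lora_forward(base_w, adapter, x):
    """Compute y = W x + B (A x). W is shared; only A and B belong to the adapter."""
    a, b = adapter
    base_out = matvec(base_w, x)
    delta = matvec(b, matvec(a, x))
    return [p + q for p, q in zip(base_out, delta)]

# One shared 3x3 base matrix (9 parameters), loaded once.
BASE_W = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Hypothetical rank-1 adapters: A is 1x3 and B is 3x1 (6 parameters each).
ADAPTERS = {
    "legal": ([[1, 1, 0]], [[1], [0], [0]]),
    "medical": ([[0, 0, 2]], [[0], [0], [1]]),
}

x = [1, 2, 3]
legal_out = lora_forward(BASE_W, ADAPTERS["legal"], x)      # [4, 2, 3]
medical_out = lora_forward(BASE_W, ADAPTERS["medical"], x)  # [1, 2, 9]
```

For a d x d layer, the base holds d*d values while a rank-r adapter holds only 2*r*d, so the gap widens quickly as d grows and r stays small.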

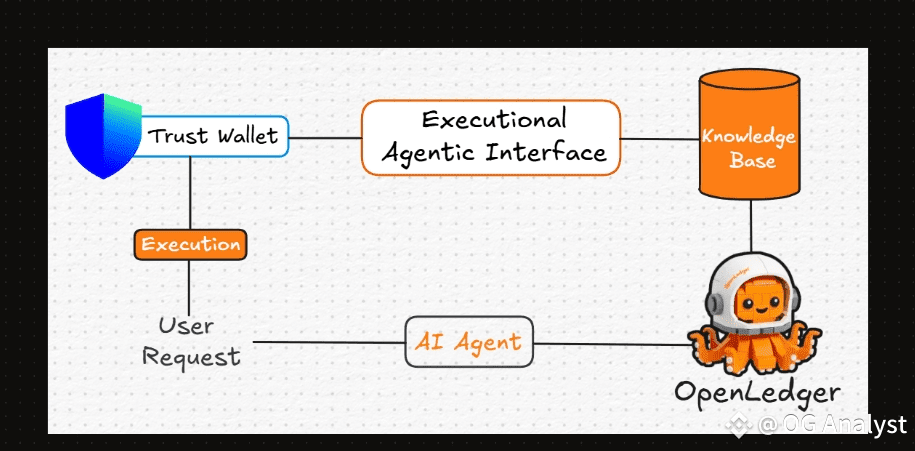

AI Agents & Inference Markets: Once models are live, they may be called upon by users or other agents. OpenLedger has – or plans to have – mechanisms to charge for inference, split revenue among model owners, data providers, and infrastructure (nodes, validators). The attribution engine will help determine which data / which contributors benefit when inference occurs.
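A fee split like the one described can be sketched with integer arithmetic in the token's smallest unit, which avoids floating-point rounding drift. The percentages below (50/30/20) and the rule that sends rounding dust to the first party are assumptions chosen for illustration; the source does not publish actual split ratios.

```python
# Hypothetical split in basis points (1 bps = 0.01%); must total 10,000.
SPLIT_BPS = {"model_owner": 5000, "data_contributors": 3000, "infrastructure": 2000}

def split_fee(fee_units, split_bps=SPLIT_BPS):
    """Divide an inference fee (in smallest token units) among the parties."""
    if sum(split_bps.values()) != 10_000:
        raise ValueError("split must sum to 10,000 basis points")
    shares = {party: fee_units * bps // 10_000 for party, bps in split_bps.items()}
    # Integer division can leave a few units over; assign them to the first party.
    remainder = fee_units - sum(shares.values())
    first_party = next(iter(shares))
    shares[first_party] += remainder
    return shares

shares = split_fee(1_001)
```

The data contributors' share would then be divided further among individual contributors according to their attribution scores.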

EVM Compatibility + Layer-2 / Rollup architecture: OpenLedger is built as an OP Stack (Optimism’s technology stack) rollup with data availability support from EigenDA. This means relatively low gas costs, high throughput, and compatibility with existing Ethereum wallets and tooling. The OPEN token starts on Ethereum (as an ERC-20) and is then bridged to and used on the native chain.

3. Tokenomics & Economic Structure

Here are specifics with deeper clarity from the tokenomics documents:

| Aspect | Detail / Values |
| --- | --- |
| Total supply | 1,000,000,000 OPEN tokens |
| Initial circulating supply at TGE | Approximately 21.55% (≈ 215.5 million OPEN) |
| Primary allocations | Community & Ecosystem: 61.71% (meant for data contributors, model developers, agent builders, tooling, public goods); Team: 15% (with vesting); Investors: ~18.29%; Liquidity: ~5%. Some counts also list an “ecosystem” portion separate from “community + ecosystem”; definitions may vary. |
| Unlock / vesting schedule | At TGE: ≈ 215.5 million OPEN unlocked, covering allocations for liquidity, community rewards, and ecosystem bootstrapping. Community & ecosystem portion: unlocks linearly over 48 months. Team & investor allocations: 12-month cliff + 36 months linear vesting. |
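The schedule figures can be checked with a little arithmetic. The sketch below computes the TGE circulating amount and models the team allocation under a 12-month cliff followed by 36 months of linear vesting. It assumes nothing unlocks until the cliff has passed and that unlocks accrue in whole months; the source does not spell out those details, and the function names are hypothetical.

```python
TOTAL_SUPPLY = 1_000_000_000  # OPEN

def cliff_then_linear(allocation, month, cliff=12, vesting=36):
    """Tokens unlocked `month` months after TGE.

    Assumption: zero unlocked through the end of the cliff, then equal
    monthly unlocks until fully vested at cliff + vesting months.
    """
    if month <= cliff:
        return 0
    vested_months = min(month - cliff, vesting)
    return allocation * vested_months // vesting

def linear(allocation, month, duration=48):
    """Tokens unlocked under a plain linear schedule over `duration` months."""
    return allocation * min(max(month, 0), duration) // duration

tge_circulating = TOTAL_SUPPLY * 2155 // 10_000    # 21.55% of supply
team_allocation = TOTAL_SUPPLY * 15 // 100         # 15% of supply
team_at_month_30 = cliff_then_linear(team_allocation, 30)
```

With these assumptions, the team allocation is half unlocked at month 30 and fully unlocked at month 48.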

These numbers suggest a commitment to long-term alignment: they avoid large early unlocks for insiders and direct most rewards to network users and contributors.

4. Utility & Governance of OPEN

OPEN is more than “gas + rewards.” Its functions include:

Gas / Transaction Fee Token: It’s used for nearly all interactions: dataset registration, model publication, inference payments, validator operations, etc. Without OPEN, none of the economic activity can settle on-chain.

Attribution Rewards: Proof of Attribution (PoA) is designed to reward contributors whose data or work influences model behavior, not just during model training but also during inference usage.

Governance: OPEN holders vote on protocol upgrades, treasury decisions, model funding, etc. Some documentation suggests a governance approach inspired by other token-governed networks like Arbitrum.

Staking + Agent Performance Accountability: Agents providing services may need to stake OPEN; failure to deliver or misbehavior can result in slashing or reputational penalties. This helps align quality of service.
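The staking and slashing idea can be sketched as a small piece of bookkeeping. Everything specific here is a hypothetical illustration: the `AgentStake` class, the 5% slash, and the fixed 10-point reputation penalty are not parameters published by OpenLedger.

```python
from dataclasses import dataclass

@dataclass
class AgentStake:
    """Tracks an agent's bonded OPEN (in smallest units) and a simple reputation score."""
    agent_id: str
    staked: int
    reputation: int = 100

    def slash(self, fraction_bps):
        """Burn a fraction of the stake (in basis points) and dock reputation."""
        if not 0 <= fraction_bps <= 10_000:
            raise ValueError("fraction_bps must be between 0 and 10,000")
        penalty = self.staked * fraction_bps // 10_000
        self.staked -= penalty
        self.reputation = max(0, self.reputation - 10)  # assumed flat penalty
        return penalty

agent = AgentStake("summarizer-01", staked=1_000)
penalty = agent.slash(500)  # hypothetical 5% slash for a missed deliverable
```

The design point is that misbehavior costs the agent something it has already committed, so service quality is backed by more than a promise.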

---

Key Strategic Moves & Partnerships

These are concrete steps OpenLedger has taken to strengthen its technical security, ecosystem growth, and market positioning.

1. Partnership with Ether.fi restaking infrastructure (~US$6B TVL)

By leveraging restaking from established infrastructure (Ether.fi), OpenLedger gets enhanced security and scalability. Restaking mechanisms allow for better validator economics and shared security.

2. OpenCircle Launchpad & Funding of AI/Web3 Startups

OpenLedger has committed US$25 million via its “OpenCircle” launchpad for developers in AI and Web3. This funding aims to accelerate projects that build on top of OpenLedger’s primitives — datasets, agents, tools. This both builds the ecosystem and broadens adoption.

3. Public-facing docs, incentive programs, testnet phases

OpenLedger is running testnets, airdrops (or similar early user incentives), community programs to reward data/model contributions, and making documentation and SDKs available. These are all crucial for developer adoption.

Comparative Positioning: How OpenLedger Stacks Up

When evaluating OpenLedger’s place in the fast-evolving landscape of AI and blockchain, it’s useful to examine how it compares to other players that aim to merge these two powerful technologies. OpenLedger doesn’t just position itself as another smart contract platform that can host AI applications; it is designed from the ground up to support AI participation. This foundational difference gives it a sharper focus and a clearer value proposition than many of its competitors.

While several general-purpose blockchains such as Ethereum or Solana can technically accommodate AI projects, they lack native primitives for AI-specific needs like model attribution, dataset monetization, and inference metering. Builders on those platforms often need to create custom solutions for tracking dataset provenance, distributing royalties, or ensuring fair economic participation. OpenLedger removes that burden by embedding these mechanisms directly into its protocol, making it a specialized environment rather than a generic one-size-fits-all chain.

Projects like Ocean Protocol, Fetch.ai, and SingularityNET have pioneered decentralized data exchanges or AI agent networks. Yet OpenLedger distinguishes itself through its full-stack approach to on-chain AI economics. Instead of focusing only on data sharing (like Ocean) or autonomous agents (like Fetch), OpenLedger offers a holistic model that covers the entire AI lifecycle—from data contribution to model training, deployment, and inference billing. This creates a vertically integrated ecosystem where every contributor, from data curators to model developers and inference providers, can be compensated with precision.

Another key differentiator lies in Ethereum compatibility. Unlike some AI-focused chains that operate on proprietary frameworks, OpenLedger adheres to widely accepted Ethereum standards for wallets, smart contracts, and Layer-2 integrations. This means developers and users can connect with existing EVM tools, wallets, and liquidity pools without friction. By reducing onboarding barriers, OpenLedger effectively positions itself as a bridge between the booming Ethereum DeFi ecosystem and the emerging on-chain AI economy.

Performance and scalability also influence its comparative strength. Some AI-blockchain hybrids struggle with high transaction costs or limited throughput, creating bottlenecks for high-volume data and inference calls. OpenLedger’s architecture balances off-chain compute with on-chain verification, ensuring that heavy AI workloads can be executed efficiently while preserving the trust guarantees of blockchain. This hybrid model gives it a practical advantage over chains that attempt to force all compute on-chain, which can quickly become cost-prohibitive.

Finally, OpenLedger’s economic incentives offer a compelling draw for contributors. By implementing proof-of-attribution mechanisms and transparent royalty flows, it ensures that those who provide valuable data or models receive ongoing rewards whenever their assets are used. Many competing platforms still rely on more centralized or ad hoc revenue-sharing models, which can be opaque or biased toward early insiders. OpenLedger’s protocol-level reward system provides the transparency and fairness that builders and investors increasingly demand.

In summary, OpenLedger stands out in a crowded field by focusing on AI-native functionality, Ethereum interoperability, and carefully designed economic incentives. It doesn’t try to be everything to everyone, but instead concentrates on becoming the definitive platform for monetizing data, models, and AI agents. This strategic clarity gives it a strong comparative position and makes it a serious contender for developers and enterprises looking to participate in the decentralized AI economy.

Risks, Trade-Offs, and What’s Not Promised

Even with a strong ethos and architecture, OpenLedger has hard challenges. Understanding them is essential for realistic evaluation.

1. Privacy, Compliance and Regulations

Handling data (especially sensitive data: health, financial, biometric) introduces privacy liability. GDPR, HIPAA, and other laws require careful handling. On-chain transparency can conflict with privacy, so OpenLedger will need privacy primitives, consent mechanisms, and possibly zero-knowledge proofs or TEEs to manage this.

2. Quality vs Quantity of Data

If rewards are allocated simply by “impact,” bad actors might try to game metrics. Data might be noisy, mislabeled, or irrelevant but still get rewarded if the attribution mechanism isn’t robust. Ensuring data quality, careful valuation, and maybe curation is essential.

3. Scalability of Attribution Engine

Measuring how each data point influences model behaviors is computationally expensive (especially for large models). Doing so in a way that is reliable (statistically sound) and doesn’t cost more than the reward is challenging.
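A leave-one-out experiment shows where the cost comes from: measuring each point's influence exactly means retraining once per point. The toy below uses a deliberately trivial "model" (the mean of the training labels) so that it runs instantly. It is a generic illustration of leave-one-out influence, not OpenLedger's attribution method, and all names and values are made up.

```python
def train(labels):
    """Toy model: predict the mean of the training labels."""
    return sum(labels) / len(labels)

def loss(model, validation):
    """Mean squared error of the constant prediction on validation targets."""
    return sum((model - v) ** 2 for v in validation) / len(validation)

def leave_one_out_influence(train_labels, validation):
    """Influence of point i = loss without i minus loss with all points.

    Positive means removing the point hurts (it was helpful);
    negative means removing it helps (it was harmful).
    Requires len(train_labels) + 1 training runs.
    """
    base = loss(train(train_labels), validation)
    influence = []
    for i in range(len(train_labels)):
        reduced = train_labels[:i] + train_labels[i + 1:]
        influence.append(loss(train(reduced), validation) - base)
    return influence

train_labels = [1.0, 1.0, 1.0, 5.0]  # the last point is an outlier
validation = [1.0]
influence = leave_one_out_influence(train_labels, validation)
```

Here four data points need five training runs. For a large model with millions of data points, exact leave-one-out is out of reach, which is why practical systems rely on approximations and why the cost of attribution has to be weighed against the rewards it allocates.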

4. Tokenomics & Inflation Pressure

While a large portion of tokens goes to community rewards and ecosystem, that implies potential dilution over time as more tokens unlock. OPEN’s market value will depend on usage demand exceeding the rate of token supply and unlocking. If usage lags, downward pressure on price is likely.

5. Adoption & Liquidity

Even the best infrastructure is useless if there are no quality models, no meaningful datasets, and no users making inference calls. Bootstrapping that liquidity (both in terms of content and demand) is a non-trivial network effect. OpenLedger’s $25M fund helps, but adoption risk remains.

6. Competition & Switching Costs

Centralized AI providers continue to improve, offering plug-and-play models, huge datasets, and massive support. Developers already invested in their ecosystems may find switching costly. OpenLedger must offer real advantages in cost, flexibility, or economics to persuade adoption.

7. User / Developer Experience

Even if everything is great under the hood, friction (wallets, bridging, gas costs, latency, model performance, reliability) will make or break adoption. Success depends heavily on how smooth and predictable OpenLedger feels for real users.

Expanded Future Opportunities: What OpenLedger Could Enable

Here are more speculative but plausible forward trajectories that build on what is already public, adding creative possibilities.

1. Vertical-domain Datanets & Specialized AI Services

Beyond generic datasets, we’ll see datanets built for specific domains: legal text, medical imaging, agricultural sensors, climate, geospatial data, etc. These vertical datanets could power domain experts or startups with high-value use cases. Because domain-specific data is often scarce, the opportunity for good attribution and high rewards is greater.

2. Hybrid Public-Private / Confidential Datanets

For domains sensitive to privacy (healthcare, finance), data could be contributed with encryption, secure enclaves, or via federated learning. OpenLedger might enable private datanets (visible metadata & attribution but not raw data) or zero-knowledge proofs of performance. This would allow compliance while maintaining reward flows.

3. Composable Agents & Model Alliances

Models and agents may be chained or composed: e.g., an agent that summarizes documents, then another that analyses sentiment, then another that aggregates results. OpenLedger infrastructure could allow a marketplace of interoperating agents, with revenue flows automatically split among component model owners and data contributors.
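A chained pipeline with per-stage billing might look like the sketch below. The two stage functions are trivial stand-ins for real models, and the owner names and per-call fees are hypothetical; the point is only that each stage's output feeds the next while a ledger records what each owner is owed.

```python
def summarize(text):
    """Stand-in summarizer: keep the first sentence."""
    return text.split(".")[0]

def sentiment(text):
    """Stand-in sentiment model: keyword check."""
    return "positive" if "good" in text.lower() else "neutral"

# (owner, stage function, fee per call in smallest token units), all hypothetical.
PIPELINE = [
    ("summarizer_owner", summarize, 3),
    ("sentiment_owner", sentiment, 2),
]

def run_pipeline(payload, pipeline):
    """Run stages in order and tally the fee owed to each stage owner."""
    owed = {}
    for owner, stage, fee in pipeline:
        payload = stage(payload)
        owed[owner] = owed.get(owner, 0) + fee
    return payload, owed

result, owed = run_pipeline("The results look good. More details follow.", PIPELINE)
```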

4. Model Versions & Lifecycles as Tradable Assets

Versioned models could be tokenized, allowing trading. If someone builds a better version, earlier version owners (contributors) may get royalties. Model markets could emerge where people back certain models/agents, invest in them (staking or governance), and share in returns.

5. Edge & On-device Inference with Micro-Payments

For devices like smartphones or IoT, inference could be performed locally (for speed or privacy) with micropayment systems (token rewards to model owners / data providers) for usage. This could unlock many applications: personal assistants, smart home, wearables.

6. Interoperability & Cross-chain Data Portals

As more blockchains try to integrate AI or data services, bridging data and model markets across chains could be valuable. OpenLedger might become a hub or standard for cross-chain model/dataset attribution.

7. Governed Public Goods & Research Grants

Using OpenLedger’s token and governance, funds could be allocated for public good: e.g., climate models, open science, open educational resources. Research datasets could be built and maintained via on-chain funding with transparent usage and reward.

8. AI Safety, Auditing, and Verifiable Models

As demand from regulators and the public increases for safe, robust, unbiased AI, OpenLedger could support tools for third-party auditing, bias detection, fairness metrics, and verifiable checkpoints. Because the chain tracks lineage and data impact, such audits may become more feasible.

What Would Success Look Like — Metrics & Signals

For someone evaluating OpenLedger or considering building on it, here are concrete signals that suggest it’s on track:

Active Datanets with High-Quality Data: Not just many datanets, but ones with meaningful, cleaned, curated data, domain-specialized, that model developers want to use.

Growing Model Library + Real Inference Traffic: Models live, in use, being called frequently. Inference payments flowing; users satisfied with performance and cost.

Strong Analytics & Attribution Reports: Proving that attribution mechanisms are fair, reliable, low overhead. Reports or dashboards showing data contributor rewards over time.

Stable, Increasing Token Velocity & Utility: OPEN used for many things: gas, inference, staking, governance, etc. Demand for OPEN grows faster than inflation/unlocking, or at least enough to maintain value.

Developer Adoption & Tooling Ecosystem: Good SDKs, APIs; ease of integrating with existing ML pipelines; cross-chain bridges; friendly UX; community tools.

Partnerships & Vertical Use Cases: Real world pilots (healthcare, finance, supply chain, climate, media) that show impact. Institutions trusting OpenLedger for regulated data.

Security, Audits, and Privacy Compliance: Clear audit reports; regulatory clarity; inclusion of privacy features; minimal incidents.

Recommendations & Considerations for Builders / Contributors

If you are a researcher, developer, data provider, or investor thinking about participating in OpenLedger, here is what to consider to extract maximum value and reduce risk:

1. Participate early but understand the vesting

As many tokens are locked or released gradually, early involvement is good, but be aware of unlock schedules and token dilution. If you contribute early, ensure your incentives align with long-term rewards.

2. Focus on data quality & alignment

Since attribution is based on impact, invest effort in producing clean, well-curated, relevant datasets. Label well, document thoroughly. High quality is likely to get higher reward per unit of effort.

3. Monitor model design & efficiency

Use efficient modelling approaches (e.g., Low-Rank Adaptation, or modular architectures) to reduce inference and training costs. Efficiency is rewarded (both in cost savings and likely in throughput).

4. Engage governance and community

Owners of OPEN have power. Involvement gives you influence over protocol parameters: attribution weightings, infrastructure design, fees, etc. Getting involved with governance may help shape favorable conditions.

5. Use or build tools that make attribution transparent

If you develop models, build with transparency: publish checkpoints, metrics, evaluation datasets; design attribution experiments (e.g., ablation, impact measurement) so contributors can see how reward flows are determined.

6. Watch for privacy / compliance constraints

If you deal in sensitive data, or in regulated sectors, check whether functionalities exist (or are planned) for privacy. If not, you may need to plan hybrid or off-chain supplemented architectures.

7. Bridge strategically, but mind gas costs and UX

Since OPEN is initially an ERC-20 on Ethereum and then bridged, consider costs, delays, and security of bridges. If your application is sensitive to latency or transaction cost, examine whether running on the native chain, or optimizing batching, helps.

Potential Challenges That Could Derail Progress — What to Watch Out For

Beyond the earlier risks, here are specific pain points or failure modes that could challenge OpenLedger’s promise.

Attribution gaming: If attribution metrics are poorly designed, individuals might spam low-quality data or contrive models to maximize attribution without real value. Detecting and discouraging such behavior will be essential.

Compute costs vs reward mismatch: Training large models or running frequent inference costs money. If OPEN or reward schedules don’t keep up, contributors or model owners may find costs exceed rewards.

Ecosystem fragmentation: If data, models, or agents don’t interoperate or are locked into different silos, or if crediting / reward differs across components, the ecosystem may feel patchy and inconsistent.

User behavior & UX friction: High latency, unexpected fees, and complicated workflows (wallet setups, staking, gas) could reduce adoption. The promise of a seamless experience has to be delivered in practice.

Regulatory pushback: Data monetization has legal implications in many jurisdictions. Licenses, privacy protection, IP law, data export laws all may complicate building datanets in certain sectors or geographies.

Token price volatility: Tokens subject to speculative trading could see volatility that harms participants. Data contributors may be discouraged if token rewards fluctuate wildly.

Looking Ahead: Vision & Speculative Futures (2026–2030)

What might OpenLedger look like in 3-5 years if it achieves high adoption and delivers on its promises? Here are some possible future states:

OpenLedger as the default marketplace for domain models

A world where specialized models (for law, medicine, agriculture, manufacturing) are published, discovered, licensed, and invoked through OpenLedger. Model “app stores” emerge around verticals, all backed by attribution and rewards.

Cross-chain, multi-chain AI ecosystems

OpenLedger bridges with other chains; data and models migrate across chains; models hosted on OpenLedger feed into dApps on other chains; interoperable agents.

AI “task-oriented agents” as utilities for communities

Agents that represent groups (e.g. environmental orgs, science consortia, municipalities) which run inference models, manage data, produce reports, serve citizens. These agents stake OPEN, are governed, and produce public value.

AI audit and assurance industry

Because attribution and provenance are transparent, an industry of audits, certifications, fairness / bias checking, security audits will emerge. Model owners could offer “certified lineage” or “bias-checked” variants.

Personal AI agents tied to your identity & contributions

Suppose you contribute data, annotations, or models; over time you build reputation and measurable impact, and possibly stake your contributions. Your personal agent(s) may hold a portfolio of models and data, receive revenue, and upgrade based on your preferences. Such agents might even be tradable.

OpenLedger inside regulated spaces

Governments, regulators, or standard bodies may accept tools built on OpenLedger for regulatory compliance (e.g. medicine, finance, privacy). Perhaps signatures, data provenance, or model checkpoints on chain become part of audited regulatory filings.

---

Extra Value: Use Cases In Depth

Let’s explore two fleshed-out hypothetical case studies, with some creative but plausible technical and economic detail.

Case Study A: OpenHealth Datanet & Medical Imaging Models

Scenario: Hospitals, diagnostic labs, and research centers collaborate to create an “OpenHealth Imaging” datanet: annotated MRI, CT, and X-ray datasets (with privacy preserved via de-identification, consent, and possibly secure enclaves). Contributors (hospitals and clinicians) upload metadata and anonymized data, and their contributions are recorded on-chain with attribution rights.

Model Lifecycle:

1. A researcher builds a diagnostic model (say for early detection of a lung lesion). They fine‐tune on OpenHealth Imaging, register the model, anchor checkpoints, evaluations.

2. On every inference (i.e. when a doctor or clinic uses the model for diagnosis), the model is called via OpenLedger agents. The fees paid in OPEN are split: a portion to the model developer, a portion to data contributors whose data had high attribution influence, and a portion to validators/nodes/infrastructure.

Privacy / compliance:

Use differential privacy and federated training among institutions. Only hashed metadata and attribution go on chain; raw data stays in secure controlled environments.

Audits ensure fairness (e.g. model tested across demographics). Stakeholders vote on standards in governance proposals.
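The "only hashed metadata on chain" step can be sketched with the standard library. The record fields below are hypothetical, and canonical JSON plus SHA-256 is an assumed encoding choice rather than anything OpenLedger specifies.

```python
import hashlib
import json

def metadata_commitment(metadata):
    """Return a hex SHA-256 digest of a metadata record.

    Keys are sorted and whitespace removed so the same record always
    hashes to the same value regardless of field order.
    """
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()

# Hypothetical record kept off-chain by the contributing hospital.
record = {"modality": "CT", "site": "hospital-a", "scan_count": 120}
commitment = metadata_commitment(record)  # only this digest would be published
```

Anyone holding the original record can later recompute the digest and prove it matches what was anchored. Note that a bare hash of low-entropy fields can be guessed by brute force, so a real deployment would likely add a secret salt or use a stronger commitment scheme.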

Economic Outcome:

Clinical centers get recurring income for data contributions.

Researchers can sustain funding not just via grants but via model usage.

Clinics/users get access to certified, transparent diagnostic tools.

Case Study B: Media & Content Creation Agents

Scenario: Content creators contribute creative assets (images, text, music samples) to media datanets. Agents trained on those datanets (e.g., text summarizers, image generators) are used by apps (e.g. design tools, content platforms).

Model & Agent Marketplace:

A creator releases a “Vintage Photo Style” image generator model.

App developers integrate it via an on-chain API; end-users pay per generation or via subscription.

Attribution engine identifies which creators’ assets most influence model look/style; revenue flows accordingly.

Utility for Creators:

Micro-payments for content use.

Transparent usage logs (who used models, for what — helpful for licensing, credit).

Potential to build reputation and followership: creators with frequently used assets gain status, which might translate to more lucrative opportunities.

Final Reflections: Where OpenLedger Could Make the Biggest Impact

Here are some distilled thoughts about where OpenLedger’s efforts are most likely to shift landscapes:

Shifting the Data Value Chain: Data is increasingly recognized as central to AI. But historically, value accrues largely to those who train, host, or productize models. OpenLedger could open up new value flows back to data originators — rewarding upstream labor that traditionally gets overlooked.

Moving AI toward Transparency and Accountability: Particularly as AI becomes more integrated into regulated domains (healthcare, law, finance), demands for explicability, traceability, auditability will increase. OpenLedger’s chain of provenance and attribution gives a structural foundation for meeting those demands.

Lowering Barriers for Smaller Contributors: Small researchers, individuals, and specialists (e.g. rural domain experts, indigenous language speakers) could participate in AI’s value chain with less overhead. If inference costs and model deployment costs are kept low, their contributions could be viable income streams.

Enabling a More Sustainable AI Ecosystem: At present, many models are trained in opaque, repeatedly expensive ways with duplicated effort. An ecosystem where datanets and models are shared, reused, versioned, and composable could reduce wasted compute and accelerate innovation.