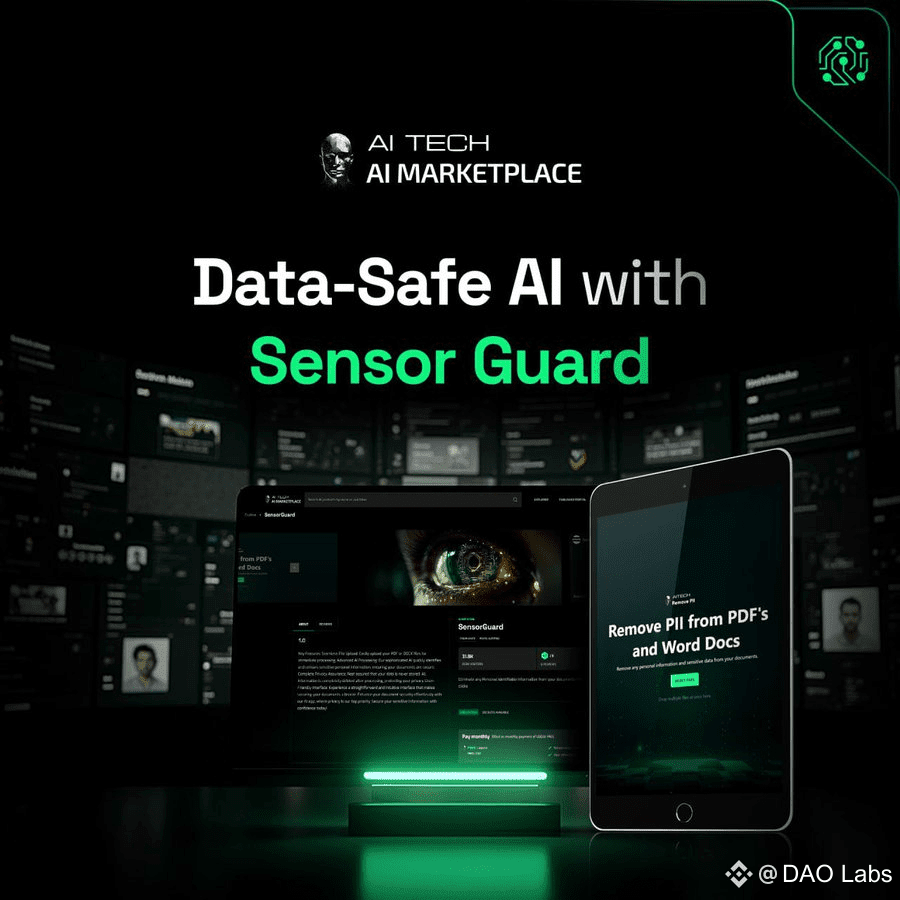

The $AITECH network, steered by @AITECH, has added a critical layer to its infrastructure: Sensor Guard. This addition has drawn focused interest from #SocialMining participants at @DAO Labs, who recognize its significance in elevating decentralized AI from experimental to institutional-grade.

Sensor Guard doesn’t aim to outsmart AI; it aims to guide it. By proactively identifying and redacting sensitive information, it offers a foundational step toward building AI systems that aren’t just powerful but also explainable and compliant. At a time when LLMs and agents are often deployed in loosely controlled environments, this tool gives developers and auditors a baseline of trust.
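To make "identifying and redacting sensitive information" concrete, here is a minimal Python sketch of the general idea. It is not Sensor Guard's actual implementation: the `redact` function, the `PATTERNS` table, and the two regular expressions are hypothetical and chosen only for illustration. A production system would likely use far more robust detection.

```python
import re

# Hypothetical rules for illustration only; not Sensor Guard's real rule set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text):
    """Replace each match with a [LABEL] placeholder.

    Returns the cleaned text plus the list of labels that fired, so an
    auditor can see what was removed without seeing the raw values.
    """
    findings = []
    for label, pattern in PATTERNS.items():
        def mask(match, label=label):
            findings.append(label)
            return f"[{label}]"
        text = pattern.sub(mask, text)
    return text, findings


clean, found = redact("Contact alice@example.com, card 4111 1111 1111 1111.")
print(clean)  # Contact [EMAIL], card [CARD].
print(found)  # ['EMAIL', 'CARD']
```

Running the filter before a prompt reaches a model, and logging only the labels, is one simple way such a layer could give auditors a record of what was withheld.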

Its relevance to the Social Mining narrative is also worth noting. Sensor Guard sets the stage for community discussions around how governance, privacy, and fallback mechanisms should be standardized across emerging AI protocols. Such mechanisms are not just add-ons; they are trust builders.

From the #SolidusHub perspective, this move shifts the dialogue toward system-level assurance. Instead of dwelling purely on throughput or speed, attention turns to integrity, a trait increasingly valued by institutions seeking dependable AI systems.

Sensor Guard won’t headline a bull market, but it may quietly define the standards that next-generation AI networks are measured by. As DAO Labs contributors track these shifts, the integration of safety-by-design becomes a key marker of long-term viability.