Author: Janelle Teng. Translated by Cointime: QDD.

While a few closed-source foundation model companies have captured the majority of venture capital investment in the GenAI category, more open source voices are entering the ecosystem and could shake up the current paradigm.

A few weeks ago, I wrote about how the AI model layer has become one of the most strategic and competitive layers in the modern software technology stack. Currently, the three most valuable GenAI startups are all closed-source companies backed by deep-pocketed financial investors and strong strategic partners.

1. Cohere officially announced its $270 million Series C funding round yesterday, led by Inovia Capital, at a valuation of approximately $2.1 billion to $2.2 billion. The company now counts NVIDIA and Oracle among its backers.

2. Anthropic announced a $450 million Series C funding round a few weeks ago, led by Spark Capital, at a valuation of about $5 billion. Google has invested $300 million in the company.

3. OpenAI announced it had raised $10 billion in funding from Microsoft. The company was reportedly valued at about $29 billion in an April share sale that included participation from multiple venture capital firms.

The fundraising activity of these well-known foundation model companies is remarkable, especially amid a broader slowdown in VC activity: $100 million+ rounds have become increasingly rare since the market downturn, which makes these large round sizes all the more impressive.

Source: Carta, https://carta.com/blog/vc-megadeals-2022/

The boundless confidence that underpins these closed-source investments is not universally shared in the VC industry. Earlier this week, TechCrunch asked Miles Grimshaw of Benchmark why his firm did not invest in foundation model companies when it had the opportunity, and whether the large amounts of money these companies had raised were a factor. Grimshaw responded:

“We don’t have the confidence to imagine that any of these companies could have a lasting outsized market share. I think you can see open source emerging and catching up very quickly right now. Over time, you can imagine that the inputs to these large language models will reduce in cost, both in terms of the amount of compute available on a chip and the cost of any chip. The knowledge is clearly diffusing, and more and more people know how to do it without spending a lot of money trying to figure out how to do it. You can even see the rate of depreciation of something like the OpenAI models. Think about how quickly they made all the spending on GPT-2 or GPT-3 obsolete.”

The competitive dynamics at the model layer are changing. Grimshaw’s response prompted me to dig deeper into the current GenAI funding paradigm and into how open source momentum during “AI’s Linux moment” might reshape it.

In the GenAI Gold Rush, Where Is the Money Going?

We are currently in the AI Hype Cycle (pictured above), so it should come as no surprise that venture capital is pouring into this space. In fact, Morgan Stanley found that as of April 2023, more than $12 billion in funding had flowed into the GenAI category globally. Here is how that funding has grown since 2022:

As Amara’s Law states: “We tend to overestimate the impact of technology in the short term and underestimate its impact in the long term.” Even with such huge amounts of money already flowing into this category, AI as a technology paradigm is still in the early stages of its S-curve, and much remains unproven. So where is the money going? According to Morgan Stanley’s findings, about 80% of GenAI investment went to model-layer companies. I ran a similar analysis on the raw data from NFX’s GenAI Market Map spreadsheet and found that, after filtering out companies founded before 2013, about 70% of investment went to model-platform or MLOps companies.

This focus on the model layer makes sense: we are in the early stages of GenAI adoption, and the model layer is still evolving, with some emerging leaders but no clear winners. Like cloud infrastructure providers, the model layer forms the foundation on which the other layers of the technology stack are built, and we can expect more downstream applications to spawn as it matures. Additionally, training and developing LLMs tends to be far more expensive than building applications, so model-layer companies have higher capital requirements and tend to raise larger rounds.

Source: The Economist, https://www.linkedin.com/feed/update/urn:li:activity:7036730584655695872/

But it’s important to note that even within the model layer, the “hottest” funding stream, a Pareto pattern seems to be emerging: the vast majority of investment dollars in this layer are going to a select few closed-source model companies. In particular, as mentioned earlier, OpenAI’s stunning $10 billion funding announcement dwarfed both the typical VC round in 2023 (about 70% of all VC rounds raised in Q1 2023 were $10 million or less) and other large model-layer funding rounds. As shown in the chart above, OpenAI’s funding accounted for nearly 50% of the total cumulative funding raised by GenAI startups.

The David and Goliath battle between open and closed source models

Given the massive investment going into the model layer, is the race to dominate this technology stack limited to these well-funded closed-source model vendors? Not really.

As I’ve pointed out before, many other players, including large tech companies, non-AI-native startups, and academic institutions, have stirred up the ecosystem, resulting in a long list of open source models entering the fray. When I say long, I really mean long… just check out this open source LLM database (thanks to Sung Kim for maintaining it). Many of these models didn’t exist just six months ago, but now, thanks to the chain reaction that has been set off, up to three open source models are released every day!

Source: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

Chris Ré of Stanford University likens this open source momentum to AI’s Linux moment (referring to the movement toward open source operating systems as alternatives to Microsoft’s closed platform Windows): “AI has a strong open source tradition since the beginning of the deep learning era. What I mean by the Linux moment is that we may be at the beginning of an era of open source models and important open source efforts building significant, lasting, and widely used models. Many of the most important datasets (e.g., LAION-5B) and model efforts (e.g., Stable Diffusion from Stability and Runway, GPT-J from EleutherAI) were done in an open source fashion by smaller, independent players. This year we’ve seen these efforts inspire a lot of further development and community excitement.”

Everyone in the ecosystem is watching and reassessing their defensibility as open source LLMs prove themselves to be credible competitors to their better-funded closed-source counterparts.

In the viral leaked memo “We Have No Moat, And Neither Does OpenAI,” a Google researcher claimed that open source players are rapidly closing the quality gap and are already beating proprietary closed-source models on dimensions such as training speed and cost: “While our models still have a slight edge in quality, the gap is closing incredibly fast. Open source models are faster, more customizable, more private, and pound-for-pound more capable. They do with $100 and 13B params what we struggled to do with $10M and 540B. And they do it in weeks, not months. This has profound implications for us.”

The memo cites Vicuna-13B as an example: an open source chatbot trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT. Built in a fraction of the time, Vicuna-13B outperformed its predecessors LLaMA and Stanford Alpaca and, in a preliminary evaluation that used GPT-4 as the judge, achieved more than 90% of the quality of OpenAI’s ChatGPT and Google’s Bard (below). It’s particularly noteworthy that Vicuna-13B cost about $300 to train, whereas training some closed-source LLMs is estimated to cost millions of dollars.

Source: SemiAnalysis, https://www.semianalysis.com/p/google-we-have-no-moat-and-neither

But the future is far from certain. Some believe that a “Linux moment” may not be enough for open source models to win out over their closed-source competitors, and that it might take an “Apache moment” to tip the scales in their favor.

AI's Linux moment adds fuel to the fire

Will the best-funded players win? Will there be a few winners? Will the market structure remain fragmented in the long run? There is never a dull moment in GenAI, because the pace of change in this space is unprecedented. The recent boom in open source players has intensified the already fierce competition at the model layer, but I believe this dynamism is important because it can promote innovation, democratize access to AI resources, and allow downstream companies to build more products and applications on top of the models.

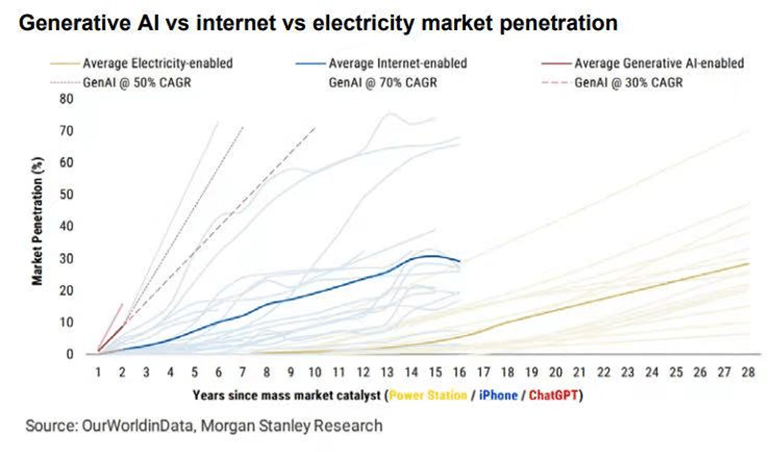

Source: Morgan Stanley

As Marc Andreessen recently wrote: “AI may be the most important and best thing our civilization has ever created, at least as important as electricity and the microchip, and possibly more so.” So I end this post with the above picture as a backdrop, to highlight the profound moment we are living through and the unprecedented speed of adoption being spurred by all the fuel poured into this space!

This article and the information provided are for informational purposes only. The opinions expressed are those of the author alone and do not constitute an offer to sell or buy, or a recommendation of, any investment product or service. While certain information contained in this article is derived from sources believed to be reliable, this information has not been independently verified by the author or the author’s employer or affiliates, and its accuracy and completeness cannot be guaranteed. Accordingly, no representation or warranty of any kind is made as to the fairness, accuracy, timeliness, or completeness of this information, and no reliance should be placed on it. The author and the author’s employers and affiliates assume no responsibility for this information and make no guarantee that the information or analysis contained herein will be updated in the future.