Balaji: 'Verification cost' becomes the real bottleneck for AI users

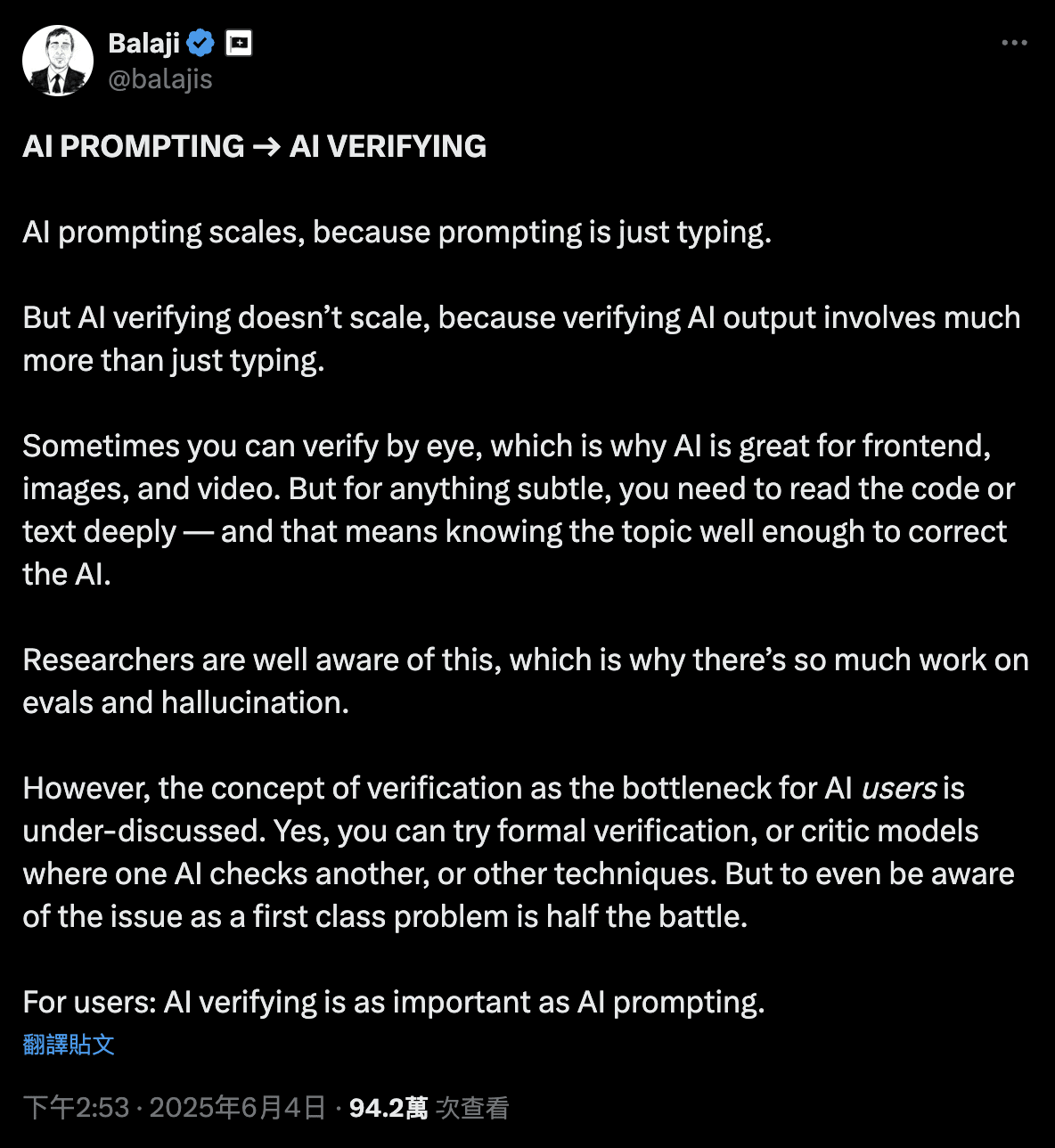

Former Coinbase CTO Balaji pointed out last month that using AI can be divided into two stages: writing the prompt and verifying the output.

The former is something anyone can do by typing a few lines. The latter is harder: it requires domain expertise, patience, and logical reasoning to determine whether the AI has made an error or produced a 'hallucination'.

Image source: X/@balajis

He notes that this gap is easy to close for images and video, because the human eye is naturally good at judging visual content. With code, technical articles, or logical reasoning, however, verification becomes much harder.

In his view, the most important question when using AI is how to verify, cost-effectively, that a model's output is correct. Non-visual domains need other tools or products to carry out that verification.

He added: 'For users, AI verification is just as important as AI prompting.'

Karpathy: AI speeds up creation but does not shorten verification

Andrej Karpathy, a co-founder of OpenAI and former director of AI at Tesla, where he led work on self-driving, built on Balaji's point. He argues that creation is essentially a repeating two-stage loop of 'generation' and 'discrimination': 'You make a stroke (generation), and you also have to step back and think if that stroke really improves the work (discrimination).'

He believes that large language models (LLMs) dramatically compress the time cost of 'generation', letting users obtain large amounts of output instantly, but they do little to reduce the cost and effort of 'discrimination'. The problem is especially acute in coding.

LLMs can easily produce dozens or even hundreds of lines of code, but engineers still have to read and understand them, checking every piece of logic and every potential error line by line.

Karpathy notes that this review is in fact the most time-consuming task for most engineers, a problem known as the 'verification gap': AI speeds up creation, but the time it saves is shifted directly onto verification.

a16z: Cryptography should fill the trust gap of the generative era

The well-known venture capital firm a16z approaches the issue at the institutional and industry level. It argues that because AI content is cheap to generate and hard to verify, AI will accelerate the spread of misinformation and flood the internet with counterfeit content. a16z's answer is that trust must be engineered, by introducing cryptographic techniques such as:

Cryptographically hashing AI-produced content as it is published (hashed posts)

Publishing under blockchain-verified identities (crypto IDs)

Building a trustworthy chain of content through the openness and traceability of on-chain data

These practices not only make information immutable and verifiable, but also provide a line of defense for content credibility in the AI era, and they are expected to become an important intersection of cryptography and AI.
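To make the 'hashed posts' and 'content chain' ideas concrete, here is a minimal Python sketch. It is an illustration, not a16z's design: it assumes SHA-256 over a JSON payload and uses an in-memory list as a stand-in for on-chain storage, and the names `Entry`, `append`, `verify` and `author_id` are hypothetical. It also leaves out the crypto-ID part; a real scheme would sign each entry with the author's private key, which the standard library does not provide. Each entry's digest covers its author, content and the previous entry's digest, so editing any earlier post breaks verification.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Optional

# All-zero digest used as the "previous hash" of the first entry.
GENESIS = "0" * 64


@dataclass(frozen=True)
class Entry:
    author_id: str  # hypothetical identity string (signing is omitted here)
    content: str
    prev_hash: str
    digest: str


def entry_digest(author_id: str, content: str, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the entry's fields."""
    payload = json.dumps(
        {"author": author_id, "content": content, "prev": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain: List[Entry], author_id: str, content: str) -> List[Entry]:
    """Return a new chain with one more entry linked to the current head."""
    prev = chain[-1].digest if chain else GENESIS
    digest = entry_digest(author_id, content, prev)
    return chain + [Entry(author_id, content, prev, digest)]


def verify(chain: List[Entry], anchored_head: Optional[str] = None) -> bool:
    """Recompute every link; optionally compare the head to a trusted digest."""
    prev = GENESIS
    for entry in chain:
        if entry.prev_hash != prev:
            return False  # link to the previous entry is broken
        if entry_digest(entry.author_id, entry.content, entry.prev_hash) != entry.digest:
            return False  # content was changed after it was hashed
        prev = entry.digest
    # If the head digest was published somewhere trusted (e.g. on-chain),
    # a forgery that re-hashes every entry still fails this comparison.
    return anchored_head is None or prev == anchored_head


chain = append([], "alice", "Original post")
chain = append(chain, "alice", "Follow-up post")
head = chain[-1].digest
print(verify(chain, head))  # True

# Swap in edited content but keep the old digest: verification fails.
forged = [Entry("alice", "Edited post", GENESIS, chain[0].digest)] + chain[1:]
print(verify(forged, head))  # False
```

The design choice worth noting is the final `anchored_head` check: someone who rewrites a post can recompute every digest so the chain is internally consistent, but its head will no longer match a digest that was published earlier in a place they cannot alter, which is the role on-chain data plays in the scheme described above.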

From prompting to verification: the new literacy of the AI era takes shape

Generative AI is driving exponential growth in the production of information, but without equally efficient ways to verify it, users risk being caught between time-consuming manual checks and a polluted information supply.

The core skill of the AI era is therefore no longer just writing precise prompts, but verifying AI output effectively and cost-effectively, whether through AI models reviewing one another or through dedicated verification tools.

This article is reprinted with permission from Chain News.

Original title: 'Does AI make life more convenient? Balaji and a16z discuss how to shorten the time cost of verifying AI content'

Original author: Crumax

This article, 'Former Coinbase CTO: AI accelerates creation, but verification costs become a true bottleneck', was first published in Crypto City.