A study by researchers from George Washington University showed that being polite to AI models is a waste of computational resources.

Adding the words "please" and "thank you" to prompts has a negligible effect on the subsequent quality of chatbot responses.

The researchers found that polite language is generally "orthogonal to substantive good and bad output tokens" and has a "negligible effect on the dot product." In other words, such words point in directions of the model's internal embedding space that are unrelated to answer quality, so they have virtually no impact on the outcome.
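The geometric claim can be illustrated with a toy calculation. The sketch below is not the study's model: the three-dimensional vectors and the names `good_direction`, `query`, and `polite` are invented for illustration, on the assumption that a "polite" token is exactly orthogonal to the direction associated with good output.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    """Cosine similarity: 0.0 means the vectors are orthogonal."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

# Hypothetical embeddings, chosen by hand for this example.
good_direction = [1.0, 0.0, 0.0]  # stands in for "good output" tokens
query = [0.8, 0.1, 0.0]           # substantive content of the prompt
polite = [0.0, 0.0, 0.5]          # a "please"-like token, orthogonal to good_direction

# Adding the polite token shifts the query only along the third axis.
query_with_polite = [q + p for q, p in zip(query, polite)]

score_plain = dot(query, good_direction)
score_polite = dot(query_with_polite, good_direction)

print(cosine(polite, good_direction))  # orthogonality check
print(score_plain, score_polite)       # alignment with the "good" direction
```

Under these assumptions, both scores are identical, because the polite component contributes nothing along `good_direction`. Real embeddings are high-dimensional and only approximately orthogonal, which is why the study describes the effect as negligible rather than zero.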

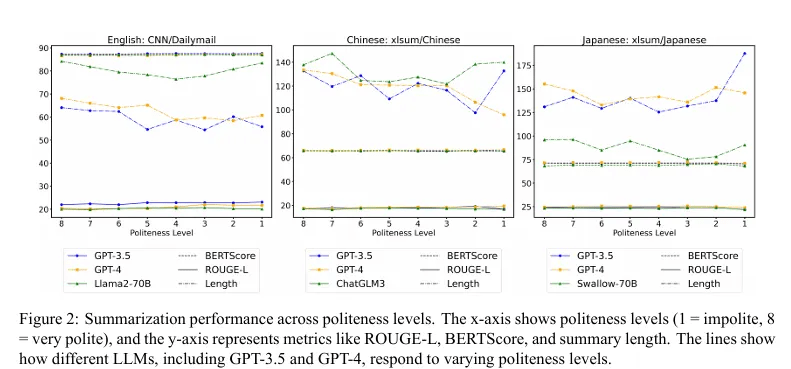

The paper contradicts a 2024 Japanese study, which found that politeness improves the performance of AI models. That work tested GPT-3.5, GPT-4, PaLM-2, and Claude-2.

Performance gains depending on the level of politeness. Data: study.

AI Director David Acosta attributed the discrepancy in results to the overly simplified model used by the George Washington University team.

"Conflicting results about politeness and AI performance are generally due to cultural differences in training data, nuances in prompt design for specific tasks, and contextual interpretations of politeness, which require cross-cultural experiments and task-adapted assessment systems to clarify the influence," he commented.

The team behind the new work acknowledged that their model is "deliberately simplified" compared to commercial systems like ChatGPT. However, they expect the same result to hold when the approach is applied to more complex neural networks.

"Whether the AI's response is inadequate depends on the training of the LLM, which shapes the embeddings of tokens, and the content of the tokens in the query — rather than whether we were polite with it or not," the study states.

Earlier, in April, OpenAI CEO Sam Altman said that the company had spent tens of millions of dollars on responses to users who said "please" and "thank you."