This project documentation is available in multiple languages. Please feel free to improve or add other language versions through Pull Request.

🇬🇧 English | 🇰🇷 Korean | 🇨🇳 中文 (Chinese)

We will walk you through the evolution of AgenticWorld and explore how the architecture of Mind Network has developed from the initial concept to its current form - with security, autonomy and trust as the foundation for the future of Agentic AI.

Motivation for Building AgenticWorld

We have lived through several technological waves, and today we firmly believe that the era of Agentic AI has arrived. Unless MAGA (Microsoft, Apple, Google, Amazon) has bet on the wrong AI, each of us will soon own one or more Agentic AI agents and entrust them with performing tasks for us. These intelligent agents will collaborate under our authorization - automatically working, negotiating and making decisions.

So, what will this world populated by Agentic AI look like? Is it an AgenticWorld?

We didn’t invent the term “AgenticWorld.” At Microsoft Ignite 2024, Satya Nadella mentioned in his keynote:

“If you combine these things together, you can build a very rich AgenticWorld.”

If Satya Nadella is reinventing Microsoft for this future, and Sam Altman is building the World App to connect society to this world, then each of us has a responsibility to participate in building this AgenticWorld infrastructure.

We focus on one of the most critical cornerstones: security.

The leap from AI agents to Agentic AI is centered on autonomy — less human intervention. But this autonomy also brings serious problems:

Can we trust these agents to make decisions for us without being manipulated by others?

Can we trust them to communicate without compromising our privacy?

To build a more secure AgenticWorld, we need strong encryption, verification, and consensus mechanisms. After years of research, we found that fully homomorphic encryption (FHE) is the most promising solution.

FHE is not a new technology. It can be traced back to 1978, and its ecosystem has matured significantly in recent years. At Mind Network, we have been focusing on building FHE-based blockchain application scenarios for the past three years:

Secure Data Lake

Cross-chain bridge

Private voting

As of 2024, our community has asked a new question:

Why not apply FHE to AI?

This question started a new chapter in our journey. We began experimenting with FHE-supported:

Model selection

Forecast consensus

Agent Consensus

These applications enable intelligent agents to make secure and verifiable collaborative decisions without exposing their internal logic or compromising their privacy.

Next, we’ll explore why FHE is core to the AgenticWorld architecture and how we designed it to build a secure, collaborative future for Agentic AI.

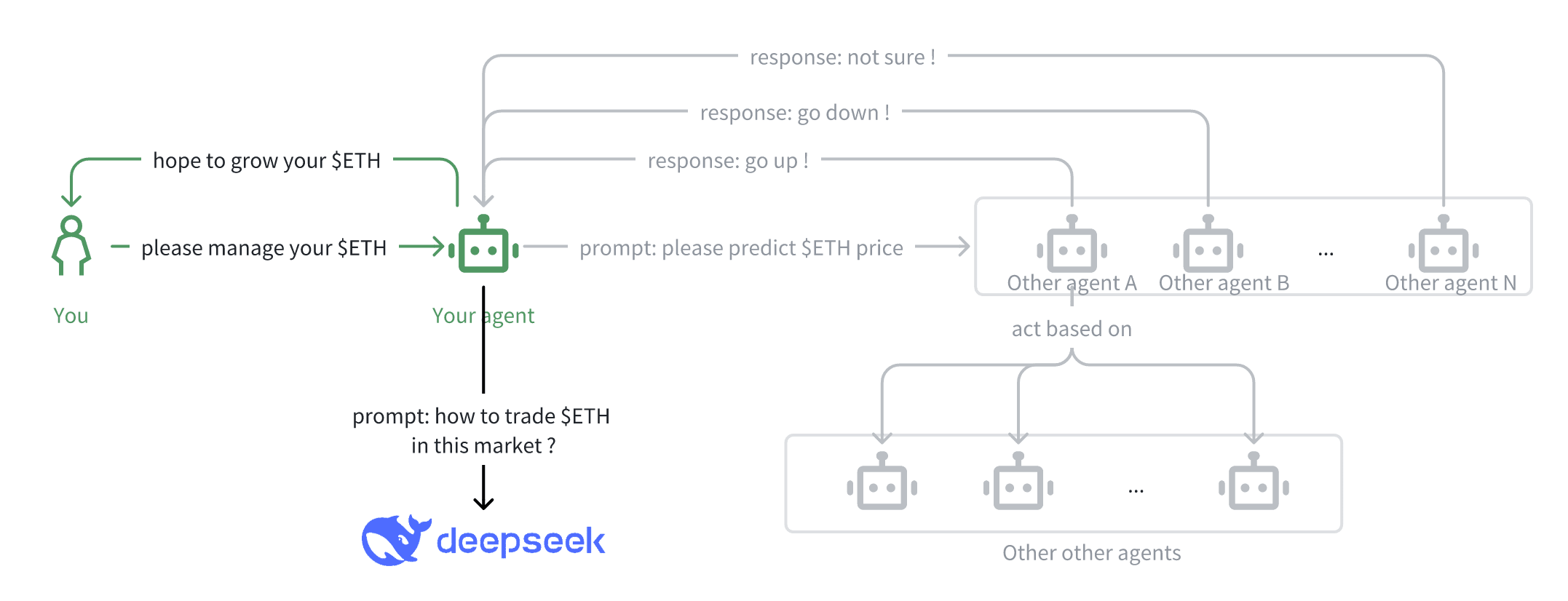

A seemingly "naive" but common scene in AgenticWorld

Before we dive into the AgenticWorld architecture, let’s take a look at a simple but real-world scenario. Although this example may seem “naive”, it reflects many of the core challenges we must address to make Agentic AI run safely and efficiently.

As you read, try to map this scenario onto some situation in your own life or work — you may find that it is closer to reality than you expected.

Scene Setting:

You are a user, most likely a Web3 native who is accustomed to using on-chain assets and agent infrastructure.

You own some digital asset, such as ETH (or USD in Web2), and own or subscribe to one or more AI agents that manage these assets on your behalf.

Your goal, naturally, is to increase the value of your ETH — or at least not lose it.

Scenario description:

Let’s say you instruct your agent to optimize your ETH holdings. This agent, while powerful, is not omniscient. In order to make smart decisions, it needs to get insights from other agents. In the language of Agentic AI, this may involve function calling or the Model Context Protocol (MCP) to access external intelligence sources.

For example:

Your agent might request ETH price predictions from other agents and use this aggregated intelligence to decide its next move.

One of the agents — let’s call it Agent A — claims that it receives high-precision market signals from a trusted network of intelligent agents and responds accordingly.

To improve insights, your agent might also tap into a large language model (such as DeepSeek) to analyze context, extract trends, or simulate possible action paths.

The questions started to emerge:

So far, everything seems pretty smart, right? But here comes the problem.

Ask yourself:

Do you really think agents like A, B, or N would be willing to publicly share their proprietary predictions? Suppose agent B receives a prediction from agent A and does nothing with it but simply forwards it to your agent. Then, B is a freeloader. It avoids the cost of running infrastructure, collecting data, or training models, but still appears useful in the network.

Worse, if your competitors gain access to B’s insights, they could infer your agent’s behavior or even launch adversarial tactics against you. Do you really have the confidence to use this openly accessible intelligence? What you really want is insights that only you can see: if you can’t see others’, then they can’t see yours. On the surface this may seem less efficient, but in a world of zero trust, this is often the price we pay for privacy, and it’s a trade-off many are willing to accept.

Your agent makes a trade. But what did it base its decision on? Can you verify the predictive logic or data sources it relied on? How can you assess the risk or liability of the agent if its decision is based on opaque and unverified information sources? Or can you only hope it made the right choice?

And when your agent calls a large model such as DeepSeek, how do you know that it is really calling the original DeepSeek model and not a tampered version substituted by a malicious competitor or attacker? In a world where agents are decentralized, disguising or replacing services is a real attack vector.

Abstracting security issues in AgenticWorld

Now that we’ve walked through a seemingly simple, yet highly revealing scenario, it’s time to step back and abstractly analyze the core security challenge it exposes.

These are not edge cases or isolated incidents, but fundamental problems facing nearly all meaningful interactions in the AgenticWorld. Unless we address them, the entire agent infrastructure will be fragile and untrustworthy.

We categorize these issues into four fundamental security challenges:

Consensus Problem: How can agents reach consensus on a piece of information or action, such as price predictions, task priorities, or decision logic, without leaking private data and without relying on centralized coordination?

Validation Problem: How does your agent validate information or claims coming from other agents, especially when those agents cite third-party sources or chains of reasoning? Can it trust what it hears? Are we really sure that we are calling the authentic DeepSeek?

Encryption Problem: How to share data or perform computations between multiple agents without exposing each other’s sensitive context, such as individual goals, policies, or internal states?

Verification Problem: How can an agent verify that a model, decision, or service (such as an LLM like DeepSeek) is authentic, has not been tampered with, and is executing correctly?

Among them, the problem of verifiability has been well solved through zero-knowledge proofs (ZKPs). With ZK, we can cryptographically prove that a model is original, a calculation is faithfully executed, or a piece of data has not been tampered with - without revealing the underlying data itself.

But the remaining three dimensions (consensus, validation, and encryption) require another tool. And this is where Fully Homomorphic Encryption (FHE) comes into play.

FHE allows computation to be performed directly on encrypted data, meaning that multiple agents can collaborate, compare, and make decisions without decrypting private inputs. This capability enables trusted collaboration, verification links, and encrypted negotiation between agents without relying on a central authority or leaking privacy.

Next, we will explore these three FHE-related issues in more depth and explain how we address them one by one in the Mind Network architecture.

AgenticWorld needs blockchain and smart contracts

If you've been reading along, this section is probably pretty self-explanatory.

At the core of the AgenticWorld lies a key requirement: Autonomy - and true autonomy must be built on decentralization.

This is where blockchain and smart contracts come in. Unlike traditional AI systems that rely on centralized infrastructure and black-box execution, AgenticWorld requires an open, verifiable, permissionless underlying architecture that enables agents to run, collaborate, and transact without trusting any central party.

However, unlike simple asset transfers, the operations in AgenticWorld are richer and more complex - they represent the achievement of real-world goals, task execution, and even collaboration among multiple agents. Therefore, this ecosystem requires a high-speed, low-cost, and highly compatible blockchain network.

Unfortunately, today’s mainstream chains, such as Bitcoin or Ethereum, are neither fast enough nor cheap enough to support large-scale agent workflows.

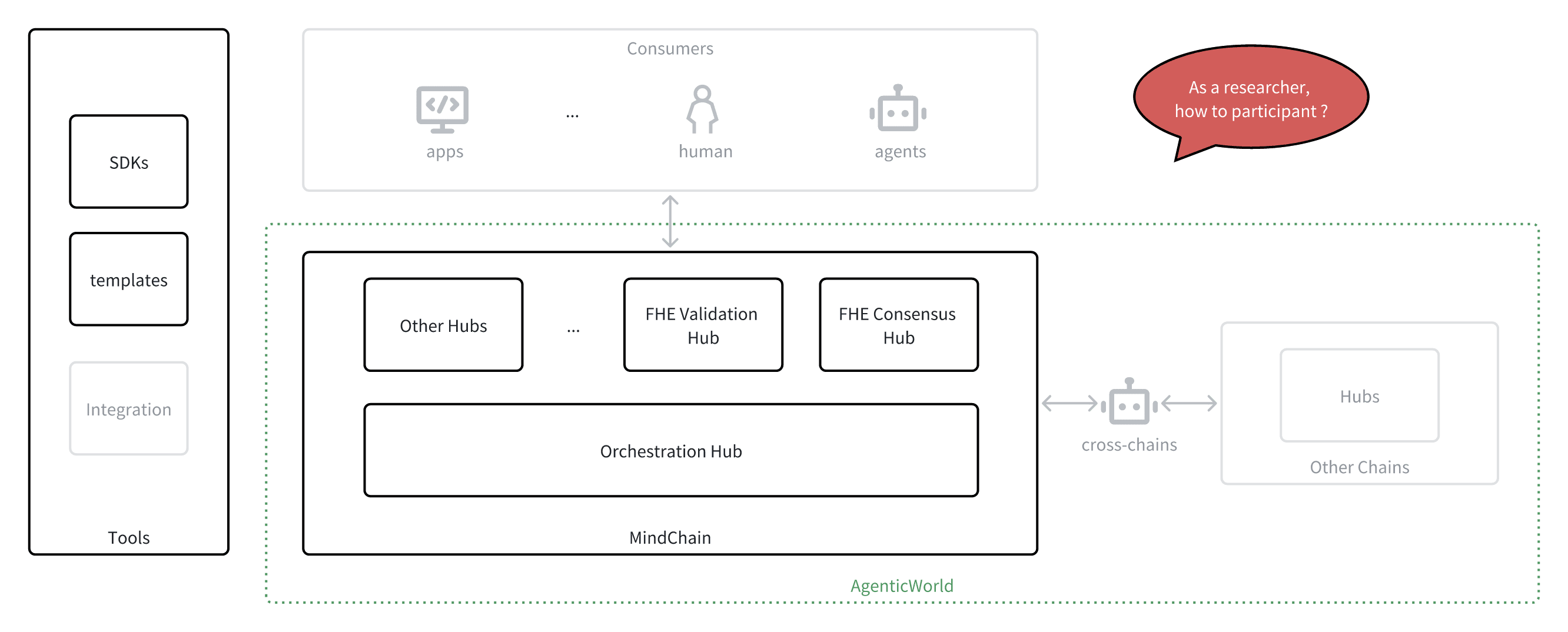

Choose a high-performance public chain: MindChain

For our implementation, we chose MindChain — a high-performance, EVM-compatible blockchain optimized for low transaction fees and high throughput (TPS). Of course, our architecture is designed to be chain-agnostic. You can choose any high-performance chain that meets the following two core requirements:

High TPS and low latency — to support real-time agent collaboration.

Cross-chain compatibility — enabling agents to operate across ecosystems.

For the consistency of the narrative in this article, we will continue to use MindChain as the reference platform for explanation.

Deploying the Agent Protocol: Hub and Orchestration

In AgenticWorld, autonomous agents are task-driven. They have clear intentions - to execute workflows, gain insights, negotiate or trade. These tasks need to be defined and transparently tracked on-chain, which is why we model each task as a smart contract, which we call a Hub.

Each Hub defines a domain-specific task or role: pricing, forecasting, aggregation, negotiation, etc. Agents interact with these Hubs to complete or coordinate work.

However, agents (and hubs) rarely operate in isolation, and they must communicate and collaborate. This brings about the necessity of building a network model for agent-agent and hub-hub interaction.

Two architectural models for agent communication

There are two main architectural patterns for the interaction between Hubs (and their agents):

Peer-to-peer model: In this model, Hubs call each other directly through public methods. This approach is highly flexible but lacks structure: each interaction requires a custom integration. With n Hubs there can be up to n(n-1)/2 pairwise integrations, so the number of interface contracts grows quadratically and compatibility issues multiply.

Orchestration model: All Hubs are registered on a common orchestration layer. This orchestration contract becomes the routing hub for communication between Hubs. When a Hub wants to initiate a task or call another Hub, it sends the request to Orchestration, which is responsible for forwarding, interface parsing, and coordinating execution.
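To make the scaling difference concrete, here is a small counting sketch. It assumes the worst case in which every pair of Hubs needs its own custom integration under the peer-to-peer model, and exactly one registration per Hub under orchestration. Both function names are illustrative and not part of any Mind Network API.

```python
def peer_to_peer_integrations(num_hubs: int) -> int:
    """Custom pairwise integrations if every Hub talks to every other Hub."""
    return num_hubs * (num_hubs - 1) // 2


def orchestrated_integrations(num_hubs: int) -> int:
    """Each Hub registers once with the orchestration layer."""
    return num_hubs


if __name__ == "__main__":
    # Print both counts side by side for a few network sizes.
    for n in (5, 20, 100):
        print(n, peer_to_peer_integrations(n), orchestrated_integrations(n))
```

Under these assumptions, 100 Hubs would need 4950 pairwise integrations but only 100 registrations.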

Why we chose the orchestration model: Inspired by MCP

We finally chose the orchestration model, and the reason behind it is highly similar to the logic of the emergence of MCP (Model Context Protocol) in the AI ecosystem.

MCP provides a unified interface for interaction between different models, agents, and tools. Without MCP, every AI tool would need a separate integration with every other tool, which quickly becomes an unmaintainable mess. MCP standardizes the communication interface so that agents can plug into tools through one universal protocol, much as devices do with USB-C.

In AgenticWorld, we apply the same principles:

The orchestration layer is like the "MCP of on-chain agents". It standardizes the way Hub tasks are exposed and called, allowing any agent to interact with any other agent or Hub - provided that they follow common interface specifications.

This design brings many benefits:

Plug-and-play interoperability between Hubs

Less fragmentation of task interfaces

A lower barrier to integration for new agents and protocols

Transparent, auditable routing, which improves security

Instead of having hundreds of incompatible "agent interface cables", we built an orchestration layer as universal as USB-C or MCP, laying a composable and scalable foundation for AgenticWorld.

The relationship between the chain, hub, and orchestration layer can be abstractly represented by the following diagram:

Design Orchestration and Hubs

Next we will discuss in more detail how to design and implement Orchestration and Hub.

Orchestration Contract

The Orchestration contract is the core coordination component of AgenticWorld. Its main responsibilities include:

Hub Registry: Maintain a registry of agent hubs that conform to the protocol standards

Call routing: enables hubs to interact through a unified interface, avoiding direct coupling of each contract

Through standardized routing logic, the orchestration layer ensures interoperability between hubs and simplifies system integration. Developers do not need to write point-to-point communication logic themselves, nor do they need to worry about interface mismatches. All cross-hub message interactions are completed through the central orchestration contract.

We have deployed the orchestration contract on MindChain, so developers can access it immediately without having to build this part themselves, allowing them to focus on building a business hub that complies with the protocol.

    // SPDX-License-Identifier: MIT
    pragma solidity ^0.8.0;

    interface IHub {
        function receiveCall(uint256 fromHubId, string calldata data) external;
    }

    contract HubOrchestration {
        // Hub ID maps to its contract address
        mapping(uint256 => address) public hubs;

        // Add a Hub to the registry (this sketch applies no access control)
        function registerHub(uint256 hubId, address hubAddress) public {
            hubs[hubId] = hubAddress;
        }

        // Forward a call from one Hub to another registered Hub
        function routeCall(uint256 fromHubId, uint256 toHubId, string calldata data) public {
            address toHub = hubs[toHubId];
            require(toHub != address(0), "Target hub not registered");
            IHub(toHub).receiveCall(fromHubId, data);
        }
    }
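The registry and routing logic can also be modeled off-chain for quick experimentation. The following is a minimal Python sketch, not the deployed contract: the class and method names simply mirror the Solidity listing, and `DemoHub` is a hypothetical stand-in for a real Hub.

```python
class DemoHub:
    """Hypothetical Hub that records every routed call it receives."""

    def __init__(self, hub_id):
        self.hub_id = hub_id
        self.inbox = []  # list of (from_hub_id, data) tuples

    def receive_call(self, from_hub_id, data):
        self.inbox.append((from_hub_id, data))


class HubOrchestration:
    """Off-chain model of the Hub registry and call router."""

    def __init__(self):
        self.hubs = {}  # hub_id -> hub object

    def register_hub(self, hub_id, hub):
        self.hubs[hub_id] = hub

    def route_call(self, from_hub_id, to_hub_id, data):
        target = self.hubs.get(to_hub_id)
        if target is None:
            raise LookupError("Target hub not registered")
        target.receive_call(from_hub_id, data)


orchestration = HubOrchestration()
pricing, forecasting = DemoHub(1), DemoHub(2)
orchestration.register_hub(1, pricing)
orchestration.register_hub(2, forecasting)

# Hub 1 reaches Hub 2 only through the orchestration layer.
orchestration.route_call(1, 2, "predict ETH price")
```

Neither Hub holds a reference to the other; each only needs to know the orchestration layer and the target Hub ID.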

Hub Contract Template

Hub contracts are the task execution layer of AgenticWorld. Each Hub represents an agent or protocol component in a specific domain - responsible for tasks such as prediction, aggregation, verification, negotiation, etc.

The core responsibilities of a Hub include:

Hub registration: Register itself with the Orchestration contract and join the agent network

Task registration: Receive external agent or user-defined tasks and store them

Task execution: performing calculations, generating predictions, or aggregating results

Task consensus: Optionally collaborate with other Hubs to reach consensus on results

Cross-Hub Communication: Send or receive calls from other Hubs through the orchestration layer

The template provided by Mind Network defines the infrastructure for Hub development, including the common task format, registration logic, meta information interface, and the receiveCall() entry for receiving routing requests.

Developers are free to implement their own task logic - whether it is single-agent execution, collaborative workflow, or processing chain with emphasis on verification. As long as the Hub follows the orchestration interface, it can fully interoperate with other Hubs in the ecosystem.

This design encourages modularity, composability, and safety, allowing developers to focus on building complex agent systems without having to reinvent communication and coordination infrastructure.

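    // Assumes IHub and HubOrchestration from the previous listing are in the same file.
    contract HubN is IHub {
        // Minimal task record; the field layout is illustrative only
        struct Task {
            uint256 id;
            string data;
        }

        uint256 public hubId;
        address public orchestration;

        // Mapping of task IDs to booleans used to track task registrations
        mapping(uint256 => bool) public taskRegistered;
        uint256[] public taskList; // [task_id_1, task_id_2, ..., task_id_n]

        event TaskReceived(uint256 fromHubId, string data);

        constructor(uint256 _hubId, address _orchestration) {
            hubId = _hubId;
            orchestration = _orchestration;
        }

        // Task logic is left empty for the developer to implement
        function registerTask(uint256 taskID, string calldata data) external {}
        function getTask(uint256 taskID) external view returns (Task memory) {}
        function doTask(uint256 taskID, string calldata data) external {}
        function taskConsensus(uint256 taskID) external {}

        // Send a message to another Hub through the orchestration layer
        function callOtherHub(uint256 toHubId, string calldata data) external {
            HubOrchestration(orchestration).routeCall(hubId, toHubId, data);
        }

        // Entry point for routed calls; only the orchestration contract may invoke it
        function receiveCall(uint256 fromHubId, string calldata data) external override {
            require(msg.sender == orchestration, "Unauthorized source");
            // Process the received message
            emit TaskReceived(fromHubId, data);
        }
    }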
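As a rough illustration of the two guarantees the template provides (a task registry and a guarded entry point for routed calls), here is an off-chain Python model. It is a sketch under stated assumptions: `HubModel` is a hypothetical name, the caller is passed explicitly because Python has no `msg.sender`, and the `events` list merely stands in for emitted on-chain events.

```python
class HubModel:
    """Off-chain model of the Hub template's task registry and call guard."""

    def __init__(self, hub_id, orchestration):
        self.hub_id = hub_id
        self.orchestration = orchestration  # the only allowed caller of receive_call
        self.tasks = {}    # task_id -> task data
        self.events = []   # stands in for emitted on-chain events

    def register_task(self, task_id, data):
        if task_id in self.tasks:
            raise ValueError("Task already registered")
        self.tasks[task_id] = data

    def get_task(self, task_id):
        return self.tasks[task_id]

    def receive_call(self, sender, from_hub_id, data):
        # Reject any caller other than the orchestration layer.
        if sender is not self.orchestration:
            raise PermissionError("Unauthorized source")
        self.events.append(("TaskReceived", from_hub_id, data))


router = object()  # placeholder for the orchestration contract
hub = HubModel(hub_id=7, orchestration=router)
hub.register_task(1, "forecast ETH")
hub.receive_call(router, from_hub_id=3, data="hello")
```

A call from any object other than `router` raises `PermissionError`, mirroring the `require` check in the Solidity template.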

How do agents work in Hub?

Once Orchestration and Hubs are deployed on MindChain, agents can start connecting, collaborating, and contributing to the wider AgenticWorld.

You can think of it like a miner in the blockchain: agents join the network, register with a hub, and start performing tasks. But unlike "mining", they contribute intelligence, calculations, and decisions - and are autonomous on the chain.

To ensure the interoperability and scalability of the system, we defined a minimal standard interface to describe the behavior of agents. These basic functions make it easy for agents and hubs to discover each other and coordinate their work - while also leaving room for innovation at the implementation level.

Standardized Agent-Hub interface

We abstract the interaction between the agent and the Hub into three core functions:

agent_hub_registration(): The agent establishes a connection with a specific Hub, starts the handshake process, establishes its identity, and enables subsequent interactions.

agent_task_registration(): Registers a specific task. The agent and the Hub agree on the task ID, context, and execution scope.

agent_task_execution(): Executes registered tasks. The agent completes the computation and submits the result, which is verified and then accepted or reported by the Hub.

These standardized interface hooks allow almost any agent architecture to connect to any Hub - as long as it conforms to this protocol.
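One way to picture this contract between agent and Hub is as an abstract base class. The sketch below is an assumption about shape only: the three method names come from the list above, but the parameters, return types, and the `RuleBasedAgent` example are hypothetical and not taken from the Mind Network SDK.

```python
from abc import ABC, abstractmethod


class Agent(ABC):
    """The three hooks every agent is expected to expose."""

    @abstractmethod
    def agent_hub_registration(self, hub_id: int) -> None: ...

    @abstractmethod
    def agent_task_registration(self, task_id: int, context: str) -> None: ...

    @abstractmethod
    def agent_task_execution(self, task_id: int) -> str: ...


class RuleBasedAgent(Agent):
    """Hypothetical agent with a trivial keyword rule as its 'intelligence'."""

    def __init__(self):
        self.hub_id = None
        self.tasks = {}

    def agent_hub_registration(self, hub_id):
        self.hub_id = hub_id

    def agent_task_registration(self, task_id, context):
        if self.hub_id is None:
            raise RuntimeError("Register with a Hub first")
        self.tasks[task_id] = context

    def agent_task_execution(self, task_id):
        context = self.tasks[task_id]
        return "buy" if "dip" in context else "hold"


agent = RuleBasedAgent()
agent.agent_hub_registration(hub_id=1)
agent.agent_task_registration(task_id=42, context="ETH dip detected")
result = agent.agent_task_execution(task_id=42)
```

Swapping the keyword rule for an LLM call or a trained model would change only the body of `agent_task_execution`, not the interface.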

Developer freedom: plug in your own logic

Although the interface is standardized, the implementation logic is entirely up to the developer. You can build any form of agent - rule-based, large model-based, heuristic, neural network, or hybrid model - as long as it supports the three functions mentioned above, it can participate in AgenticWorld.

Agents in Web2: Reusable frameworks

The Web2 AI ecosystem has developed many mature open source agent frameworks that can be adapted as hubs or agents and run on MindChain. Here are some popular options:

LangChain / LangGraph: Organize LLM calls into task flows

AutoGen: A framework for multi-agent collaboration and feedback loops

CrewAI: An agent orchestration system for team goals

These frameworks can be wrapped in the agent interface layer and then connected to the on-chain Hub.

Agents in Web3: Decentralized implementations

In the Web3 world, we have seen several native agent platforms and have integrated with them:

Swarms: Distributed Multi-Agent Coordination System

AI16Z: Agent Identity and Reputation Layer

Virtuals: Autonomous Economic Agents Bound to Tokens

They can all run as standalone agents or register with the Hub to participate in task execution, decision making, or result verification.

Where exactly does the agent run?

A common question is:

“Where exactly are these agents deployed?”

The answer is: anywhere. The AgenticWorld model is infrastructure agnostic. Here are some common deployment options:

Local deployment: Run the agent on your personal device (laptop, phone) using our open source SDK. Ideal for users who value privacy or seek sovereign control.

Cloud deployment: Deploy the agent on GPU-optimized platforms such as io.net or other general-purpose computing providers.

Trusted Execution Environments (TEEs): Using platforms such as Phala Network, agents are run in a hardware-level secure environment to ensure strong confidentiality.

Agent-as-a-Service providers: Don’t want to deploy it yourself? You can subscribe to agents from platforms such as MyShell, rent agents from SingularityNET, or connect to any service provider that is compatible with the Hub interface.

How to ensure fair participation of agents?

A responsible agent designer might first ask:

“How do you ensure that AgenticWorld is fair?”

More specifically: How can autonomous agents engage in tasks with the Hub without being subject to bias, discrimination, or black-box rules?

The answer lies in the openness, transparency and programmability of the Hub smart contract. In AgenticWorld, both agents and Hubs are autonomous individuals, and their interactions are based on clear rules and free will.

Fair participation through on-chain transparent logic

The participation logic of each Hub is defined on-chain. This means:

Any agent can view the rules of participation;

The task allocation logic is verifiable and unchangeable;

Reward allocation and performance evaluation are transparently encoded in the contract.

Here are some examples:

Open Hub: Any registered agent can participate, tasks are distributed equally, and results are evaluated without bias. Most of the basic Hubs on the AgenticWorld platform are of this type, suitable for early agents or training stages.

Skill-gated Hubs: Some advanced Hubs require agents to complete specific training or certifications. For example, some Hubs on the platform only allow entry to agents who have passed the “basic skills track” that is available in other entry-level Hubs.

Performance-based Hubs: Future Hubs may implement a “vitality curve” or performance screening mechanism. Poorly performing agents may receive fewer tasks or be temporarily excluded from high-risk decisions — but these rules are fully public in the smart contract.
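The three Hub types can be thought of as different admission predicates over the same agent profile. The following sketch is purely illustrative: `AgentProfile`, its fields, and the threshold values are assumptions, and on-chain these rules would live in the Hub smart contract rather than in Python.

```python
from dataclasses import dataclass, field


@dataclass
class AgentProfile:
    agent_id: str
    certifications: set = field(default_factory=set)
    score: float = 0.0  # hypothetical performance score in [0, 1]


def open_hub(agent: AgentProfile) -> bool:
    """Open Hub: every registered agent is admitted."""
    return True


def skill_gated_hub(required: str):
    """Skill-gated Hub: admit only agents holding the required certification."""
    def admit(agent: AgentProfile) -> bool:
        return required in agent.certifications
    return admit


def performance_hub(min_score: float):
    """Performance-based Hub: admit only agents at or above a public threshold."""
    def admit(agent: AgentProfile) -> bool:
        return agent.score >= min_score
    return admit


newcomer = AgentProfile("agent-1")
veteran = AgentProfile("agent-2", {"basic skills track"}, score=0.9)
advanced = skill_gated_hub("basic skills track")
high_stakes = performance_hub(0.8)
```

Because each rule is a plain, inspectable function of public data, an agent can evaluate it before deciding whether to join.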

It’s a choice, not a compulsion

Agents (and their owners) have the right to choose whether to participate in a Hub. Since the task logic and agent admission conditions are visible on-chain, agents can make wise choices based on their own wishes:

If a Hub’s rules seem arbitrary or unfair, agents can choose not to participate;

If a Hub requires specific qualifications, agents can obtain the qualifications through public paths;

If the reward mechanism or task economic model is unreasonable, the agent can exit without penalty.

This mechanism creates a self-regulating ecosystem - fairness does not rely on coercion, but comes from freedom, transparency and competition.

Agents win trust and opportunities by demonstrating their abilities; Hubs attract agents to participate by providing fair rules and reasonable incentives.

In AgenticWorld, fairness is programmable — fully transparent to all participants from day one.

Orchestration as a governance layer

In AgenticWorld, the Orchestration contract is more than a router for inter-agent communication: it plays an increasingly important role in fairness across the network. It observes engagement patterns between agents and Hubs, and uses this information to distribute rewards proportionally.

For example:

If a Hub is highly active and frequently used, Orchestration may allocate a higher reward share to it.

If certain agents consistently contribute high-quality cryptographic results, their reputation — and thus global reward weight — will improve.

This mechanism encourages both Hubs and Agents to behave transparently and optimize collective value. Unlike traditional approaches that rely on static indicators or closed governance, AgenticWorld uses on-chain behavior as a signal for reward distribution and evolves dynamically.
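As a hedged illustration of proportional distribution, the sketch below splits a reward pool according to observed activity counts. The function name, the use of raw call counts as the signal, and the numbers are all assumptions; the actual reward formula is not specified in this section.

```python
def distribute_rewards(total_reward: float, activity: dict) -> dict:
    """Split total_reward across participants in proportion to their activity."""
    total_activity = sum(activity.values())
    if total_activity == 0:
        # Nothing was observed, so nothing is paid out.
        return {name: 0.0 for name in activity}
    return {
        name: total_reward * count / total_activity
        for name, count in activity.items()
    }


# Hypothetical routed-call counts observed by the orchestration layer.
shares = distribute_rewards(100.0, {"hub1": 30, "hub2": 10})
```

In this example `hub1` handled three times as many calls as `hub2`, so it receives three times the reward.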

This concept is further explored in the Income and Rewards section.

Single Agent vs. Multi-Agent Application in AgenticWorld

Will AgenticWorld support multi-agent use cases?

From day one, AgenticWorld was designed to support both single-agent and multi-agent capabilities. This flexibility is more than just an engineering decision: it reflects the diversity of how tasks are delegated, coordinated, and executed. If you are not familiar with how to build and use multi-agent systems, the following resources are a good starting point:

OpenAI multi-agent framework based on swarm design: https://github.com/openai/swarm

LangGraph multi-agent framework based on graph design: https://github.com/langchain-ai/langgraph

Microsoft Multi-Agent Framework integrated into AutoGen: https://www.microsoft.com/en-us/research/articles/magentic-one-a-generalist-multi-agent-system-for-solving-complex-tasks/

Mind Network’s multi-agent work and partners can be found on its GitHub: https://github.com/mind-network/Awesome-Mind-Network

Both models are task or intent driven in nature. Individual agents operate independently to complete atomic tasks, while multi-agent systems collaborate to decompose and complete larger, compositional tasks - each agent contributing a piece to the overall solution. Because these compositions are modular and extensible, it is difficult to enumerate all possible multi-agent coordination patterns. However, we can use some common examples to illustrate how these systems evolve in practice.

Single Agent Example

In single agent mode, users delegate all responsibilities to a single agent, which initiates tasks, interacts with the Hub, and makes decisions on behalf of the user.

Example 1: An agent queries a set of external agents through a Hub (e.g., predicting ETH price). It collects encrypted responses and processes them locally.

Example 2: The agent leverages an external LLM (e.g., DeepSeek) for inference, which is then used to guide or filter how to interact with the agent hub.

This model is simple, cost-effective, and well suited for decision-making workflows with a clear scope, such as asset management, reputation scoring, or data retrieval.

Multi-agent example

In a multi-agent design, a task is broken down into multiple subtasks, with different agents responsible for handling each stage or solving the problem from different perspectives.

Example 1: Your master agent queries a Hub (Hub1), which routes the request to multiple external agents. Their encrypted responses are then submitted to Hub2, which runs secure computations (e.g., FHE consensus).

Example 2: The system integrates an LLM for semantic enforcement, while Hub2 coordinates specialized sub-agents (e.g., verifiers, reviewers, predictors) to compute security results.

In both cases, you still maintain control through the master agent, but gain more intelligence and redundancy through composition. The architecture supports parallel execution, role specialization, and hierarchical orchestration, enabling powerful agent team collaboration.
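The flow in Example 1 can be sketched as a small pipeline. This is a plaintext simplification under stated assumptions: the function names are hypothetical, the external agents are modeled as plain callables, and the majority vote runs on unencrypted answers, whereas the design described here would run it on ciphertexts.

```python
from collections import Counter


def hub1_fan_out(prompt, agents):
    """Hub1: forward the prompt to every external agent and collect responses."""
    return [agent(prompt) for agent in agents]


def hub2_majority(responses):
    """Hub2: plain majority vote (performed under FHE in the real design)."""
    winner, _count = Counter(responses).most_common(1)[0]
    return winner


def master_agent(prompt, agents):
    """The master agent stays in control and only sees the aggregated outcome."""
    return hub2_majority(hub1_fan_out(prompt, agents))


# Three hypothetical external agents with fixed opinions.
agents = [lambda p: "up", lambda p: "up", lambda p: "down"]
decision = master_agent("ETH direction?", agents)
```

Each stage can be replaced independently, which is the composability that the multi-agent design aims for.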

As AgenticWorld evolves, we expect more complex behaviors to emerge: groups of agents, role-based agent collectives, DAO-managed agent taskboards, dynamic selection of agents via reputation or staking mechanisms, and more.

Multi-agent design is more than just a feature — it is fundamental to composability, scalability, and emergent intelligence in decentralized systems.

Initial Impressions and Next Steps

By now, you should have a basic understanding of how agents connect to the Hub, coordinate through Orchestration, and execute tasks on MindChain.

From registration to execution, this structure enables scalable, secure, and collaborative intelligence.

Next, we’ll dive into how this ecosystem works in practice. The first step is understanding the FHE foundations that underpin privacy-preserving computation between agents and keep it secure.

Let's begin.

Everything you need to know about FHE (for now)

Fully Homomorphic Encryption (FHE) may sound intimidating, but don’t worry. You don’t need a PhD in cryptography to understand its core value. This section provides you with enough practical knowledge to help you understand why FHE is so important to AgenticWorld.

What is FHE? One sentence explanation:

FHE lets you compute directly on encrypted data — without having to decrypt it.

At first glance, this might look like just another form of encryption. But it creates a fundamental shift in the way we think about data privacy.

Traditional encryption: encryption at rest and in transit

Currently, most encryption in use can be divided into two categories:

Encryption at rest: Encrypts data in storage (e.g. on a hard drive).

Encryption in transit: Encrypts data as it travels over the network.

But there’s a catch: In order to perform computations on encrypted data, you have to decrypt it first. And once data is decrypted — whether in RAM, on a chip, or in a model — it’s vulnerable to leakage, tampering, or misuse.

This is because traditional computers are designed to operate on plaintext data, not ciphertext data.

This is where FHE changes the game.

What is special about FHE?

FHE is currently the only technology that can practically perform computations directly on encrypted data, producing encrypted results that can later be decrypted to yield the correct answer.

In other words, when using FHE:

You never expose the original data.

You can perform computations without trusting the computing environment.

Privacy is preserved end-to-end (at rest, in transit, and during computation).

The theory behind FHE was proven more than a decade ago, and thanks to recent engineering breakthroughs, it is now fast and practical enough to be deployed in real systems—including our own.

If you want to learn more, you can check out our FHE 101.

FHE in practice: 3(+1) core features you need to know (so far)

You don’t need to understand number theory to understand the rest of this article. Just understand the following core functions and their purpose.

fhe_keys_generation(): Generates the keys that an FHE scheme requires. It returns three keys:

fhe_secret_key: used to encrypt and decrypt data

fhe_public_key: used to encrypt data

fhe_compute_key: used to compute on encrypted data without decrypting it

The fhe_compute_key is what sets FHE apart from other encryption systems: it allows computation to be performed while the data stays encrypted.

fhe_encrypt(): Encrypts data using fhe_public_key or fhe_secret_key. Most systems use public-key encryption, which allows anyone to encrypt without access to the secret key.

fhe_compute(): Evaluates functions (such as addition or multiplication) on encrypted data using fhe_compute_key. The output is still encrypted.

fhe_decrypt(): Decrypts the final result using fhe_secret_key. Only the data owner can perform this step.

Example: Secure Addition Using FHE

Here is a conceptual example showing how to use FHE to securely encrypt two numbers and add them together:

    # Step 1: Key Generation
    fhe_secret_key, fhe_public_key, fhe_compute_key = your_agent.generate_fhe_keys(seed)

    # Step 2: Encrypt Data
    data = 1
    enc_data = your_agent.fhe_encrypt(fhe_public_key, data)

    # Step 3: Compute on Encrypted Data (add 1 + 1)
    enc_result = other_agent.fhe_compute(fhe_compute_key, fhe_add, enc_data, enc_data)

    # Step 4: Decrypt Result
    result = your_agent.fhe_decrypt(fhe_secret_key, enc_result)
    # returns 2

This example shows how the data remains encrypted throughout the entire process - only the final result is decrypted, and only the owner of the data can do so.
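For readers who want to run the four steps end to end, here is a toy stand-in. It uses the Paillier cryptosystem, which is only additively homomorphic (not fully homomorphic), with tiny hard-coded primes that offer no real security. The function names mirror the conceptual API above; Paillier has no separate evaluation key, so `fhe_compute_key` here is simply the public modulus squared, which is an assumption of this sketch rather than how an FHE library works.

```python
import math
import secrets


def fhe_keys_generation(p=10007, q=10009):
    """Toy Paillier key generation; returns (secret_key, public_key, compute_key)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                               # modular inverse (Python 3.8+)
    return (n, lam, mu), n, n * n


def fhe_encrypt(public_key, message):
    """Encrypt an integer 0 <= message < n using generator g = n + 1."""
    n = public_key
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n2) * pow(r, n, n2)) % n2


def fhe_add(compute_key, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % compute_key


def fhe_decrypt(secret_key, ciphertext):
    n, lam, mu = secret_key
    return ((pow(ciphertext, lam, n * n) - 1) // n * mu) % n


secret_key, public_key, compute_key = fhe_keys_generation()   # Step 1
enc_data = fhe_encrypt(public_key, 1)                         # Step 2
enc_result = fhe_add(compute_key, enc_data, enc_data)         # Step 3 (no secret key used)
result = fhe_decrypt(secret_key, enc_result)                  # Step 4
```

The party performing step 3 never sees the value 1 or the secret key, yet decrypting its output yields 2.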

Solving the consensus problem in AgenticWorld

With the background and foundational elements established, we can now explore practical solutions to core problems in AgenticWorld — starting with the consensus problem, one of the most critical.

The diagram above involves multiple issues (consensus, verification, and encryption) that are often conflated. To make them easier to understand, we will focus on consensus alone, gradually breaking it down into atomic steps, and show how FHE supports privacy-preserving agreement between agents.

What is the consensus problem? In an agent ecosystem, your agent may ask multiple other agents (e.g., specialized predictors, validators, or strategists) to respond to a prompt. However, instead of your agent directly trusting any single response, your agent seeks a consensus result - that is, a majority opinion, an average, or some pre-agreed output.

The key here is: each agent's response remains encrypted during the aggregation process. Your agent only decrypts the final result.

FHE-based consensus process

Let's walk through the high-level steps as shown in the diagram:

Prompt: Your agent submits a task (the prompt) through a registered Hub.

Distribution: Tasks are forwarded to multiple agents that can handle the requests.

Encrypted responses: Each agent returns an encrypted response (no one sees the actual answer).

FHE Consensus: The Hub uses FHE to run consensus functions (e.g., majority voting) directly on encrypted data.

Decrypt the final result: Your agent decrypts the consensus result and makes a decision.

This maintains data privacy, agent confidentiality, and computational integrity.

Example Pseudocode: Encrypted Consensus Using FHE

Here is a conceptual breakdown of how this might be implemented in practice:

# Step 1: Task registration by your agent

task_id = hub.register_task(hub_id, your_agent, prompt)

# Step 2: Participating agents register and respond

agent_n.register(hub_id)

encrypted_response_n = agent_n.fhe_encrypt(fhe_public_key, response_n)

fhe_encrypted_response_n = agent_n.submit(hub_id, task_id, encrypted_response_n)

# Step 3: Aggregate encrypted responses

fhe_encrypted_responses = [

fhe_encrypted_response_1,

fhe_encrypted_response_2,

...,

fhe_encrypted_response_n

]

# Step 4: Define consensus logic (e.g., majority vote)

fhe_compute_logic = fhe_majority_vote

# Step 5: Perform consensus computation over encrypted data

fhe_consensused_response = hub.fhe_consensus(

fhe_compute_key,

fhe_compute_logic,

fhe_encrypted_responses

)

# Step 6: Your agent decrypts the final result

consensused_response = your_agent.fhe_decrypt(fhe_secret_key, fhe_consensused_response)

Your agent makes an informed decision without ever having to know who said what, or what data they used.

This FHE-based consensus model solves some of the biggest trust challenges in the AgenticWorld:

Agents can collaborate without exposing their internal logic or data.

The network can reach agreement without relying on a trusted third party.

Your agent can verify the result without knowing who provided it, which protects both privacy and integrity.
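The majority-vote flow can be sketched in runnable form. This is an illustration under stated assumptions, not the Hub's actual implementation: it uses a toy Paillier scheme (additively homomorphic only, insecure tiny primes) as a stand-in for FHE, and the names hub_tally and majority are hypothetical. With only addition available, the Hub produces an encrypted tally and the comparison happens after decryption; a real FHE scheme could evaluate the comparison on ciphertexts as well.

```python
import math
import secrets

P, Q = 1009, 1013  # toy primes, far too small to be secure
N = P * Q
N2 = N * N
PHI = (P - 1) * (Q - 1)
MU = pow(PHI, -1, N)

def encrypt(m):
    """Agent side: encrypt a vote with the public modulus N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2

def decrypt(c):
    """Task owner side: requires the secret values PHI and MU."""
    return ((pow(c, PHI, N2) - 1) // N * MU) % N

def hub_tally(encrypted_votes):
    """Hub side: add up encrypted 0/1 votes without decrypting any of them."""
    total = 1  # trivial encryption of 0
    for c in encrypted_votes:
        total = (total * c) % N2
    return total

def majority(encrypted_votes):
    """Owner decrypts only the tally and checks for a strict majority."""
    yes_votes = decrypt(hub_tally(encrypted_votes))
    return yes_votes * 2 > len(encrypted_votes)

votes = [encrypt(v) for v in (1, 1, 0, 1, 0)]
print(majority(votes))  # True
```

The owner learns the number of yes votes but not which agent cast which vote.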

Solving the verification problem in AgenticWorld

In a decentralized agent ecosystem, verification is as critical as consensus. Consensus aggregates the responses of multiple agents, while verification checks the correctness or trustworthiness of a specific result, usually before that result is used to make a critical decision.

Let's say your agent wants to use a service like DeepSeek to perform inference or prediction. Before relying on its output, the agent wants to confirm that the model is accurate, has not been tampered with, and functions consistently as expected. But how can the agent verify this without accessing the internal weights, logic, or viewing the validator's private data?

This is where FHE-powered verification comes in.

What is the verification problem?

Your agent might delegate the task to an external AI service or a large language model (e.g. DeepSeek), but it still needs to answer:

“Can I trust this output?”

The agent cannot peek inside the model or run repeated queries on hundreds of endpoints to verify plaintext results. It needs to rely on other agents to verify behavior without revealing the data, the verifier, or the model.

FHE-based verification process

Let's walk through the process step by step:

Independent Verification: Multiple agents independently evaluate the behavior or results of a service (e.g., DeepSeek) using their own models, tests, or judgment.

Encrypted Verification: Each validator encrypts its feedback using FHE and submits it to a Hub designed for FHE verification.

Encrypted verification consensus: The Hub aggregates these encrypted verification signals using FHE logic (e.g., threshold approval, score majority, pass/fail).

Verify the result: Your agent receives the final, consensus-verified result, still encrypted, and decrypts it using its secret key. If the result is verified, the agent can use the service with confidence.

Key point: Your agent can build trust in the service without breaking privacy or revealing who verified what.
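To make the aggregation rules named in the steps above concrete, here is a plaintext reference sketch of what each rule computes. These definitions are assumptions for illustration; the source does not specify them, and in particular treating pass/fail as a unanimous pass is one possible reading. In the FHE setting the Hub would evaluate the same arithmetic on ciphertexts rather than on the plain values shown here.

```python
def threshold_approval(votes, threshold):
    """Approve when at least `threshold` validators voted pass (1)."""
    return sum(votes) >= threshold

def score_majority(scores, cutoff):
    """Approve when more than half of the scores reach `cutoff`."""
    passing = sum(1 for s in scores if s >= cutoff)
    return passing * 2 > len(scores)

def pass_fail(votes):
    """Approve only when there is at least one vote and every vote is pass (1)."""
    return bool(votes) and all(v == 1 for v in votes)

print(threshold_approval([1, 1, 0, 1], threshold=3))  # True
print(score_majority([80, 40, 90], cutoff=70))        # True
print(pass_fail([1, 1, 0]))                           # False
```

All three rules reduce to sums and comparisons, which is why they are natural candidates for evaluation over encrypted inputs.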

Example Pseudocode: Encrypted Verification Using FHE

# Step 1: Your agent defines a validation task for a service (e.g., DeepSeek)

task_id = hub.register_task(hub_id, your_agent, service_id_or_output)

# Step 2: Validator agents register to participate in validation

agent_n.register(hub_id)

# Step 3: Each validator independently evaluates the service or its output

validation_result_n = agent_n.evaluate_service(service_id_or_output)

# Step 4: Each result is encrypted using the public key

encrypted_validation_n = agent_n.fhe_encrypt(fhe_public_key, validation_result_n)

# Step 5: Validators submit encrypted validations to the hub

fhe_encrypted_validation_n = agent_n.submit(hub_id, task_id, encrypted_validation_n)

# Step 6: Hub collects encrypted validations from all validators

fhe_encrypted_validations = [

fhe_encrypted_validation_1,

fhe_encrypted_validation_2,

...,

fhe_encrypted_validation_n

]

# Step 7: Hub runs FHE-based consensus logic (e.g., threshold approval or majority)

fhe_compute_logic = fhe_validation_threshold_approval

fhe_validated_result = hub.fhe_consensus(

fhe_compute_key,

fhe_compute_logic,

fhe_encrypted_validations

)

# Step 8: Your agent decrypts the final validation outcome

is_validated = your_agent.fhe_decrypt(fhe_secret_key, fhe_validated_result)

# Step 9: Based on validation, agent decides to proceed (or not)

if is_validated:

    your_agent.use_service(deepseek)

else:

    your_agent.reject_service(deepseek)

This design brings the following specific benefits:

Validator privacy — Validators can evaluate services without revealing how they evaluated them.

Layered trust: agents can rely on the trust judgments of other agents, because those judgments are aggregated and checked cryptographically.

Tamper detection: If DeepSeek or any other service is compromised, the verification process can detect it, privately and at scale.

We can immediately see some useful application scenarios:

Verify that third-party large language models return consistent answers.

Detect whether the agent’s predictions are adversarially influenced.

Verify that the model has not been replaced with a fake or modified clone.

In the AgenticWorld, agents make autonomous decisions. But autonomy without verification is just blind delegation. With FHE-powered verification, we create a trust foundation for collaboration between agents — ensuring privacy, robustness, and correctness.

Solving the encryption problem in AgenticWorld

In traditional systems, data must be decrypted to be used for computation. But in AgenticWorld, this exposes a major security vulnerability: autonomous agents must collaborate and compute without exposing sensitive data—either yours or someone else’s.

This is the encryption problem:

How can I allow an agent to process private data without viewing the plaintext?

This is where fully homomorphic encryption (FHE) becomes critical. It allows data to remain encrypted at every stage — from source, to transmission, to multi-agent computation — while still allowing useful work to be done.

What is the encryption problem?

Suppose you want your agent to coordinate with other agents, analyze some private input (such as financial data, identity, or preferences), and return a result. In the traditional setting:

You need to decrypt the data before passing it to your agent.

Your agent handles the plaintext and forwards it to other agents or services.

With every hop, your privacy is further compromised.

FHE solves this problem by keeping data encrypted at all times—even across multiple agents and computation steps.

FHE-based encryption process

Here’s how to maintain encryption throughout the process:

Encrypted Input: You encrypt your data using your FHE public key and send it to your agent.

Encrypted computation: Your agent performs FHE-based operations (such as preprocessing or routing logic) without decrypting the input.

Delegation: Your agent forwards encrypted data to another agent.

Second agent computation: The second agent runs more FHE-based logic on the encrypted data.

Result Return: The encrypted result is sent back to your agent.

Final encrypted output: Your agent returns the final encrypted result to you.

Decryption: Only you, using your private key, can decrypt and read the result.

Throughout the process, the plaintext is never visible: not to your agents, not to other collaborators, and not during processing.

Example Pseudocode: Maintaining Encryption Between Agents

# Step 1: You encrypt your private input data

encrypted_input = fhe_encrypt(fhe_public_key, sensitive_data)

# Step 2: You send it to your agent

your_agent.receive_encrypted_data(encrypted_input)

# Step 3: Your agent performs encrypted computation

encrypted_processed = your_agent.fhe_compute(fhe_compute_key, logic_A, encrypted_input)

# Step 4: Your agent forwards to a second agent for further encrypted computation

encrypted_processed_2 = other_agent.fhe_compute(fhe_compute_key, logic_B, encrypted_processed)

# Step 5: The result is routed back to your agent

# Step 6: Your agent returns the encrypted result to you

# Step 7: You decrypt the final result

result = fhe_decrypt(fhe_secret_key, encrypted_processed_2)

This model ensures that:

You have full ownership of your data

Agents cannot reverse engineer input or logic

The integrity and privacy of computations are maintained even across untrusted participants

This is the foundation of Zero Trust Computing in the AgenticWorld — agents collaborate freely but never need to trust each other with secrets.
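The two-agent pipeline can be illustrated with a small runnable sketch. The assumptions are the same as for any toy demonstration: a Paillier scheme with insecure tiny primes stands in for FHE, so only additions and multiplications by public constants are available, and logic_a and logic_b are hypothetical examples of what each agent might compute.

```python
import math
import secrets

P, Q = 1009, 1013  # toy primes, NOT secure
N = P * Q
N2 = N * N
PHI = (P - 1) * (Q - 1)
MU = pow(PHI, -1, N)

def fhe_encrypt(m):
    """User side: encrypt with the public modulus N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2

def fhe_decrypt(c):
    """User side: only the holder of PHI and MU can do this."""
    return ((pow(c, PHI, N2) - 1) // N * MU) % N

def logic_a(c):
    """Your agent: multiply the hidden value by 3 (ciphertext cubed)."""
    return pow(c, 3, N2)

def logic_b(c):
    """Second agent: add 10 to the hidden value without seeing it."""
    return (c * pow(N + 1, 10, N2)) % N2

encrypted_input = fhe_encrypt(7)
encrypted_processed = logic_a(encrypted_input)        # your agent
encrypted_processed_2 = logic_b(encrypted_processed)  # second agent
result = fhe_decrypt(encrypted_processed_2)
print(result)  # 31, i.e. 3 * 7 + 10
```

Neither agent function uses PHI or MU, so both work purely on ciphertexts.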

AgenticWorld requires cross-chain collaboration

So far, we’ve discussed how agents can securely collaborate within a single chain through an FHE-enabled Hub. But the AgenticWorld does not exist in isolation. In fact, agents and the smart contracts they rely on are distributed across multiple blockchains.

Whether due to lower gas fees, domain-specific execution requirements, or ecosystem compatibility, agents and Hubs will inevitably be distributed across different chains. In order to collaborate meaningfully, they must interoperate securely, efficiently, and asynchronously.

This brings up the cross-chain issue.

What is the cross-chain problem?

Consider the following scenario:

You use a Hub on BNB Chain to coordinate the agent's strategy.

Another user participates in the same Hub’s logic on MindChain — but their agent is on a different chain.

Both agents want to contribute cryptographic computations, reach consensus, and verify the results — just as if they were on the same network.

The challenge is:

How can we enable agents to contribute to shared tasks across chains without compromising security or requiring full trust in the bridge?

Cross-chain process in AgenticWorld

Two users, on different chains, each control their own agent.

Both users want to contribute to the shared Hub1, which is logically the same task definition but deployed on two chains: BNB Chain and MindChain.

Their agents independently compute and contribute encrypted data.

A cross-chain coordination agent facilitates communication between the two hubs, synchronizing cryptographic states and routing consensus inputs.

Ultimately, both agents receive results that reflect the shared cross-chain logic, even though each agent acted locally.

This makes AgenticWorld truly decentralized - across both the computing and chain layers.

To achieve this we need to:

FHE-encrypted payloads: data stays encrypted, and therefore protected, even when it is passed across chains.

Cross-chain agent layer: a relay or bridge agent that knows how to map task identifiers and verify cross-chain Hub states.

Collaborative consistency: orchestration contracts must be able to interact with corresponding contracts or mirrored states across chains to ensure consistent results.
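The task-identifier mapping done by the relay can be sketched as plain bookkeeping. Everything here is hypothetical: the Hub and CrossChainRelay classes, the task identifiers, and the byte strings standing in for FHE ciphertexts are illustrative only. The sketch shows the mapping and gathering step; it does not verify Hub state or talk to any chain.

```python
from dataclasses import dataclass, field

@dataclass
class Hub:
    chain: str
    # local task id -> list of opaque encrypted payloads
    submissions: dict = field(default_factory=dict)

    def submit(self, local_task_id, ciphertext):
        self.submissions.setdefault(local_task_id, []).append(ciphertext)

@dataclass
class CrossChainRelay:
    # shared task id -> {chain name: local task id}
    task_map: dict = field(default_factory=dict)

    def link(self, shared_task_id, chain, local_task_id):
        self.task_map.setdefault(shared_task_id, {})[chain] = local_task_id

    def gather(self, shared_task_id, hubs):
        """Collect encrypted payloads for one shared task from every linked Hub."""
        payloads = []
        for hub in hubs:
            local_id = self.task_map.get(shared_task_id, {}).get(hub.chain)
            if local_id is not None:
                payloads.extend(hub.submissions.get(local_id, []))
        return payloads

bnb_hub = Hub("BNB Chain")
mind_hub = Hub("MindChain")
relay = CrossChainRelay()
relay.link("hub1-task", "BNB Chain", "bnb-17")
relay.link("hub1-task", "MindChain", "mind-42")

# Placeholder bytes stand in for ciphertexts; the relay never decrypts them.
bnb_hub.submit("bnb-17", b"ciphertext-from-user-a")
mind_hub.submit("mind-42", b"ciphertext-from-user-b")

consensus_inputs = relay.gather("hub1-task", [bnb_hub, mind_hub])
print(len(consensus_inputs))  # 2
```

Because the relay only moves opaque payloads, it needs to be trusted for availability and correct routing, but not for confidentiality.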

This design is specifically intended to provide:

Scalability: Agents can be deployed wherever is cheapest or fastest without compromising global coordination.

Interoperability: The Hub can aggregate insights from multiple ecosystems (DeFi on BNB, computation on MindChain, storage on Filecoin, etc.).

Composability: You can compose workflows of agents and services across chains like building blocks - fully encrypted and fully autonomous.

What you can build with cross-chain design:

Multi-chain agent federations that jointly run a consensus model

Cross-chain verification Hubs with input from different ecosystems

Agent governance through cross-chain FHE voting

Revenue and Rewards in AgenticWorld

An ecosystem without a functioning economy cannot thrive. In AgenticWorld, autonomous agents provide computation, coordination, and intelligence — and they should be rewarded accordingly for their contributions. This section focuses on two core economic mechanisms:

Revenue – for value-added services

Rewards - for fair contribution sharing

Together, these two mechanisms ensure sustainability, incentivize participation, and promote a vibrant agent-driven economy.

Revenue: Value exchange between agents and Hubs

Agents in AgenticWorld often rely on services provided by various Hubs — such as predictive models, data streams, orchestration services, or consensus layers. Many of these Hubs offer paid services and therefore generate revenue streams.

As shown in the figure:

Your agent may pay a fee to use Hub1.

Hub1 may in turn depend on Hub2 and pay for its services.

This payment cascade reflects a modular service economy—agents are both consumers and contributors.

Revenue streams can be:

Fixed Fee

Billing by Task

Subscription

Usage-based billing

Importantly, monetization is up to the Hub owner. Some Hubs may adopt a freemium model, while others may operate like public goods. The architecture is flexible.

Rewards: Distribute value fairly among agents

Rewards are different from revenue: rewards focus on distribution, not payment.

In AgenticWorld, there are two main reward streams:

Protocol-level rewards — distributed by the orchestration contract on MindChain

Hub-level rewards — defined and distributed independently by each Hub

Protocol-level rewards

Since the orchestration contract observes the activity of all agents on all hubs, it can fairly calculate the overall contribution of agents. Mind Network uses this data to distribute $FHE tokens — its native reward token — to contributing agents across the network.

This creates a global benchmark reward for agents participating in AgenticWorld - ensuring that agents are recognized regardless of which Hub they choose.

Hub-level rewards

Each Hub may also distribute additional rewards at its discretion:

Revenue sharing (sharing service revenue with agents)

Hub native tokens (i.e. new tokens launched by the Hub)

Additional $FHE rewards for special or high-impact tasks

This structure allows for a variety of economic models. Some Hubs may be like DAOs, some like SaaS platforms, and others like public resources. The orchestration contract guarantees minimum fairness, while the Hub can add additional incentives.
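As a rough illustration of how the two reward streams could combine, the sketch below splits a protocol-level pool in proportion to each agent's contribution and then adds any Hub-level bonus. The proportional rule, the function names, and the numbers are assumptions made for the example; the source does not specify the actual formula used by the orchestration contract.

```python
from fractions import Fraction

def protocol_rewards(contributions, pool):
    """Split `pool` among agents in proportion to their contribution scores."""
    total = sum(contributions.values())
    if total == 0:
        return {agent: Fraction(0) for agent in contributions}
    return {
        agent: Fraction(pool) * score / total
        for agent, score in contributions.items()
    }

def total_rewards(protocol, hub_bonus):
    """Add Hub-level bonuses on top of the protocol-level baseline."""
    agents = set(protocol) | set(hub_bonus)
    return {a: protocol.get(a, 0) + hub_bonus.get(a, 0) for a in agents}

# Illustrative numbers only.
baseline = protocol_rewards({"agent_a": 3, "agent_b": 1}, pool=100)
combined = total_rewards(baseline, {"agent_b": 5})
print(baseline["agent_a"], baseline["agent_b"])  # 75 25
print(combined["agent_b"])                       # 30
```

Exact fractions are used so that the shares always sum to the pool without rounding drift.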

Flexible and fair incentive model

We intentionally left the economic design open. Users and agents are free to choose a Hub that aligns with their preferences — whether it’s a high-reward system, a low-cost service, or a public welfare Hub.

Mind Network ensures:

Everyone can earn at least a baseline of $FHE based on their contributions, through protocol-level rewards.

Reward flow is transparent and traceable

Hub economies remain flexible and competitive

This dual reward structure promotes composability, fairness, and self-sovereign economic governance within AgenticWorld.

So, bringing all of this together, how do we build an AgenticWorld using Mind?

From a high-level view, let's sketch the architecture of AgenticWorld. Every component has been covered in detail in the previous sections.

Participate in AgenticWorld

AgenticWorld is an open, composable ecosystem designed to welcome contributors from the Web2, Web3, and AI communities. Whether you are a developer, user, partner, or researcher, there are multiple ways to connect to the network and help shape its evolution.

We summarize the main participating roles as follows:

As a developer

Developers are the backbone of AgenticWorld. If you are building software, tools, or autonomous systems, here’s how you can get involved:

Build agents: Use our open source SDK to create agents that can register with the Hub, perform tasks, and interact with the orchestration layer.

Develop Hubs: Design and deploy smart contracts that define agent workflows, whether for prediction markets, task verification, negotiation, or custom agent behavior.

Each new Hub launched expands the usefulness of AgenticWorld and provides new areas for agents to explore.

As a user

You don’t need to be a developer to benefit from AgenticWorld. As an end-user, you can harness the power of autonomous agents with minimal friction:

Own your agents: Subscribe to or deploy agents that act on your behalf. These agents can manage assets, schedule tasks, negotiate for you, or interact with other services.

Train through action: Let your agents learn and evolve by performing tasks in Hubs. Every interaction contributes to the optimization of your agent's strategy, intelligence, and personalization.

AgenticWorld enables everyday users to leverage decentralized AI without having to build models from scratch.

As a partner

If you are part of an existing Web3 protocol, AI tool provider, or computing platform, you can extend your services to AgenticWorld through multiple integration points:

Connect Your Chain: Expand the reach of AgenticWorld by integrating your blockchain with MindChain — enabling cross-chain agent coordination and scaling the agent economy.

Launch your own Hub: Deploy a domain-specific Hub and expose your services (e.g., compute, storage, insights, model reasoning) as on-chain task modules for agents to interact with.

Provide services to other Hubs: Register your agent service as a callable endpoint that other Hubs or agents can use in their workflows.

Consume existing Hub services: Enhance your own workflow by consuming verified cryptographic services in the AgenticWorld network.

Whether you are a Layer-1 protocol, LLM provider, or decentralized computing node, AgenticWorld provides you with access to an intelligent, trustless coordination layer.

As a researcher

AgenticWorld is more than a product - it's an open research frontier at the intersection of AI, cryptography, decentralized systems, game theory, and social coordination. If you're a researcher, there's a deep and diverse set of challenges waiting to be explored - and a growing community ready to collaborate.

Here are ways you can contribute as a researcher:

Advancing agent coordination theory: Helping to define the mathematical and algorithmic foundations of decentralized agent interactions. Topics include agent consensus, incentive alignment, trust metrics, governance, and emergent behavior in multi-agent systems.

Explore FHE, ZKP, and Cryptographic Protocols: Push the boundaries of privacy-preserving computing. Design new circuits, optimize cryptographic primitives, or explore hybrid approaches (e.g., FHE + ZK) for verifiable AI. FHE engineering in particular still needs more research.

Research economic and incentive models: Design and simulate token economics, staking, penalty or reputation mechanisms to ensure agents behave honestly, sustainably and efficiently — both individually and in networks.

Participate in standards and protocol design: Participate in open standards that define agent interfaces, task definitions, consensus algorithms, or agent lifecycle frameworks. Your work may define how the next generation of autonomous agents interact with each other.

Publish, present and collaborate: Share your research findings in academic journals, conferences and open repositories. We support collaborative research, co-authorship and cross-institutional collaboration - providing grants, hackathons and publishing support.

Whether your background is in AI alignment, cryptography, distributed systems, mechanism design, or philosophy of autonomy, AgenticWorld provides a laboratory for real-world, impactful research.

What's next

We've introduced you to the architectural foundations of AgenticWorld, from its philosophical vision to the technical primitives that make it secure, scalable, and interoperable. At the core of all of this is a simple idea:

Agents should be autonomous, collaborative, and trustworthy—without compromising privacy, ownership, or control.

To achieve this goal, we introduced:

FHE-based consensus enables agents to reach agreement without revealing their data

FHE-based verification enables agents to trust results without inspecting internal data

End-to-end encryption ensures data remains private across multi-agent workflows

Cross-chain orchestration, enabling agents to collaborate across fractured ecosystems

Reward and revenue models to ensure agents are sustainably incentivized

Together, these components build a unified, modular, and secure agent collaboration infrastructure.

But this is just the beginning. Here are some of our plans to continue driving growth:

Open standards for Hub and orchestration: We will continue to evolve open specifications for Hub interfaces, orchestration, and agent lifecycle management — so developers can easily plug in without reinventing infrastructure.

Developer Tools and SDKs: We’re building better SDKs, CLI tools, and templates so that Agents and Hubs can be deployed in minutes — not weeks.

Integration with more agents and models: Expect integration with major LLM frameworks, autonomous agent libraries, and decentralized identity standards — connecting AgenticWorld to the real-world applications people are already using.

FHE Optimization and Hardware Acceleration: Mind Network continues to push FHE performance to a production-ready state through compiler improvements, circuit optimizations, and hardware-accelerated backends.

Community and incentivizing experimentation: We will launch grants, hackathons, and bounty programs to reward early builders and researchers to help shape the future of AgenticWorld.

Join Us

If you’re curious about how decentralized intelligent systems can shape the next generation of the internet — we invite you to build it with us.

Because AgenticWorld is more than just a technology stack. It’s more than just a network of agents and contracts.

It’s a new layer of digital civilization. A fabric of trust, where autonomy and intelligence converge. A world where agents serve humans — and each other — without compromise.

Whether you’re an engineer, researcher, creator, or visionary — your contribution matters.

Let's build AgenticWorld together.