Hello Square family 👋

#MavisEvan In my view, Walrus stands out not because it talks about decentralization, but because it engineers trust directly into the system. What truly drew me in was how it handles asynchronous challenges and cryptographic commitments through its Red Stuff protocol.

I’ll explain this the way it finally clicked for me.

The Core Problem With Decentralized Storage

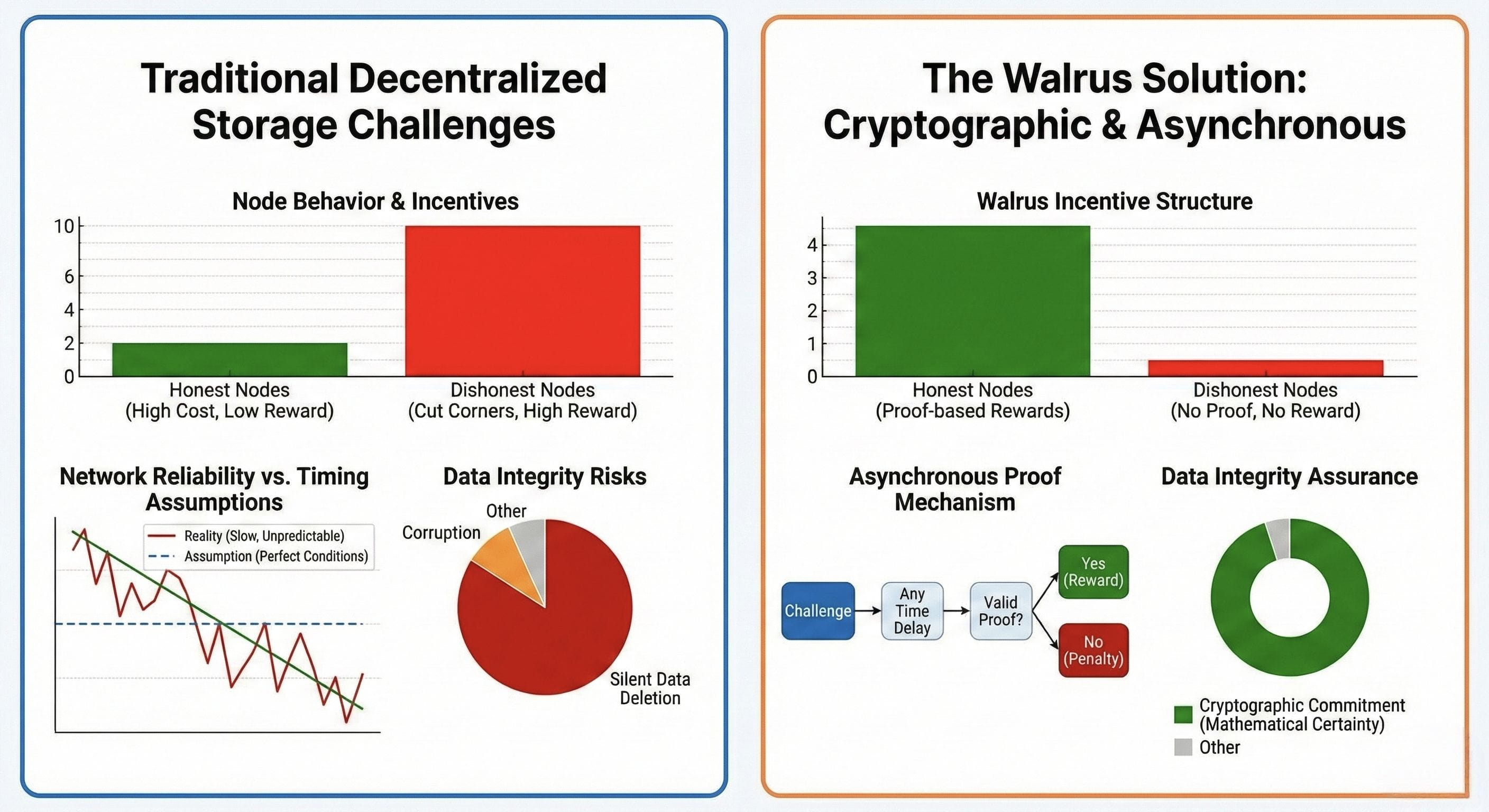

As I dug deeper into decentralized storage systems, one recurring issue kept surfacing: in open networks, you don’t actually know who is honest.

Storage nodes are rewarded for holding data, but storing data has real costs. Over time, some participants are tempted to cut corners: they claim to store files while quietly deleting them to save resources. This isn't a rare edge case; it's one of the biggest threats to any storage network that wants to scale sustainably.

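Many systems attempt to solve this with frequent checks under strict timing assumptions: a node that doesn't answer a challenge before a deadline is treated as having lost the data. But from what I've seen, that approach relies on something unrealistic: predictable, well-behaved network conditions.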

In real decentralized environments, networks are slow, unreliable, and unpredictable. Messages arrive late. Connections drop. Delays are normal. Designing security around strict timing assumptions feels fragile.

Walrus clearly recognizes this reality.

Why Asynchronous Design Changes Everything

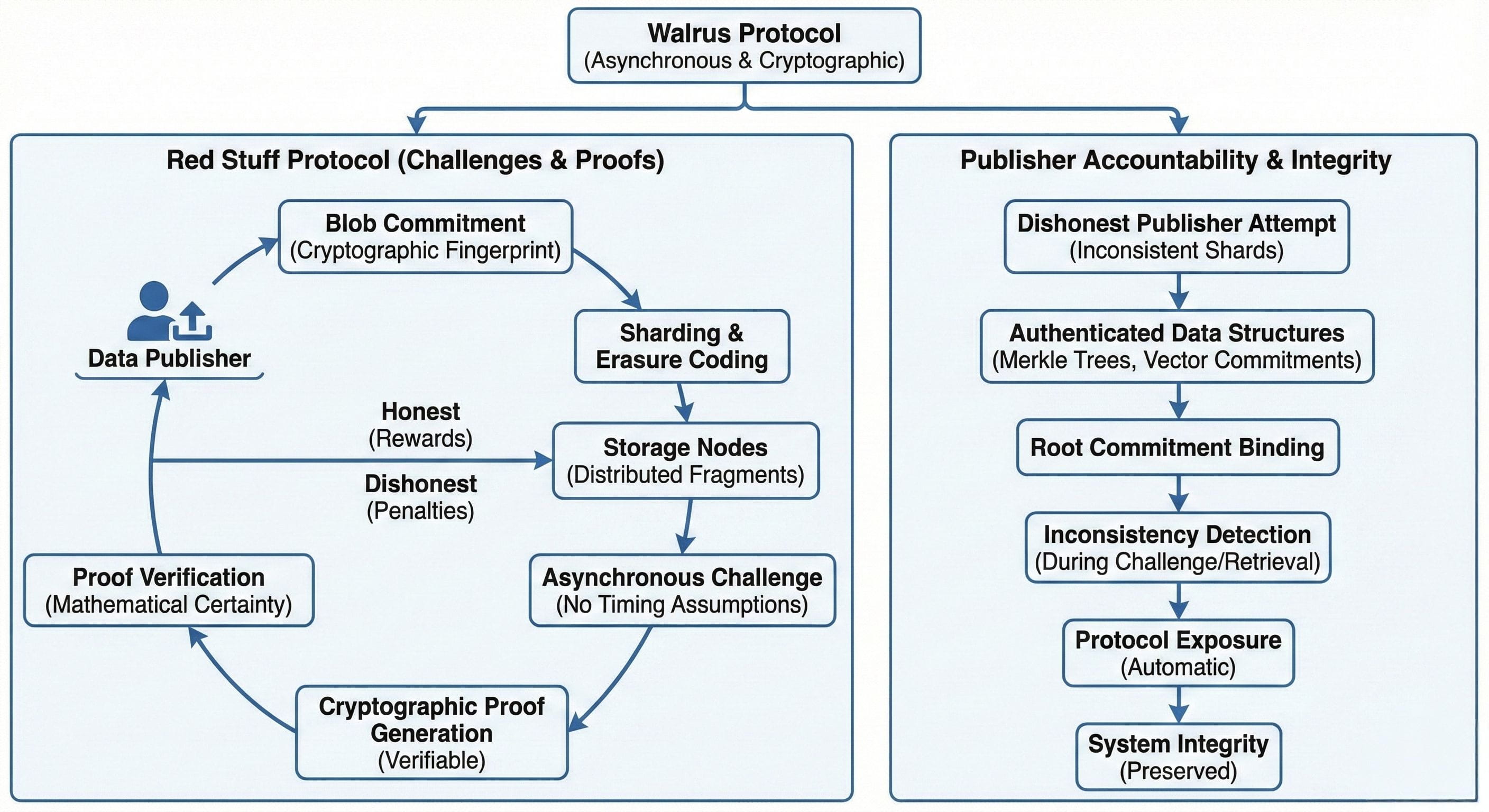

What stood out to me during my research is that Walrus is designed for asynchronous environments from the ground up, meaning networks where there is no guaranteed upper bound on how long a message takes to arrive. This is where the Red Stuff protocol becomes important.

Instead of relying on fast responses or tight deadlines, the system focuses on a single question: is the proof correct or not?

If an attacker tries to manipulate the network by introducing delays, it doesn't help them. The protocol doesn't punish slowness; it punishes incorrect proofs.

In my opinion, this is a powerful design choice. Security is anchored in mathematics rather than timing assumptions, which aligns far better with how decentralized systems actually behave in the real world.
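To make the "correct or not" idea concrete, here is a toy sketch in Python. It is not Walrus's Red Stuff protocol: the node names, fragment contents, and the `respond`/`run_challenge` helpers are hypothetical, and a plain SHA-256 digest per fragment stands in for a real commitment. Each simulated node answers after a random delay, and the verifier has no timeout; it judges each answer only on whether it matches the expected digest.

```python
import asyncio
import hashlib
import random

# Toy model only (hypothetical names, not the Walrus/Red Stuff API): the verifier
# holds the SHA-256 digest of each node's fragment as a stand-in for a commitment.
FRAGMENTS = {"node-a": b"fragment-0", "node-b": b"fragment-1", "node-c": b"fragment-2"}
EXPECTED = {node: hashlib.sha256(data).hexdigest() for node, data in FRAGMENTS.items()}


async def respond(node: str, stored: bytes, delay: float) -> tuple[str, bytes]:
    """Simulate a node answering a challenge after an arbitrary network delay."""
    await asyncio.sleep(delay)
    return node, stored


async def run_challenge(stored_by_node: dict[str, bytes]) -> dict[str, bool]:
    """Judge each response only on whether it matches the expected digest.

    There is deliberately no deadline: responses are processed in whatever
    order they arrive, and arrival time never affects the verdict.
    """
    tasks = [
        asyncio.create_task(respond(node, data, random.uniform(0.0, 0.05)))
        for node, data in stored_by_node.items()
    ]
    verdicts: dict[str, bool] = {}
    for next_done in asyncio.as_completed(tasks):
        node, data = await next_done
        verdicts[node] = hashlib.sha256(data).hexdigest() == EXPECTED[node]
    return verdicts


if __name__ == "__main__":
    stored = dict(FRAGMENTS)
    stored["node-b"] = b""  # node-b quietly deleted its fragment
    print(sorted(asyncio.run(run_challenge(stored)).items()))
    # [('node-a', True), ('node-b', False), ('node-c', True)]
```

This toy waits for every node to answer, so it does not model a node that never responds at all; a real asynchronous protocol needs its own mechanism for that case, which this sketch leaves out.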

Blob Commitments: Removing Ambiguity and Excuses

When data is uploaded to Walrus, the publisher creates what’s called a blob commitment. The simplest way to think about this is as a cryptographic fingerprint of the entire dataset.

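No matter how large the file is, this commitment is a small, fixed-size value that binds the entire contents: change a single byte of the data and the commitment changes.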

Each storage node only holds a fragment of the data, but when challenged, it must prove that its fragment corresponds to that original commitment. There’s no room for ambiguity. Either the fragment matches the commitment, or it doesn’t.
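A minimal sketch of a commitment plus a per-fragment proof, assuming a plain binary Merkle tree over SHA-256. Walrus's actual commitment scheme around Red Stuff is more involved; `commit`, `prove`, and `verify` are hypothetical helpers for illustration only. The root is always 32 bytes however large the blob is, and a node that no longer holds its fragment cannot realistically produce bytes that hash up to that root.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(fragments: list[bytes]) -> list[list[bytes]]:
    """All tree levels, from leaf hashes (index 0) up to the single root."""
    level = [_h(b"\x00" + f) for f in fragments]  # 0x00 prefix marks a leaf
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # odd count: pair the last node with itself
        level = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def commit(fragments: list[bytes]) -> bytes:
    """Blob-commitment stand-in: a 32-byte root, whatever the blob size."""
    return merkle_levels(fragments)[-1][0]


def prove(fragments: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says whether our node is the left child."""
    path = []
    for level in merkle_levels(fragments)[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # matches the self-pairing used in merkle_levels
        path.append((level[sibling], index % 2 == 0))
        index //= 2
    return path


def verify(root: bytes, fragment: bytes, path: list[tuple[bytes, bool]]) -> bool:
    """What a challenger checks: does this fragment hash up to the committed root?"""
    node = _h(b"\x00" + fragment)
    for sibling, is_left in path:
        node = _h(b"\x01" + node + sibling) if is_left else _h(b"\x01" + sibling + node)
    return node == root


if __name__ == "__main__":
    fragments = [f"fragment-{i}".encode() for i in range(5)]
    root = commit(fragments)
    print(verify(root, fragments[3], prove(fragments, 3)))  # True
    print(verify(root, b"made-up bytes", prove(fragments, 3)))  # False
```

Only the root needs to be shared up front; each proof is a handful of 32-byte hashes, so checking one fragment does not require the rest of the blob.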

What impressed me most is how this removes excuses entirely.

A node can’t claim the network was slow or the challenge arrived late. If it deleted the data, it simply cannot produce a valid proof. Over time, this behavior becomes economically irrational. Dishonest nodes lose rewards, while honest nodes continue to earn.

The system filters itself.
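The "economically irrational" point can be checked with a toy expected-value model. The parameters (reward, storage cost, challenge probability, penalty) and both functions are hypothetical; the post doesn't specify Walrus's actual reward or penalty rules. The model assumes a node that deleted its data is caught whenever it is challenged, matching the point above that it cannot produce a valid proof.

```python
def expected_profit(reward: float, storage_cost: float, challenge_prob: float,
                    penalty: float, honest: bool) -> float:
    """Expected profit per period for one node in a toy challenge model.

    Honest: always earns the reward and always pays the storage cost.
    Deleter: saves the storage cost, but a challenge arriving with
    probability challenge_prob exposes it, costing the reward plus a penalty.
    """
    if honest:
        return reward - storage_cost
    return (1 - challenge_prob) * reward - challenge_prob * penalty


def break_even_challenge_prob(reward: float, storage_cost: float, penalty: float) -> float:
    """Challenge probability above which deleting data stops paying off.

    Solves (1 - p) * reward - p * penalty = reward - storage_cost for p,
    giving p = storage_cost / (reward + penalty).
    """
    return storage_cost / (reward + penalty)


if __name__ == "__main__":
    # Hypothetical parameters, chosen only for illustration.
    print(expected_profit(10, 3, 0.5, 20, honest=True))   # 7
    print(expected_profit(10, 3, 0.5, 20, honest=False))  # -5.0
    print(break_even_challenge_prob(10, 3, 20))           # 0.1
```

With these made-up numbers, an honest node expects 7 per period while a deleter expects -5. The break-even probability of 3 / (10 + 20) = 0.1 suggests why even infrequent challenges can be enough once the penalty is large relative to the storage saved.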

Publishers Are Accountable Too

Another detail I appreciated is that Walrus doesn’t only defend against dishonest storage nodes. It also anticipates malicious publishers, which is something many designs overlook.

A dishonest publisher might attempt to send inconsistent shards to different nodes, effectively creating multiple versions of the same dataset. If unchecked, this quietly undermines reliability.

Walrus addresses this using authenticated data structures such as Merkle trees and vector commitments. All shards are cryptographically bound to a single root commitment.

If a publisher tries to cheat by distributing conflicting data, those inconsistencies surface naturally during challenges or retrieval. No human intervention is needed. The protocol exposes the problem on its own.

From an engineering perspective, this is a clean and robust solution.
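Here is a simplified sketch of how a single root exposes a publisher who hands out conflicting shards. Instead of a Merkle tree or a true vector commitment, it commits to a flat list of SHA-256 shard hashes (so every node receives the whole list, which real schemes avoid). `publish` and `node_accepts` are hypothetical names, and the root is assumed to be published somewhere every node can read it. The same check works whether it runs on receipt, during a challenge, or when a reader retrieves a shard.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def publish(shards: list[bytes]) -> tuple[bytes, list[bytes]]:
    """Publisher side: return the single root plus the per-shard hash list."""
    shard_hashes = [h(s) for s in shards]
    # Every entry is exactly 32 bytes, so plain concatenation is unambiguous.
    root = h(b"".join(shard_hashes))
    return root, shard_hashes


def node_accepts(root: bytes, shard_hashes: list[bytes], index: int, shard: bytes) -> bool:
    """Storage-node side: accept a shard only if it is bound to the published root."""
    if h(b"".join(shard_hashes)) != root:
        return False  # this hash list is not the one the root commits to
    return h(shard) == shard_hashes[index]


if __name__ == "__main__":
    shards = [b"shard-0", b"shard-1", b"shard-2"]
    root, hashes = publish(shards)
    # Honest delivery: every node accepts its shard.
    print([node_accepts(root, hashes, i, s) for i, s in enumerate(shards)])  # [True, True, True]
    # A cheating publisher gives node 1 a conflicting shard.
    forged = b"conflicting-shard-1"
    print(node_accepts(root, hashes, 1, forged))  # False
    # Forging a matching hash list doesn't help: it no longer hashes to the published root.
    _, forged_hashes = publish([shards[0], forged, shards[2]])
    print(node_accepts(root, forged_hashes, 1, forged))  # False
```

The key design point is that there is only one root. A publisher can build a second, internally consistent version of the dataset, but it produces a different root, so shards from that version fail the check against the one everyone agreed on.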

Trust Enforced by Structure, Not Assumptions

What I personally like most about Walrus is that it doesn’t rely on social trust, governance committees, or subjective judgment. There’s no need to believe participants are acting honestly.

The rules are enforced by cryptography and incentives.

If someone breaks the rules, the system proves it. Penalties follow automatically. Everything remains neutral, verifiable, and predictable.

My Overall Take

After researching Walrus in depth, my takeaway is simple: this system is built for long-term resilience.

It accepts that decentralized networks operate under hostile and asynchronous conditions. Instead of fighting that reality, it designs around it. Honesty isn't left to hope; it's enforced by structure.

From data ingestion to storage to retrieval, integrity is preserved without relying on timing tricks or fragile assumptions. In my view, this is exactly the kind of design decentralized infrastructure needs if it’s going to move beyond experimentation and into serious, real-world usage.

That’s my professional take, shared the way I understand it. I hope it adds value to the discussion here.