@APRO Oracle: For years, “oracle” has meant one thing in crypto: price feeds pushed on-chain by a set of trusted nodes. This model powered DeFi’s first wave, but it was never designed for what blockchains are trying to do now, which is to interact with messy, probabilistic, real-world data at scale.

As AI agents, autonomous finance, and real-world integrations expand, the limits of traditional oracle design are starting to show. Not loudly. Quietly. Structurally.

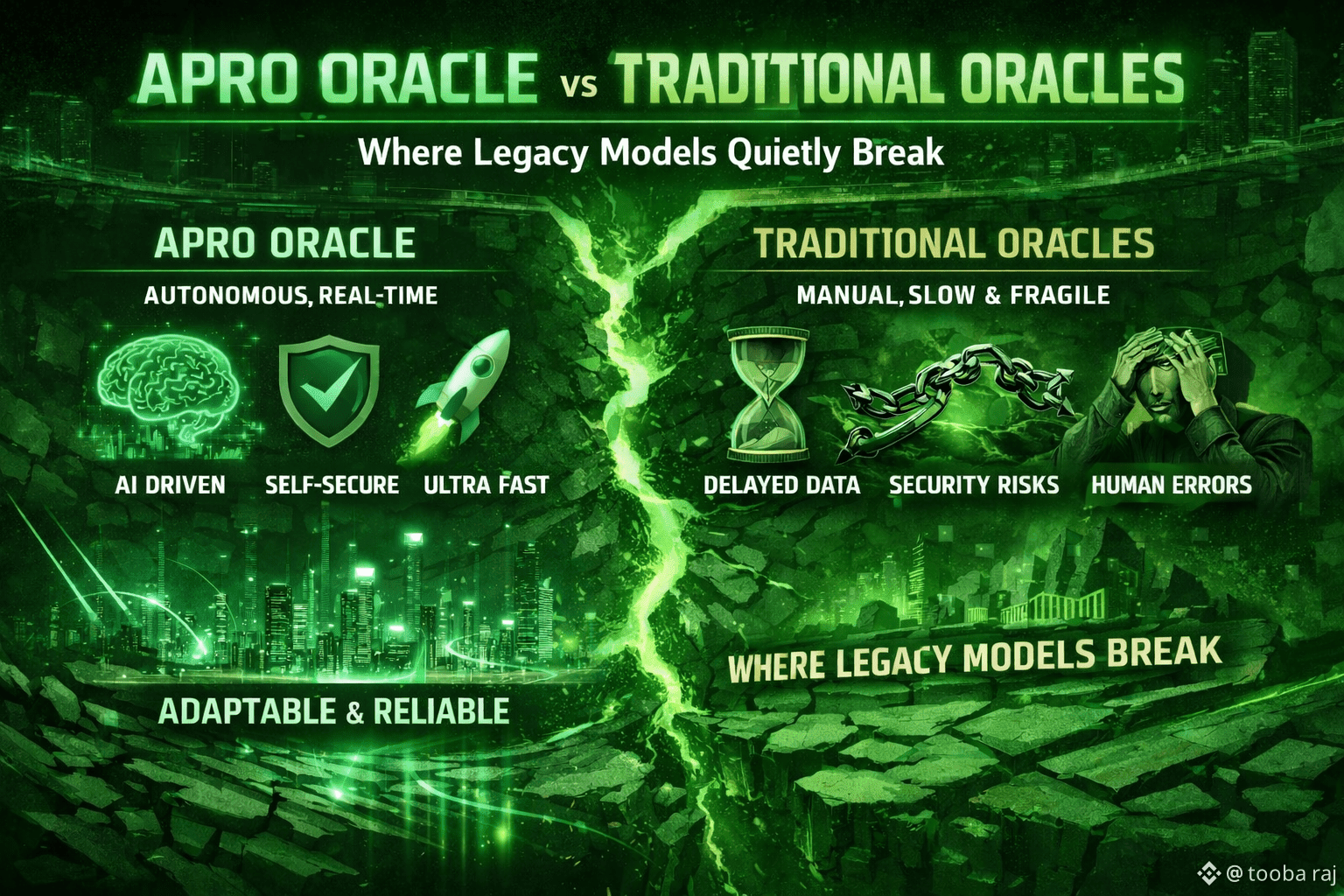

APRO doesn’t compete by being faster or cheaper in isolation. It challenges assumptions baked into legacy oracle architecture — assumptions that worked when data was simple, static, and financial-only.

Let’s unpack where traditional oracles break, and why APRO’s design signals a different direction.

1. Traditional Oracles Assume Data Is Deterministic

Reality: most real-world data isn’t

Legacy oracles were built around a clean premise:

“There exists one correct value, and we just need to report it securely.”

This works for:

token prices

FX rates

simple numerical feeds

But it breaks down when data becomes:

probabilistic

noisy

contextual

AI-generated

event-based

subjective or multi-source

Examples:

Was a shipment actually delivered?

Did an AI model behave according to policy?

Is off-chain computation valid?

Did a real-world condition meaningfully occur?

Traditional oracles struggle because they are value broadcasters, not truth evaluators.

APRO reframes this: instead of assuming a single “truth,” it treats data as something that must be verified through process, not just fetched.
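Here is one way to picture a “truth evaluator.” The minimal Python sketch below resolves a yes/no claim such as “was the shipment delivered?” from several independent sources. It returns a verdict with a confidence score and declines to decide when agreement is weak, instead of broadcasting a single value. This is not APRO’s actual logic. The `Evidence` schema, the reliability weights, and the 0.75 threshold are hypothetical choices made only for illustration.

```python
from dataclasses import dataclass
from typing import Literal, Sequence

Verdict = Literal["true", "false", "unresolved"]


@dataclass(frozen=True)
class Evidence:
    """One source's report on a yes/no claim (hypothetical schema)."""
    source: str
    says_true: bool
    weight: float  # assumed reliability weight, e.g. in [0, 1]


@dataclass(frozen=True)
class Evaluation:
    verdict: Verdict
    confidence: float  # share of total weight behind the verdict


def evaluate_claim(evidence: Sequence[Evidence], threshold: float = 0.75) -> Evaluation:
    """Resolve a claim such as "the shipment was delivered" from many sources.

    Returns a verdict plus a confidence, and reports "unresolved" when neither
    side reaches `threshold` of the total weight (hypothetical policy).
    """
    total = sum(e.weight for e in evidence)
    if total <= 0:
        return Evaluation("unresolved", 0.0)
    share_true = sum(e.weight for e in evidence if e.says_true) / total
    share_false = 1.0 - share_true
    if share_true >= threshold:
        return Evaluation("true", share_true)
    if share_false >= threshold:
        return Evaluation("false", share_false)
    # Sources disagree too much: refuse to publish a single "truth".
    return Evaluation("unresolved", max(share_true, share_false))


reports = [
    Evidence("carrier_api", True, 0.9),
    Evidence("gps_tracker", True, 0.8),
    Evidence("recipient_signature", False, 0.3),
]
print(evaluate_claim(reports))
```

In this example, two sources with weights 0.9 and 0.8 say “delivered” and one source with weight 0.3 disagrees. The claim resolves to true with a confidence of about 0.85. Two equally weighted sources that contradict each other would leave it unresolved.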

2. Legacy Oracles Centralize Trust Behind “Decentralization”

Most traditional oracle networks claim decentralization, but in practice rely on:

fixed node sets

reputation-based whitelists

static aggregation logic

uniform data pipelines

This creates a quiet centralization layer:

same providers

same APIs

same incentives

same failure modes

If those upstream sources fail or bias their output, decentralization at the node layer doesn’t fully help.

APRO introduces a more modular trust surface:

heterogeneous nodes

hybrid verification roles

separation between data sourcing, validation, and attestation

Instead of asking “who publishes the data?”, APRO asks:

“How do we prove that the data was produced and verified correctly?”

That shift matters as oracles move beyond prices.
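The split between sourcing, validation, and attestation can be pictured as three separate functions, each of which could run on a different kind of node. The Python sketch below is an assumption-laden illustration, not APRO’s protocol. The role names and the median-plus-spread check are hypothetical. So is the HMAC tag, which stands in for a real digital signature; an on-chain verifier would actually need the signature.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Attestation:
    payload: str  # canonical JSON of the accepted value plus its raw inputs
    tag: str      # HMAC-SHA256 tag: a stand-in for a real signature scheme


def source(readings: dict[str, float]) -> dict[str, float]:
    """Sourcing role: collect raw readings keyed by provider (hypothetical)."""
    return dict(readings)


def validate(raw: dict[str, float], max_spread: float) -> float:
    """Validation role: accept the median only if no provider strays too far."""
    if not raw:
        raise ValueError("no readings to validate")
    mid = median(raw.values())
    outliers = [p for p, v in raw.items() if abs(v - mid) > max_spread]
    if outliers:
        raise ValueError(f"providers out of range: {outliers}")
    return mid


def attest(value: float, raw: dict[str, float], key: bytes) -> Attestation:
    """Attestation role: bind the value to the exact inputs it came from."""
    payload = json.dumps({"value": value, "inputs": raw}, sort_keys=True)
    tag = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return Attestation(payload, tag)


def verify(att: Attestation, key: bytes) -> bool:
    """Check that neither the value nor its provenance was altered."""
    expected = hmac.new(key, att.payload.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, att.tag)


key = b"demo-key"  # hypothetical shared secret, for illustration only
raw = source({"provider_a": 100.0, "provider_b": 101.0, "provider_c": 99.5})
att = attest(validate(raw, max_spread=5.0), raw, key)
print(verify(att, key))  # True
```

The attestation covers both the accepted value and the raw inputs behind it. A verifier can therefore check how the number was produced, not just who published it.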

3. Static Aggregation Fails in Dynamic Environments

Traditional oracles aggregate via fixed rules:

median

weighted average

threshold consensus

These rules assume stable conditions. But real-world data environments change constantly:

source reliability fluctuates

latency varies

adversarial behavior evolves

AI outputs differ per context

Static aggregation can’t adapt.
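The three fixed rules listed above are easy to write down, and that is exactly the point: the logic is chosen once and applied the same way every round. A minimal Python sketch of each follows. The function names and the `tolerance` and `quorum` parameters are illustrative and are not taken from any specific oracle network.

```python
from statistics import median
from typing import Optional


def agg_median(values: list[float]) -> float:
    """Median: ignores a minority of extreme reports."""
    return median(values)


def agg_weighted_average(values: list[float], weights: list[float]) -> float:
    """Weighted average with weights fixed ahead of time."""
    if not values or len(values) != len(weights):
        raise ValueError("need equal-length, non-empty values and weights")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(v * w for v, w in zip(values, weights)) / total


def agg_threshold(values: list[float], tolerance: float, quorum: int) -> Optional[float]:
    """Threshold consensus: publish the median only if at least `quorum`
    reports sit within `tolerance` of it; otherwise publish nothing."""
    mid = median(values)
    agreeing = [v for v in values if abs(v - mid) <= tolerance]
    return mid if len(agreeing) >= quorum else None


reports = [100.0, 100.2, 99.9, 250.0]  # one faulty or malicious report
print(agg_median(reports))
print(agg_weighted_average(reports, [1.0, 1.0, 1.0, 1.0]))
print(agg_threshold(reports, tolerance=1.0, quorum=3))
```

Take one bad report of 250.0 among readings near 100. The median stays at about 100.1, while the equal-weight average is dragged to about 137.5. Whether the threshold rule publishes anything depends entirely on a quorum that was fixed in advance.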

APRO introduces adaptive verification logic, where:

validation methods can change per task

multiple verification paths coexist

confidence emerges from process, not just numbers

This allows oracle behavior to scale across use cases instead of forcing everything into a price-feed-shaped box.
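Adaptive verification can take many forms. One simple, well-known version is reliability-weighted aggregation. Each source’s influence shrinks or grows based on how far its recent reports strayed from consensus. The Python sketch below assumes that approach. The exponential moving average, `alpha`, and inverse-error weighting are hypothetical choices, not a description of APRO’s implementation.

```python
from statistics import median


class AdaptiveAggregator:
    """Reliability-weighted aggregation whose weights change over time.

    Hypothetical sketch: each source's running error is tracked with an
    exponential moving average, and its weight is the inverse of that error.
    """

    def __init__(self, sources: list[str], alpha: float = 0.3, eps: float = 1e-6):
        self.alpha = alpha  # how quickly reliability estimates react
        self.eps = eps      # avoids division by zero for a perfect source
        self.error = {s: 1.0 for s in sources}  # every source starts equal

    def weight(self, source: str) -> float:
        return 1.0 / (self.eps + self.error[source])

    def aggregate(self, reports: dict[str, float]) -> float:
        """Combine reports using current weights, then update each source's
        error against a robust reference (the median of this round)."""
        total = sum(self.weight(s) for s in reports)
        value = sum(v * self.weight(s) for s, v in reports.items()) / total
        reference = median(reports.values())
        for s, v in reports.items():
            deviation = abs(v - reference)
            self.error[s] = (1 - self.alpha) * self.error[s] + self.alpha * deviation
        return value


agg = AdaptiveAggregator(["a", "b", "c"])
for _ in range(5):
    print(round(agg.aggregate({"a": 100.0, "b": 100.0, "c": 110.0}), 3))
```

Suppose one source keeps reporting 110 while two others report 100. The first round averages to roughly 103.3. After a few rounds the outlier’s weight collapses and the result converges toward 100. A static weighted average would keep giving that source the same say forever.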

4. Off-Chain Computation Is a Blind Spot for Legacy Oracles

As protocols increasingly rely on:

AI inference

off-chain computation

complex simulations

external workflows

traditional oracles hit a wall. They can report results, but cannot prove how those results were produced.

This creates a trust gap:

Was computation manipulated?

Was the model altered?

Was inference reproducible?

APRO directly addresses this with verifiable off-chain workflows, where computation steps themselves become auditable artifacts.

Instead of trusting outcomes, systems can verify execution integrity.

This is a foundational shift — from data delivery to computation verification.
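A common building block for auditable computation is a hash-chained execution trace. Each step’s name, inputs, and output are hashed together with the previous step’s hash, so publishing only the final hash commits to the whole history. The Python sketch below assumes that design for illustration. It is not APRO’s workflow format, and `Trace`, `step_hash`, and `verify_trace` are hypothetical names.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # starting hash before any step is recorded


def step_hash(prev: str, name: str, inputs: object, output: object) -> str:
    """Hash one step together with the hash of the step before it."""
    record = json.dumps({"prev": prev, "name": name, "in": inputs, "out": output}, sort_keys=True)
    return hashlib.sha256(record.encode()).hexdigest()


@dataclass
class Trace:
    steps: list[tuple[str, object, object]] = field(default_factory=list)
    head: str = GENESIS

    def record(self, name: str, inputs: object, output: object) -> object:
        """Append a step, advance the chain head, and pass the output through."""
        self.steps.append((name, inputs, output))
        self.head = step_hash(self.head, name, inputs, output)
        return output


def verify_trace(steps: list[tuple[str, object, object]], claimed_head: str) -> bool:
    """Recompute the chain; any edited, dropped, or reordered step changes the head."""
    h = GENESIS
    for name, inputs, output in steps:
        h = step_hash(h, name, inputs, output)
    return h == claimed_head


trace = Trace()
prices = trace.record("fetch", {"pair": "ETH/USD"}, [3001.0, 2999.5, 3000.2])
mean = trace.record("mean", prices, round(sum(prices) / len(prices), 2))
print(trace.head)  # assumed to be the only value that needs publishing
print(verify_trace(trace.steps, trace.head))  # True
```

This technique has a limit. The chain makes the recorded steps tamper-evident, but it does not by itself prove that each output was computed correctly. A verifier still has to re-execute the steps or rely on a separate proof system for that.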

5. Legacy Oracles Are Price Infrastructure — Not Intelligence Infrastructure

Most oracle networks were designed during DeFi’s first wave, when the dominant need was pricing collateral.

But today’s stack is different:

autonomous agents

AI-driven protocols

real-world coordination

conditional execution

dynamic policy enforcement

These systems don’t just need numbers. They need:

reasoning checkpoints

randomness validation

behavioral proofs

multi-source consensus

auditability

APRO positions itself as infrastructure for machine-to-machine trust, not just DeFi price updates.

That’s why it emphasizes:

randomness verification

AI verification boundaries

hybrid nodes

off-chain/on-chain coherence

It’s closer to a truth engine than a feed publisher.
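Randomness verification is a good example of trust that has to come from process rather than from a published number. A classic scheme is commit-reveal. Participants first publish hashes of secret seeds, then reveal the seeds, and the final value mixes all of them. The Python sketch below illustrates that generic scheme under stated assumptions: SHA-256 commitments and fixed 32-byte seeds. It is not APRO’s mechanism, and many production systems use verifiable random functions (VRFs) instead.

```python
import hashlib
import secrets


def new_seed() -> bytes:
    """Each participant draws a fixed-length 32-byte secret seed."""
    return secrets.token_bytes(32)


def commit(seed: bytes) -> str:
    """Phase 1: publish only the SHA-256 hash of the secret seed."""
    return hashlib.sha256(seed).hexdigest()


def combine(commitments: dict[str, str], reveals: dict[str, bytes]) -> int:
    """Phase 2: check each reveal against its commitment, then mix all seeds.

    Raises if a participant fails to reveal or reveals a different seed,
    so nobody can swap their contribution after seeing the others.
    """
    if commitments.keys() != reveals.keys():
        raise ValueError("every committed participant must reveal")
    mixer = hashlib.sha256()
    for name in sorted(commitments):  # fixed order so everyone gets the same result
        seed = reveals[name]
        if commit(seed) != commitments[name]:
            raise ValueError(f"reveal from {name!r} does not match its commitment")
        mixer.update(seed)  # fixed-length seeds keep plain concatenation unambiguous
    return int.from_bytes(mixer.digest(), "big")


seeds = {name: new_seed() for name in ("node_a", "node_b", "node_c")}
commitments = {name: commit(seed) for name, seed in seeds.items()}
print(combine(commitments, seeds))
```

Commit-reveal has a known weakness. The last participant to reveal can see the others’ seeds and choose to withhold their own. Real deployments therefore usually add penalties for not revealing, or switch to VRFs.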

6. The Quiet Break: Legacy Oracles Still Work — Until They Don’t

This is what makes the shift subtle.

Traditional oracles won’t suddenly fail. They’ll keep serving prices just fine.

But cracks appear when protocols try to:

scale into AI-native systems

automate real-world actions

rely on off-chain reasoning

verify complex events

reduce blind trust

At that point, developers start layering patches:

extra validators

custom verification logic

ad hoc committees

APRO’s thesis is simple:

those patches should be first-class infrastructure, not hacks.

Final Thought: From Feeds to Frameworks

Legacy oracles solved an early problem beautifully: getting prices on-chain.

APRO is responding to a newer one:

How do blockchains reason about the real world without blindly trusting it?

That’s the real divide.

Not speed.

Not cost.

Not node count.

But philosophy.

Traditional oracles deliver data.

APRO builds verifiable truth pipelines.

And as crypto shifts from financial primitives to autonomous systems, that difference quietly becomes foundational.