I've been following the 0G project for about half a year now, and today I finally have time to give everyone a detailed review: how @0G_labs is trying to move the future of artificial intelligence from centralized control to decentralized control. Let's go through it all at once!

I recently happened to discuss AI with a friend, who said, “Today’s AI is increasingly like a ‘private toy’ of large companies,” and I think there is some truth to that. The chatbots and image generation tools we use daily are basically run by a few tech giants, and the data is in their hands. No one outside knows how the algorithms actually work, and if something goes wrong, no one can explain why. But if I told you that future AI might be ‘decided by everyone,’ would you believe it?

The first thing that came to mind was the 0G project, which is currently trying to turn this ‘if’ into reality.

One: Why pursue ‘decentralized AI’?

First, let’s talk about how ‘crowded’ the current AI landscape is. The short-video recommendations you scroll through daily and the ‘you may also like’ sections in shopping apps are all powered by AI models from a few big companies, but these models are like ‘sealed jars’:

Your data is taken for training, but was it misused? Unknown;

Why does the AI recommend these things to you? The algorithms are hidden, so no one can say;

What if one day the servers of big companies crash or services are stopped for some reason? Those applications that depend on them might all go down.

This is the headache of centralized AI: a few companies hold control, while everyone else can only go along with it and still bear the risks of data leaks and service interruptions.

What decentralized AI aims to do is to dismantle this ‘sealed jar’ and allow everyone to participate:

Anyone can understand how AI is trained and operates, with algorithms that can be clearly verified;

What data was used and how it was used are all recorded on-chain, allowing for clear traceability;

Even if a certain node has issues, other nodes can continue to operate, preventing a complete collapse.

In short, it is about transforming AI from the ‘reserved area of big companies’ into ‘public resources for everyone’ — transparent, decided by everyone, and more resilient.

Two: How does 0G achieve this? Modularization is key.

To decentralize AI, 0G relies on a ‘modular’ architecture — breaking down complex systems into Lego blocks, where each part can be upgraded independently and combined efficiently.

This makes compliance especially flexible, since different countries have different rules:

If a region strictly regulates data storage, the modules that store and serve data (Storage and DA) can be placed on compliant local servers;

If a country restricts overseas computing power, companies can handle their business with local computing modules only, adding other modules as needed;

If some places ban tokens but welcome distributed computing, they can remove the token-related modules and keep compliant parts like computing scheduling and data encryption.

It’s like building blocks: non-compliant parts are set aside for now, while compliant ones can still be used, and they can be added back when rules change.
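To make the ‘building blocks’ idea concrete, here is a minimal Python sketch of deciding which modules to run, and where, for a given jurisdiction. The module names, the `Jurisdiction` fields, and `plan_deployment` are all hypothetical illustrations of the three cases above, not 0G's actual configuration or API.

```python
from dataclasses import dataclass

# Hypothetical module names, chosen to mirror the post; not 0G's real component IDs.
ALL_MODULES = {"chain", "storage", "da", "compute", "scheduler", "encryption", "token"}


@dataclass(frozen=True)
class Jurisdiction:
    """Hypothetical per-region rules matching the three cases described above."""
    name: str
    local_data_only: bool = False     # data must stay on local, compliant servers
    local_compute_only: bool = False  # overseas computing power is restricted
    tokens_banned: bool = False       # token-related modules are not allowed


def plan_deployment(j: Jurisdiction) -> dict[str, str]:
    """Return {module: 'local' | 'global'} for every module allowed in j."""
    plan: dict[str, str] = {}
    for module in sorted(ALL_MODULES):
        if module == "token" and j.tokens_banned:
            continue  # set this block aside; re-enable it if the rules change
        if module in {"storage", "da"} and j.local_data_only:
            plan[module] = "local"
        elif module == "compute" and j.local_compute_only:
            plan[module] = "local"
        else:
            plan[module] = "global"
    return plan


if __name__ == "__main__":
    strict = Jurisdiction("example-region", local_data_only=True, tokens_banned=True)
    print(plan_deployment(strict))
```

For a region that requires local data storage and bans tokens, the plan drops the token module and pins Storage and DA to local servers while everything else stays global. Keeping the rules as data rather than code is what lets a block be ‘added back’ by flipping a flag when the rules change.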

Three: Let’s look at the core differences between centralized and decentralized AI.

Centralized AI is like an ‘all-in-one machine’: fast and stable, but if you want to replace a part or add a function, you have to take the whole machine apart, which is quite troublesome. Moreover, the data is sealed inside the machine and invisible from outside, so when it occasionally produces ‘nonsense’ (what people call ‘AI hallucination’), no one can verify its reasoning process.

Decentralized AI is more like ‘building blocks’: the parts (modules) are public, anyone can swap or add them, and data and reasoning processes are recorded on-chain for verification, which helps catch and reduce ‘hallucinations.’ But the drawbacks are also evident: coordinating so many parts may slow things down, and if the ‘interfaces’ between different blocks are not standardized, the result could be chaos.

Four: What are the ‘blocks’ of 0G?

0G has broken the entire system down into several key modules, each with clear tasks:

1) 0G Chain: This is the base chain, compatible with existing decentralized applications (dApps); core functions like execution and consensus can be upgraded independently without touching the rest of the chain;

2) 0G Storage: This is a decentralized storage network capable of holding vast amounts of data, using special encoding (erasure coding) and verification mechanisms to ensure data is neither lost nor left unusable;

3) Data Availability Layer (DA): This checks that published data is actually available to anyone who needs it, by having randomly selected nodes verify it; this keeps it reliable while allowing virtually unlimited scaling;

4) 0G Serving Architecture: This handles inference and training for AI models, and provides developers with a toolkit (SDK) so they can use AI functions directly without building complex infrastructure themselves;

5) Alignment Nodes: These oversee the entire system to ensure that AI behavior aligns with ethical standards, and the management of these nodes is also decentralized, not subject to the say-so of any individual.
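Why does picking verifier nodes at random help? A rough way to see it: if a committee is drawn uniformly at random from a large pool, an attacker who controls only a minority of nodes is very unlikely to land enough seats to fake a verification. The Python sketch below computes that probability with the hypergeometric distribution. It is a generic textbook argument under assumed numbers, not 0G's actual DA protocol; both function names are hypothetical, and the seeded PRNG in `sample_committee` is only a stand-in for whatever verifiable randomness a real network uses.

```python
import math
import random


def committee_capture_probability(n_nodes: int, n_bad: int, k: int, threshold: int) -> float:
    """Probability that a committee of k nodes, drawn uniformly without
    replacement from n_nodes (n_bad of them dishonest), contains at least
    `threshold` dishonest members: the upper tail of a hypergeometric
    distribution."""
    favourable = sum(
        math.comb(n_bad, bad) * math.comb(n_nodes - n_bad, k - bad)
        for bad in range(threshold, min(k, n_bad) + 1)
    )
    # Exact integer arithmetic, converted to a float only at the end.
    return favourable / math.comb(n_nodes, k)


def sample_committee(node_ids, k: int, seed: int) -> list:
    """Pick k distinct nodes at random. The seeded PRNG is a placeholder for
    the verifiable randomness a real network would need."""
    rng = random.Random(seed)
    return rng.sample(list(node_ids), k)


if __name__ == "__main__":
    # Assumed numbers: 1,000 nodes, 200 dishonest, committee of 100, 67 seats to cheat.
    print(committee_capture_probability(1000, 200, 100, 67))
    print(sample_committee(range(1000), 5, seed=42))
```

With those assumed numbers the capture probability is vanishingly small, and growing the committee pushes it down further; that is the basic trade-off between verification cost and security that random sampling exploits.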

The advantage of this architecture is its ‘flexibility.’ For instance, if more storage capacity is needed, the Storage module can be upgraded; if faster inference is required, the Serving architecture can be optimized, all without altering the other parts. This also lowers the barrier to entry for developers.

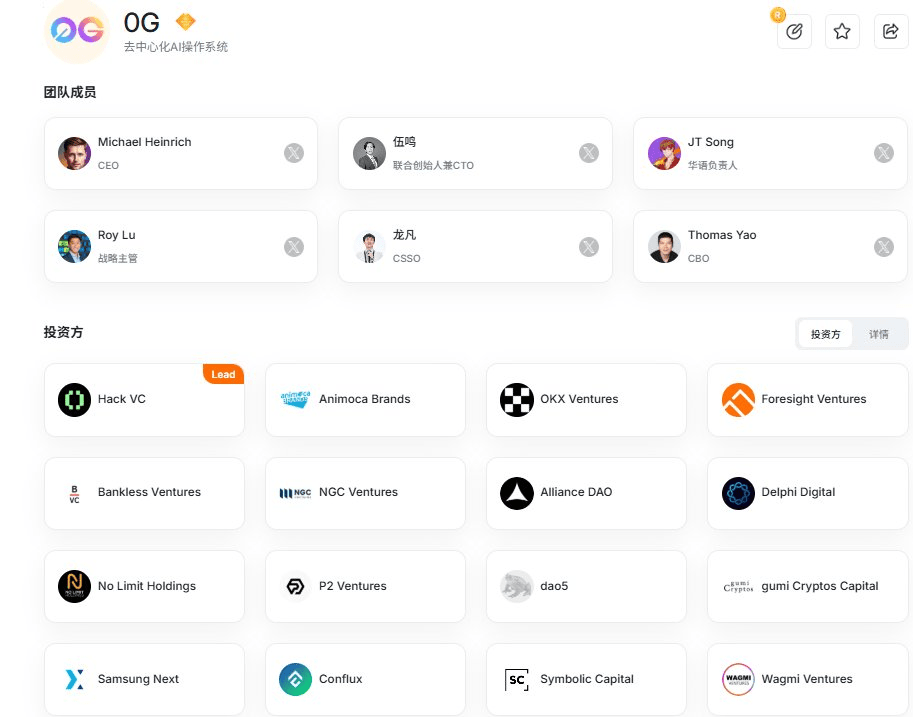

Five: Is 0G reliable now? Look at its funding and ecosystem partners (I've mentioned this many times).

Whether a project is reliable depends largely on its funding and on how far implementation has actually progressed.

0G announced last January that it had raised $325 million, primarily used to scale product development and build a developer community.

Moreover, 0G has formed a deep partnership with HackQuest focused on building its developer community. Notably, HackQuest, a developer education platform, has itself raised $4.1 million, so the collaboration should give the developer ecosystem a real boost.

Having money and partnerships isn't enough; the data from the testnet speaks volumes:

More than 650 million transactions, with 22 million accounts and over 8,000 validators;

At peak, each shard can reach 11,000 TPS, which is enough to process the massive data volumes AI requires.

Another point is the number of nodes: 85,000 nodes have already been sold and are run by more than 8,500 operators worldwide. The more nodes there are, the more stable and secure the network is overall; it's like having over 8,500 people ‘standing guard’ together, which makes problems much less likely.

Additionally, 0G is the first decentralized AI modular public chain and one of the earliest to form in-depth partnerships. It has not yet held its Token Generation Event (TGE), so there is clearly plenty of potential here worth discussing!

Six: Speaking of 0G, we must mention its iNFT. Simply put, an iNFT is an ‘NFT that can run AI functions’: what you buy is not just a picture, but a smart ‘little assistant.’

What makes it new? It uses a new standard called ERC-7857:

When buying an iNFT, not only is ownership transferred to you, but the AI model and data (also known as ‘metadata’) are included, so you won't end up with just an empty shell;

Sensitive data is stored encrypted to protect privacy, yet its authenticity can still be verified on-chain, so there's no need to fear being scammed;

This AI little assistant can also ‘grow,’ and the metadata can be updated at any time; the longer you use it, the more valuable it may become.
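The ‘verifiable on-chain’ part can be illustrated with a hash commitment: the chain stores only a fingerprint of the metadata, and anyone holding the actual data can check it against that fingerprint. The sketch below is a minimal Python illustration under that assumption. `MockRegistry` is a hypothetical in-memory stand-in for a contract, not the ERC-7857 interface, and encryption is deliberately left out (in practice the metadata itself would be stored encrypted off-chain, with only the hash on-chain).

```python
import hashlib
import json
from dataclasses import dataclass, field


def commitment(metadata: dict) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class MockRegistry:
    """Hypothetical in-memory stand-in for on-chain state (not ERC-7857)."""
    owners: dict = field(default_factory=dict)   # token_id -> owner
    history: dict = field(default_factory=dict)  # token_id -> list of metadata hashes

    def mint(self, token_id: int, owner: str, metadata: dict) -> None:
        self.owners[token_id] = owner
        self.history[token_id] = [commitment(metadata)]

    def _require_owner(self, token_id: int, caller: str) -> None:
        if self.owners[token_id] != caller:
            raise PermissionError(f"{caller} does not own token {token_id}")

    def update(self, token_id: int, caller: str, new_metadata: dict) -> None:
        """The owner records a new commitment as the assistant 'grows'."""
        self._require_owner(token_id, caller)
        self.history[token_id].append(commitment(new_metadata))

    def transfer(self, token_id: int, caller: str, to: str) -> None:
        self._require_owner(token_id, caller)
        self.owners[token_id] = to

    def verify(self, token_id: int, metadata: dict) -> bool:
        """True if the metadata matches the latest recorded commitment."""
        return self.history[token_id][-1] == commitment(metadata)


if __name__ == "__main__":
    reg = MockRegistry()
    reg.mint(1, "alice", {"model": "assistant-v1", "memory": []})
    print(reg.verify(1, {"memory": [], "model": "assistant-v1"}))  # True
```

Each `update` appends a new hash, which mirrors the ‘growing’ assistant: the commitment history shows that the metadata changed over time without revealing its contents, and a buyer can check that what they received matches the latest entry.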

This breaks the traditional AI model. Previously, using AI meant ‘borrowing’ it from large companies, but now you can ‘own’ a little AI assistant, sell it, or authorize others to use it, with all the profits going to you.

Seven: Worried about the learning curve? 0G has already paved the way for you.

Recently, 0G partnered with HackQuest @HackQuest_ to launch a dedicated course, the 0G Learning Track, covering everything from the data layer and storage mechanisms to connecting AI frameworks and working across chains. Everything is explained clearly, and on completion participants receive a certificate co-issued by both parties, which makes it a good springboard for developers looking to enter decentralized AI.

Eight: To be honest, 0G's idea is pretty good, but there are also quite a few challenges:

1) Decentralized systems are inherently slower than centralized ones, so how will 0G balance scalability and performance? That will depend on how things actually perform once the mainnet is live;

2) If different projects integrate using inconsistent module interface standards, the ecosystem could become ‘fragmented’;

3) Compliance also needs attention, since data and AI are subject to different policies in different places.

Nevertheless, decentralized AI is a direction worth exploring. If it can truly achieve ‘transparency, participation from everyone, and resilience,’ then AI can genuinely serve everyone rather than being a tool for a select few.

Will 0G succeed? It's too early to draw conclusions, but at least it has taken the first step. I will keep tracking this project because I believe it is worth the time! And @Jtsong2 is here building it together with us!

#0GLabs #AI