Written by: Mario Chow & Figo @IOSG

Introduction

In the past 12 months, the relationship between web browsers and automation has changed dramatically. Almost every major tech company is racing to build autonomous browser agents. The trend accelerated from late 2024: Anthropic released the 'Computer Use' feature for Claude, Google DeepMind introduced Project Mariner, OpenAI launched Operator (since folded into Agent Mode) in January 2025, Opera announced the agent-based browser Neon, and Perplexity AI launched the Comet browser. The signals are clear: the future of AI lies in agents that can autonomously navigate web pages.

This trend is not just about adding smarter chatbots to browsers. It represents a fundamental shift in how machines interact with digital environments. Browser agents are AI systems that can 'see' web pages and act on them by clicking links, filling out forms, scrolling, and entering text, just as human users do. This approach promises tremendous productivity and economic value, because it can automate tasks that still require human intervention today or are too complex for traditional scripts.
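The 'see, then act' cycle described above can be sketched as a loop. The agent observes the page, chooses one action, applies it, and repeats until the goal is met. This is a minimal illustration under stated assumptions, not how any product named in this article works. `FakePage`, `Action`, and the rule-based `decide` function are hypothetical stand-ins for a real rendered page and a real model.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str         # "type", "click", or "done"
    target: str = ""  # hypothetical element id on the page
    text: str = ""    # text to enter for "type" actions


@dataclass
class FakePage:
    """Toy stand-in for a rendered page: a form with two fields and a submit button."""
    fields: dict = field(default_factory=lambda: {"email": "", "name": ""})
    submitted: bool = False

    def observe(self) -> dict:
        # A real agent would read a screenshot or the DOM; here we copy the state.
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def apply(self, action: Action) -> None:
        if action.kind == "type":
            self.fields[action.target] = action.text
        elif action.kind == "click" and action.target == "submit":
            self.submitted = True


def decide(observation: dict, goal: dict) -> Action:
    """Rule-based placeholder for the model that chooses the next action."""
    if observation["submitted"]:
        return Action("done")
    for name, value in goal.items():
        if observation["fields"].get(name) != value:
            return Action("type", name, value)
    return Action("click", "submit")


def run_agent(page: FakePage, goal: dict, max_steps: int = 10) -> list:
    """Observe, decide, act; stop when done or after max_steps iterations."""
    trace = []
    for _ in range(max_steps):
        action = decide(page.observe(), goal)
        trace.append(action.kind)
        if action.kind == "done":
            break
        page.apply(action)
    return trace
```

For example, `run_agent(FakePage(), {"email": "ada@example.com", "name": "Ada"})` returns `["type", "type", "click", "done"]`. The `max_steps` cap is a simple guard against an agent that never converges.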

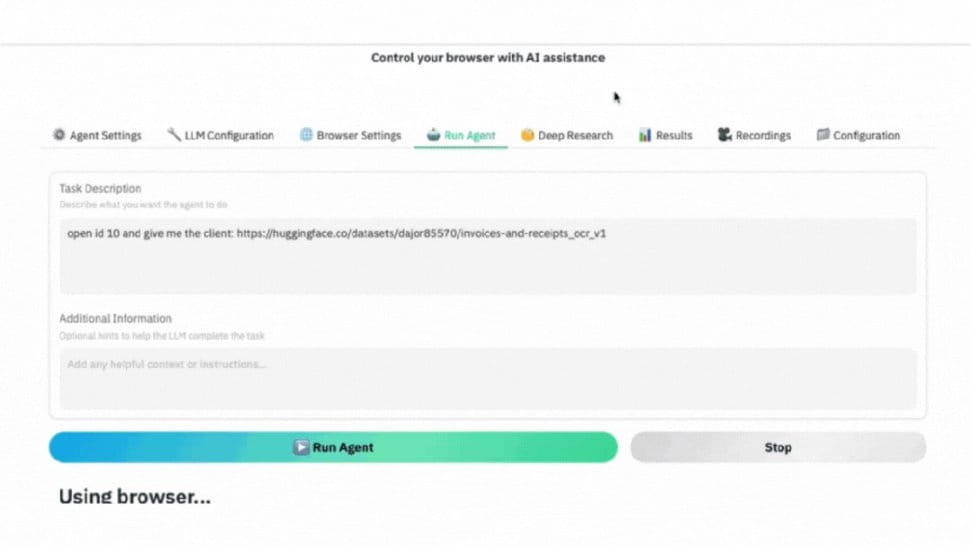

▲ GIF demonstration: an AI browser agent in action, following instructions, navigating to the target dataset page, automatically taking screenshots, and extracting the required data.

Who will win the AI browser wars?

Almost all major tech companies (as well as some startups) are developing their own browser AI agent solutions. Here are some of the most representative projects:

OpenAI – Agent Mode

OpenAI's Agent Mode (formerly Operator, launched in January 2025) is an AI agent with an integrated browser. It can handle a range of repetitive online tasks, such as filling out web forms, ordering groceries, and scheduling meetings. It does all of this through the standard web interfaces that humans use.

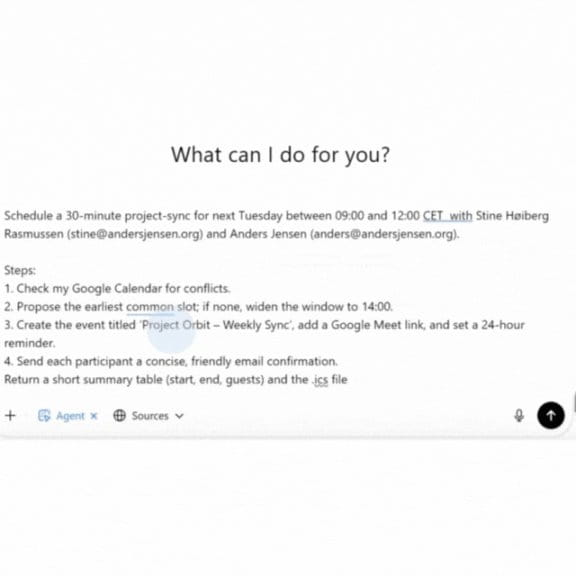

▲ AI agents schedule meetings like professional assistants: checking calendars, finding available time slots, creating events, sending confirmations, and generating .ics files for you.

Anthropic – Claude's 'Computer Use'

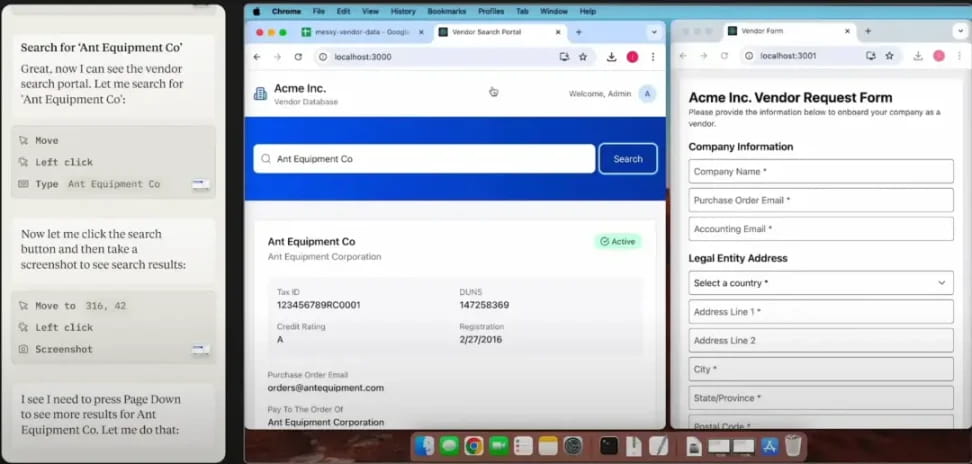

In late 2024, Anthropic introduced a new 'Computer Use' feature for Claude 3.5 Sonnet, giving it the ability to operate a computer and browser like a human: Claude can see the screen, move the cursor, click buttons, and enter text. Anthropic described it as the first capability of its kind from a frontier model to enter public beta, letting developers have Claude navigate websites and applications automatically. Anthropic positions the feature as experimental, aimed primarily at automating multi-step workflows on the web.

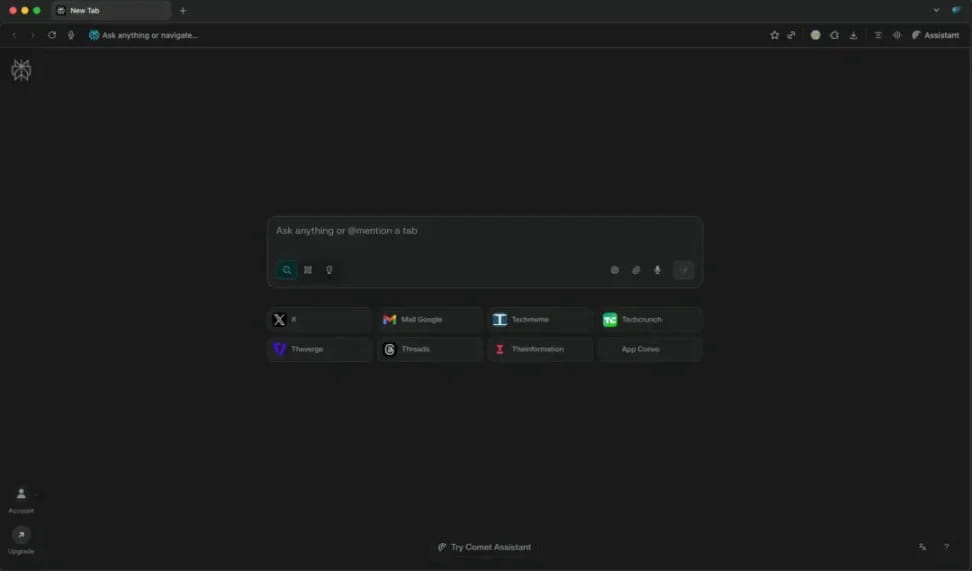

Perplexity – Comet

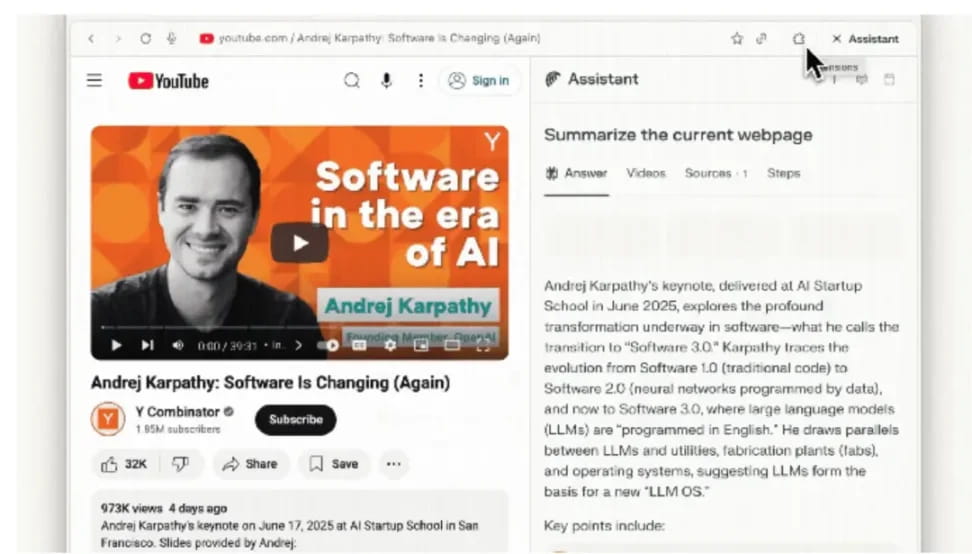

AI startup Perplexity (known for its question-answering engine) launched the Comet browser in mid-2025 as an AI-driven alternative to Chrome. The core of Comet is a conversational AI search engine built into the address bar (omnibox), capable of providing instant answers and summaries instead of traditional search links.

Comet also includes Comet Assistant, an agent that lives in the sidebar and can perform everyday tasks across websites automatically. For example, it can summarize open emails, arrange meetings, manage browser tabs, or browse and scrape web information on your behalf.

Because the assistant runs in the sidebar, it can read the content of the page you are viewing. Comet aims to integrate browsing and AI assistance seamlessly.

Real-world application scenarios of browser agents

Above, we reviewed how major tech companies (OpenAI, Anthropic, Perplexity, and others) are building browser-agent capabilities into different products. To better understand their value, we now look at how these capabilities apply in everyday life and corporate workflows.

Daily web automation

#E-commerce and Personal Shopping

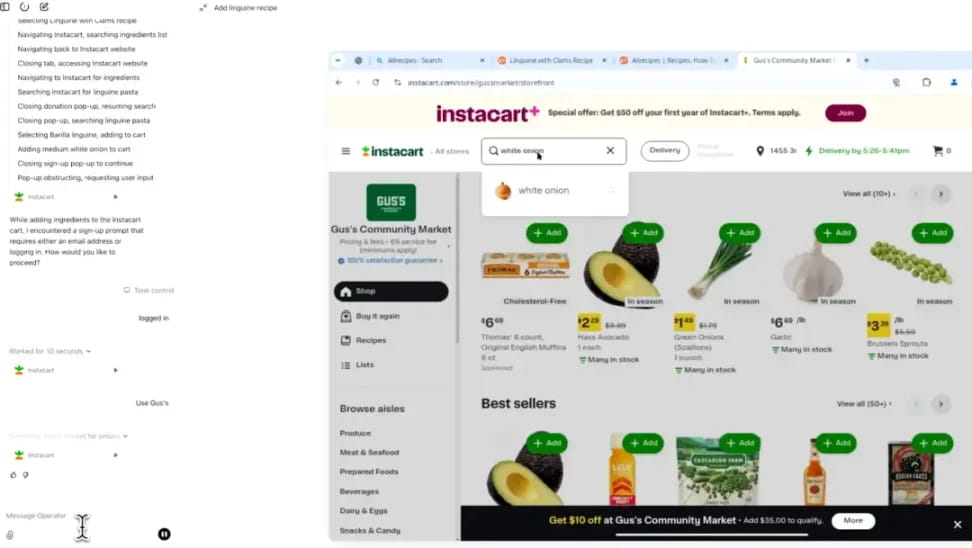

A very practical scenario is delegating shopping and booking tasks to agents. Agents can automatically fill your online shopping cart and place orders based on a fixed list, or look for the lowest prices among multiple retailers and complete the checkout process on your behalf.

For travel, you can ask the AI: 'Help me book a flight to Tokyo next month (ticket price under $800), and book a hotel with free Wi-Fi.' The agent handles the entire process through the airline and hotel websites: it searches for flights, compares options, fills in passenger information, and completes the hotel booking. This goes well beyond existing travel bots, which only make recommendations; the agent completes the purchase itself.
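Before the agent fills in any forms, it has to turn the natural-language request into explicit constraints that it can check against search results. The sketch below shows that step under stated assumptions. It assumes the agent has already collected offers into simple records. The `Flight` and `Hotel` types, the field names, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Flight:
    airline: str
    price_usd: float


@dataclass
class Hotel:
    name: str
    nightly_usd: float
    free_wifi: bool


def pick_trip(flights: list, hotels: list, max_flight_usd: float = 800) -> Optional[tuple]:
    """Cheapest flight strictly under the cap plus cheapest hotel with free Wi-Fi.

    Returns None when nothing satisfies the constraints, so the agent can
    report back to the user instead of booking something outside the request.
    """
    flights_ok = [f for f in flights if f.price_usd < max_flight_usd]
    hotels_ok = [h for h in hotels if h.free_wifi]
    if not flights_ok or not hotels_ok:
        return None
    best_flight = min(flights_ok, key=lambda f: f.price_usd)
    best_hotel = min(hotels_ok, key=lambda h: h.nightly_usd)
    return best_flight, best_hotel
```

Returning `None` rather than relaxing a constraint is a deliberate choice here. An agent that is allowed to spend money should escalate to the user, not improvise.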

#Enhancing Office Efficiency

Agents can automate many repetitive business operations that people perform in browsers. Examples include sorting emails and extracting to-dos, or checking several calendars for open slots and scheduling meetings automatically. Perplexity's Comet Assistant can already summarize your inbox through a web interface or add events to your calendar. With your authorization, agents can also log in to SaaS tools to generate regular reports, update spreadsheets, or submit forms. Imagine an HR agent that logs into different job-posting websites on its own, or a sales agent that updates lead data in a CRM system. By automating web forms and page operations, AI can take over these mundane tasks that would otherwise consume a lot of employee time.

Beyond single tasks, agents can chain together complete workflows across multiple web systems. Each step in such a workflow takes place in a different web interface, and this is where browser agents excel. Agents can log into various dashboards for troubleshooting. They can even orchestrate processes such as onboarding a new employee, which involves creating accounts on multiple SaaS websites. Essentially, any multi-step operation that currently requires opening multiple websites can be delegated to an agent.

Current challenges and limitations

Despite the enormous potential, today's browser agents are still far from perfect. Current implementations reveal some long-standing technical and infrastructure challenges:

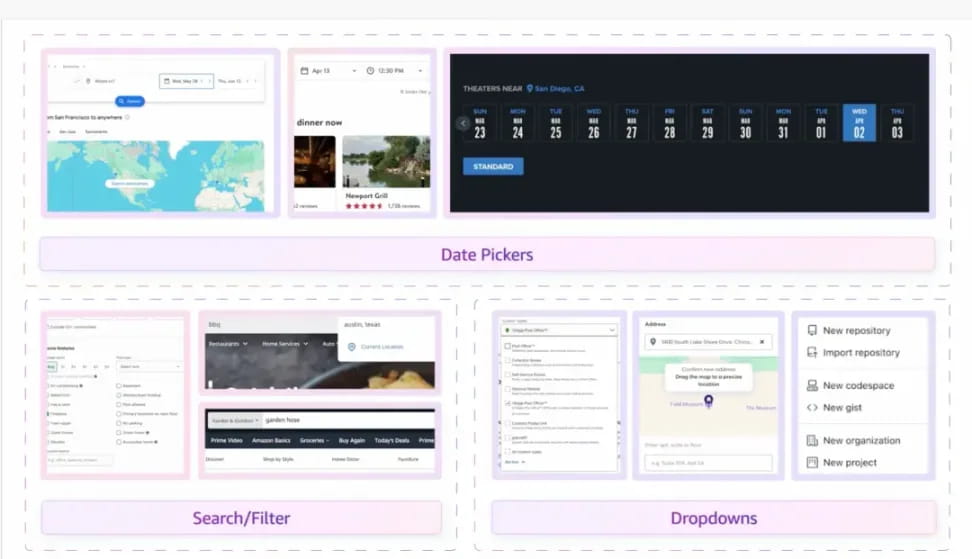

Mismatched architecture

The modern web is designed for human-operated browsers and has gradually evolved to actively resist automation. Data is often buried in HTML/CSS optimized for visual presentation, gated behind interactive gestures (mouse hovering, scrolling), or accessible only through unpublished APIs.

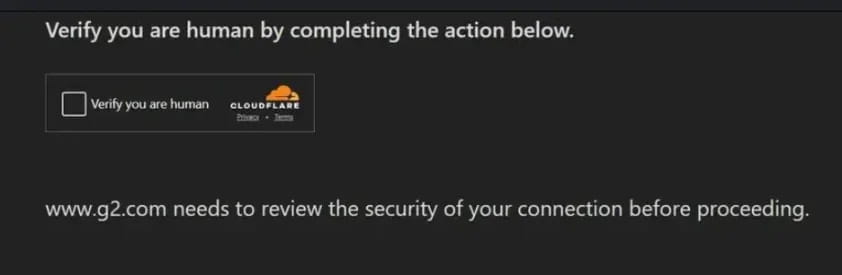

On top of this, anti-crawling and anti-fraud systems add deliberate barriers. These tools combine IP reputation, browser fingerprints, responses to JavaScript challenges, and behavior analysis (such as the randomness of mouse movements, typing rhythm, and dwell time). Ironically, the more 'perfect' and efficient an AI agent behaves, for example by filling in forms instantly and never making mistakes, the easier it is to identify as malicious automation. This can lead to hard failures. OpenAI's or Google's agents might complete every step up to checkout, only to be blocked by a CAPTCHA or a secondary security filter.
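The sketch below shows why being 'too perfect' gives an agent away. It is a toy version of one behavioral signal, typing rhythm. Human keystrokes arrive at irregular intervals, while scripted input is often perfectly even or instantaneous. The threshold `min_cv` is an arbitrary assumption. Real detection systems combine many such signals and do not rely on any single rule.

```python
import statistics


def looks_scripted(key_times_ms: list, min_cv: float = 0.15) -> bool:
    """Flag typing whose gaps between key presses are suspiciously uniform.

    key_times_ms: timestamps (ms) of successive key presses.
    min_cv: hypothetical threshold on the coefficient of variation
            (stdev / mean of the gaps); human typing is usually irregular.
    """
    gaps = [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]
    if len(gaps) < 2:
        return False  # too little data to judge
    mean_gap = statistics.mean(gaps)
    if mean_gap <= 0:
        return True  # the whole field appeared at once
    cv = statistics.stdev(gaps) / mean_gap
    return cv < min_cv
```

Evenly spaced presses such as `[0, 50, 100, 150, 200]` are flagged. An irregular sequence such as `[0, 120, 190, 400, 470, 700]` is not.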

Human-optimized interfaces combined with anti-bot defenses force agents into a fragile strategy of mimicking humans. This approach fails easily and has low success rates: without human intervention, fewer than one-third of full transactions are completed.

Trust and security concerns

For agents to have full control, they often need access to sensitive information: login credentials, cookies, two-factor authentication tokens, and even payment details. This raises understandable concerns for users and businesses alike:

What if the agent makes a mistake or is deceived by a malicious website?

If the agent agrees to certain terms or executes a transaction, who should be held responsible?

Given these risks, current systems generally take a cautious approach:

Google's Mariner does not enter credit card information or agree to terms of service; it hands those steps back to the user.

OpenAI's Operator prompts users to take over logins or CAPTCHA challenges.

An agent powered by Anthropic's Claude may directly refuse to log in for security reasons.

The result is frequent pauses and handovers between AI and humans, weakening the seamless automation experience.
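The cautious behavior described above works like a gate inside the agent's loop. Routine steps run automatically. Any sensitive step pauses the run until the user has approved it. The sketch below illustrates this under stated assumptions. The step names and the policy are hypothetical and are not taken from Mariner, Operator, or Claude.

```python
# Hypothetical names for steps that need a human decision.
SENSITIVE_STEPS = {"login", "solve_captcha", "accept_terms", "enter_payment"}


def run_plan(plan: list, approved: frozenset = frozenset()) -> tuple:
    """Run steps in order until one needs the user.

    Returns (completed_steps, step_awaiting_user); the second item is None
    when the whole plan ran without a handover.
    """
    completed = []
    for step in plan:
        if step in SENSITIVE_STEPS and step not in approved:
            return completed, step  # pause and hand control back to the user
        completed.append(step)  # a real agent would perform the step here
    return completed, None
```

Each unapproved sensitive step produces one of the pauses described above. This is the trade-off: safety improves, but the automation is no longer seamless.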

Despite these obstacles, progress is moving quickly. Companies like OpenAI, Google, and Anthropic learn from failures with each iteration. As demand grows, a kind of 'co-evolution' may emerge. Websites would become more agent-friendly where that benefits them, while agents keep improving their ability to mimic human behavior to get past existing barriers.

Methods and Opportunities

Current browser agents face two vastly different realities: on one hand is the hostile environment of Web2, where anti-bot and security defenses are everywhere; on the other hand is the open environment of Web3, where automation is often encouraged. This difference determines the direction of various solutions.

Broadly, the solutions below fall into two groups: those that help agents get past the hostile Web2 environment, and those native to Web3.

While the challenges facing browser agents remain significant, new projects are continuously emerging to directly address these issues. The cryptocurrency and decentralized finance (DeFi) ecosystems are becoming natural testing grounds because they are open, programmable, and less hostile to automation. Open APIs, smart contracts, and on-chain transparency eliminate many friction points common in the Web2 world.

Across these two groups, we look at four categories of solutions, each addressing one or more core limitations of the current situation:

Native agent-based browsers for on-chain operations

These browsers are designed from the ground up to be driven by autonomous agents and are deeply integrated with blockchain protocols. A conventional browser such as Chrome needs Selenium, Playwright, or wallet extensions added on top to automate on-chain operations. Native agent-based browsers instead expose APIs and trusted execution paths that agents can call directly.

In decentralized finance, a transaction's validity depends on its cryptographic signature, not on whether the user behaves 'like a human.' In on-chain environments, agents therefore avoid the CAPTCHAs, fraud-detection scores, and device-fingerprint checks common in the Web2 world. However, if these browsers point to Web2 sites like Amazon, they cannot bypass those defenses, and the usual anti-bot measures still apply.

The value of agent-based browsers is not in magically accessing all websites, but in:

Native blockchain integration: built-in wallets and signature support, eliminating the need for MetaMask pop-ups or parsing the dApp frontend DOM.

Automation-first design: provides stable high-level instructions that can directly map to protocol operations.

Security model: fine-grained permission controls and sandboxes keep private keys secure during automation.

Performance optimization: multiple on-chain calls can run in parallel, without waiting on page rendering or UI delays.
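The 'fine-grained permission controls' point can be made concrete. The key should sign a transaction only when it falls inside limits the user set in advance. The sketch below is a minimal version under stated assumptions. The `SessionPolicy` class, the contract names, and the USD budget are all hypothetical. In a real wallet, this check would run inside the key store before any signature is produced.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class SessionPolicy:
    """Hypothetical limits a user grants an agent for one session."""
    allowed_contracts: frozenset
    max_spend_usd: Decimal
    spent_usd: Decimal = Decimal("0")

    def authorize(self, contract: str, amount_usd: Decimal) -> bool:
        """Approve a transaction only if it stays inside the policy.

        Approved amounts count against the session budget; rejected ones do not.
        """
        if contract not in self.allowed_contracts:
            return False
        if self.spent_usd + amount_usd > self.max_spend_usd:
            return False
        self.spent_usd += amount_usd
        return True
```

The sketch uses `Decimal` instead of `float` so that budget arithmetic on amounts such as 0.1 stays exact.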

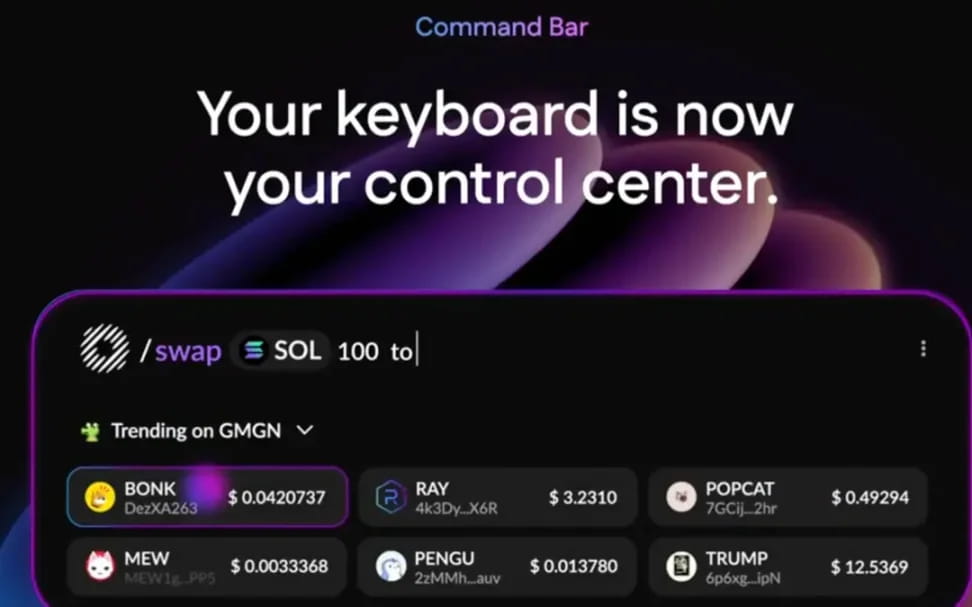

#Case Study: Donut

Donut treats blockchain data and operations as first-class citizens. Users (or their agents) can hover over a token to see real-time risk indicators, or type natural-language commands such as '/swap 100 USDC to SOL.' By skipping Web2's hostile friction points, Donut lets agents run at full speed in DeFi, improving liquidity, arbitrage, and market efficiency.
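A command such as '/swap 100 USDC to SOL' suits agents because it maps directly to one protocol operation. The sketch below shows that mapping under stated assumptions. It assumes exactly the grammar `/swap <amount> <TOKEN> to <TOKEN>`. Donut's actual command syntax and API are not described in this article, and `SwapIntent` is a hypothetical type.

```python
import re
from dataclasses import dataclass
from decimal import Decimal

# Assumed grammar: "/swap <amount> <TOKEN> to <TOKEN>", case-insensitive.
SWAP_RE = re.compile(
    r"/swap\s+(?P<amount>\d+(?:\.\d+)?)\s+(?P<sell>[A-Za-z0-9]+)\s+to\s+(?P<buy>[A-Za-z0-9]+)",
    re.IGNORECASE,
)


@dataclass(frozen=True)
class SwapIntent:
    amount: Decimal
    sell: str
    buy: str


def parse_swap(command: str) -> SwapIntent:
    """Turn a slash command into a structured swap request, or raise ValueError."""
    match = SWAP_RE.fullmatch(command.strip())
    if match is None:
        raise ValueError(f"unrecognized command: {command!r}")
    amount = Decimal(match["amount"])
    if amount <= 0:
        raise ValueError("swap amount must be positive")
    return SwapIntent(amount, match["sell"].upper(), match["buy"].upper())
```

The resulting structured intent, rather than the raw text, is what a routing and signing step would consume. Ambiguous input such as '/swap all my USDC' is rejected instead of guessed at.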

Verifiable and trustworthy agent execution

Granting agents sensitive permissions poses significant risks. Solutions in this category use trusted execution environments (TEEs) or zero-knowledge proofs (ZKPs) to protect an agent's execution and confirm that it behaves as expected. This lets users and counterparties verify agent actions without exposing private keys or credentials.

#Case Study: Phala Network

Phala uses TEEs (such as Intel SGX) to isolate and protect the execution environment, preventing Phala's operators or attackers from spying on or tampering with agent logic and data. A TEE acts like a hardware-backed 'secure room,' ensuring confidentiality (contents cannot be read from outside) and integrity (contents cannot be modified from outside).

For browser agents, this means they can log in, hold session tokens, or handle payment information while that sensitive data never leaves the secure enclave. Even if the user's machine, operating system, or network is compromised, the enclave is designed to keep this data from leaking. This directly addresses one of the biggest barriers to putting agents into practice: trust in how they handle sensitive credentials and operations.
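This model relies on remote attestation. Before a session token is handed over, a verifier checks that the enclave is running exactly the agent code the user expects. The sketch below is heavily simplified. It only compares a code hash (a 'measurement'). Real SGX attestation also requires verifying a hardware-signed quote that carries the measurement, and that step is omitted here. `EXPECTED_MEASUREMENT` and `release_secret` are hypothetical names, and the build string is a placeholder.

```python
import hashlib
import hmac
from typing import Optional

# Hash ("measurement") of the agent build the user has reviewed.
# Hypothetical value for illustration; real TEEs report their own measurement format.
EXPECTED_MEASUREMENT = hashlib.sha256(b"agent-build-1.0").hexdigest()


def release_secret(reported_measurement: str, secret: str) -> Optional[str]:
    """Provision a credential only to an enclave running the expected code.

    Simplification: a real verifier must first check the hardware-signed
    attestation quote that carries this measurement; that step is omitted here.
    """
    # compare_digest avoids leaking how many leading characters matched via timing.
    if hmac.compare_digest(reported_measurement, EXPECTED_MEASUREMENT):
        return secret
    return None
```

If anyone modifies the agent code, even by a single byte, the measurement changes and the credential is withheld.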

Decentralized structured data networks

Modern anti-bot systems check not only whether requests are 'too fast' or 'automated,' but also combine IP reputation, browser fingerprints, responses to JavaScript challenges, and behavior analysis (such as cursor movement, typing rhythm, and session history). Agents operating from data-center IPs or from identical, fully reproducible browser environments are easy to identify.

To address this, such networks stop scraping web pages built for humans. Instead, they collect and serve machine-readable data directly, or route traffic through real human browsing environments. This sidesteps the two stages where traditional crawlers break down, parsing and anti-crawling, and gives agents cleaner, more reliable inputs.

By routing agent traffic through these real-world sessions, distributed networks let AI agents access web content the way humans do, without immediately triggering blocks.
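Residential routing matters because of IP reputation. In its simplest form, a site checks whether the source address belongs to a known hosting provider. The sketch below uses Python's standard `ipaddress` module. The listed ranges are RFC 5737 documentation prefixes that stand in for a real reputation feed, and `from_datacenter` is a hypothetical helper.

```python
import ipaddress

# Placeholder "hosting provider" ranges. These are RFC 5737 documentation
# prefixes standing in for a real IP-reputation feed.
DATACENTER_RANGES = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def from_datacenter(ip: str) -> bool:
    """True if the address falls inside any listed hosting range."""
    addr = ipaddress.ip_address(ip)
    # Membership tests across IPv4/IPv6 simply return False.
    return any(addr in net for net in DATACENTER_RANGES)
```

An agent running on a cloud server fails this check immediately. The same request relayed through a contributor's home connection passes, which is the gap these networks exploit.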

#Case Studies

Grass: a decentralized data / DePIN network where users share idle residential broadband, providing agent-friendly, geographically diverse access channels for public web data collection and model training.

WootzApp: an open-source mobile browser supporting cryptocurrency payments, featuring a backend agent and zero-knowledge identity; it gamifies AI/data tasks for consumers.

Sixpence: a distributed browser network that routes AI agents' traffic through the browsers of contributors around the world.

However, this is not a complete solution. Behavioral detection (mouse/scrolling patterns), account-level restrictions (KYC, account age), and fingerprint consistency checks may still trigger blocks. Therefore, distributed networks are best viewed as a foundational layer of concealment that must be combined with human-mimicking execution strategies to achieve maximum effect.
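A residential IP alone is not enough, because the other attributes of a request must also agree with each other and with that IP. The toy consistency check below illustrates this. The dictionary keys are hypothetical: `user_agent` and `platform` loosely mirror the User-Agent header and `navigator.platform`, and the two country fields stand for what the browser's time zone and a geo-IP lookup imply.

```python
def fingerprint_mismatches(fp: dict) -> list:
    """List the consistency checks a reported browser fingerprint fails.

    Keys are hypothetical: "user_agent" and "platform" loosely mirror the
    User-Agent header and navigator.platform; "timezone_country" and
    "ip_country" stand for the country implied by the browser's time zone
    and by a geo-IP lookup.
    """
    problems = []
    ua = fp.get("user_agent", "")
    platform = fp.get("platform", "")
    windows_mismatch = "Windows" in ua and not platform.startswith("Win")
    mac_mismatch = "Mac OS X" in ua and not platform.startswith("Mac")
    if windows_mismatch or mac_mismatch:
        problems.append("ua_vs_platform")
    if fp.get("timezone_country") != fp.get("ip_country"):
        problems.append("timezone_vs_ip")
    return problems
```

Consider a request relayed through a German residential node by an agent whose headless browser reports Linux and a US time zone. It passes the IP check but fails both checks here, which is why concealment must be paired with consistent execution.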

Web standards for agents (looking ahead)

A growing number of tech communities and organizations are exploring a question: if future web users include automated agents as well as humans, how should websites interact with them safely and compliantly?

This has spurred discussions on some emerging standards and mechanisms aimed at allowing websites to clearly indicate 'I allow trusted agents to access,' and providing a secure channel to complete interactions, rather than defaulting to treating agents as 'bot attacks' as is done today.

'Agent Allowed' tag: just as search engine crawlers follow robots.txt, future web pages may include a tag in their code that tells browser agents 'this can be safely accessed.' For example, if you book a flight through an agent, the website would skip the usual string of CAPTCHAs and provide an authenticated interface directly.

Certified agent API gateway: websites can open dedicated entrances for verified agents, just like a 'fast lane.' Agents do not need to simulate human clicks or inputs, but rather follow a more stable API path to complete orders, payments, or data queries.

W3C discussions: The World Wide Web Consortium (W3C) has been exploring how to create standardized channels for 'managed automation.' This means that in the future, we may have a universally accepted set of rules that allow trusted agents to be recognized and accepted by websites while maintaining security and accountability.
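No 'Agent Allowed' tag has been standardized yet. The robots.txt mechanism that the proposal borrows from already shows the basic idea of per-agent permissions. The sketch below uses Python's standard `urllib.robotparser` to check whether a named agent may fetch a URL. The agent name `ExampleBookingAgent` and the rules are hypothetical. Note that robots.txt is advisory only and provides no authenticated channel.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt that admits one named agent to booking pages only,
# and keeps every other client out of /booking/.
ROBOTS_TXT = """\
User-agent: ExampleBookingAgent
Allow: /booking/
Disallow: /

User-agent: *
Disallow: /booking/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def agent_may_fetch(agent: str, url: str) -> bool:
    """Ask the parsed rules whether this user agent may fetch the URL."""
    return parser.can_fetch(agent, url)
```

Under these rules, `ExampleBookingAgent` may fetch `/booking/...` pages but nothing else, while any other client may browse the rest of the site but not the booking pages.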

Although these explorations are still at an early stage, once implemented they could greatly improve relations among humans, agents, and websites. Agents would no longer have to mimic human mouse movements to 'fool' risk control. Instead, they could complete tasks through an 'officially allowed' channel.
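One way a certified agent gateway could recognize a verified agent is to require a signature on every request. The signature would be made with a key issued when the agent registers. The sketch below uses an HMAC shared secret as a stand-in. The agent registry, the message layout, and the five-minute replay window are hypothetical. A production design would more likely use public-key signatures, so the site never holds the agent's secret.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical registry of verified agents and the keys issued to them.
AGENT_KEYS = {"agent-123": b"demo-shared-secret"}
MAX_SKEW_S = 300  # reject requests more than five minutes old or in the future


def _message(agent_id: str, timestamp: int, body: bytes) -> bytes:
    # Bind the signature to who is calling, when, and exactly what is sent.
    return f"{agent_id}.{timestamp}.".encode() + body


def sign(agent_id: str, body: bytes, timestamp: int) -> str:
    """Agent side: sign a request body with the agent's issued key."""
    return hmac.new(AGENT_KEYS[agent_id], _message(agent_id, timestamp, body), hashlib.sha256).hexdigest()


def verify(agent_id: str, body: bytes, timestamp: int, signature: str, now: Optional[int] = None) -> bool:
    """Gateway side: accept only fresh requests from registered agents."""
    key = AGENT_KEYS.get(agent_id)
    if key is None:
        return False  # not a registered agent
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_S:
        return False  # stale or future-dated request
    expected = hmac.new(key, _message(agent_id, timestamp, body), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)
```

A valid signature then stands in for the CAPTCHAs and behavioral checks. A tampered body, a replayed old request, or an unknown agent is rejected without any guesswork about whether the client is 'human.'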

Crypto-native infrastructure may take the lead on this path, because on-chain applications inherently rely on open APIs and smart contracts and are therefore friendly to automation. Traditional Web2 platforms, by contrast, may remain cautious, especially those that depend on advertising or anti-fraud systems. However, as users and businesses come to accept the efficiency gains that automation brings, these standardization efforts could become the key catalyst for pushing the whole internet toward an 'agent-first architecture.'

Conclusion

Browser agents are evolving from simple conversational tools to autonomous systems capable of completing complex online workflows. This shift reflects a broader trend: embedding automation directly into the core interface of user interactions with the internet. While the potential for productivity gains is enormous, the challenges are equally severe, including how to overcome entrenched anti-bot mechanisms, and how to ensure security, trust, and responsible usage.

In the short term, better reasoning, faster execution, tighter integration with existing services, and advances in distributed networks may gradually make agents more reliable. In the long term, we may see 'agent-friendly' standards take hold in scenarios where automation benefits both service providers and users. The transition will not be uniform, however. Adoption will be faster in automation-friendly environments like DeFi, and slower on Web2 platforms that rely heavily on controlling user interaction.

In the future, competition among tech companies will increasingly focus on a few questions: how well their agents navigate real-world constraints, whether they can be safely integrated into critical workflows, and whether they can deliver results consistently across diverse online environments. Whether all of this ultimately reshapes the 'browser wars' will depend not only on technical strength but on the ability to build trust, align incentives, and demonstrate tangible value in everyday use.