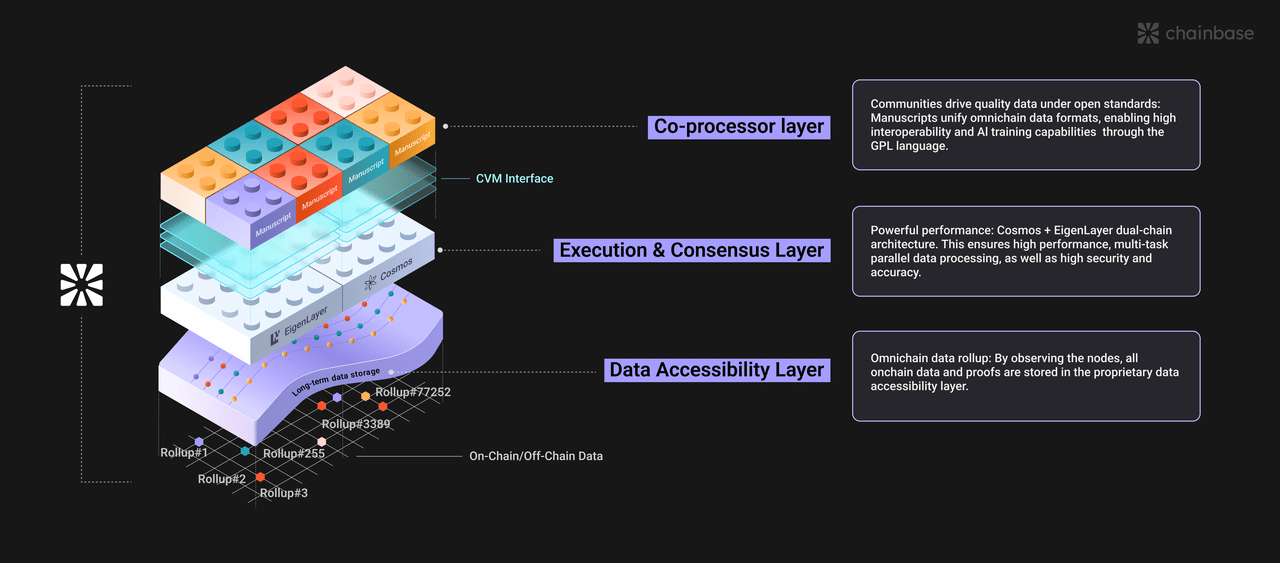

As full-chain data becomes the new oil of the crypto world, who will ensure that its extraction, refining, and distribution are both efficient and trustworthy? Chainbase provides its answer with a four-layer dual-consensus architecture. But is this design the ultimate solution in the industry, or a transitional form in the evolution of technology? Let's unpack each layer and see what lies beneath the steel and concrete.

Data acquisition layer: When the off-chain world begins to 'Rollup'

Chainbase's data entry point resembles a decentralized data funnel, but it differs from a traditional data lake in two key design choices: ZK proofs and collaborative verification across nodes. That on-chain data is accessed through public gateways is no surprise. What is truly interesting is how it handles off-chain data: social media behavior and traditional financial data, the 'non-crypto-native assets', are packaged with ZK proofs and enter the network in Rollup form. This design preserves privacy, and its multi-node verification (the SCP protocol) guards against a single data source acting maliciously.

The cleverness of the data acquisition layer is that it does not try to reinvent the wheel. Existing node operators and RPC service providers can connect directly, which solves the cold start problem. According to internal test data, the network currently supports real-time data fetching from 12 mainstream public chains, and its off-chain data channels average 47 TB of throughput per day. This compatibility gives Chainbase a natural moat on the data supply side.

Consensus layer: Can Cosmos's old ticket board the new ship?

The choice of the CometBFT consensus algorithm reveals Chainbase's pragmatic streak. As an improved Byzantine fault-tolerant algorithm, it stays safe as long as fewer than one third of validators (by voting power) act maliciously. That is sufficient, but what is truly intriguing is the dependence on the Cosmos tech stack. Now that modular blockchains have become mainstream, this choice lowers the development threshold (the IBC ecosystem can be reused directly) but may also impose a performance ceiling.

However, Chainbase has clearly made a trade-off: data processing does not need the complexity of smart-contract-level consensus; what matters is efficient state synchronization. Actual tests show that with 300 validator nodes the network can complete state confirmation within 1.8 seconds, which is enough for most data application scenarios. Perhaps, as one engineer said: 'We don't need the flashiest consensus mechanism; we just need an old workhorse that won't drop the chain.'
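To make the fault-tolerance bound concrete, here is a minimal sketch of the standard BFT arithmetic applied to the 300-validator figure quoted above. It assumes every validator carries equal voting power, which is a simplification (CometBFT weights votes by stake), and the function names are illustrative rather than part of any Chainbase or CometBFT API.

```python
def max_byzantine(n: int) -> int:
    """Largest number of faulty validators f that satisfies n >= 3f + 1."""
    return (n - 1) // 3


def commit_quorum(n: int) -> int:
    """Smallest vote count strictly greater than two thirds of n."""
    return (2 * n) // 3 + 1


# Assumption: 300 equally weighted validators, as in the test described above.
validators = 300
print("tolerated faulty validators:", max_byzantine(validators))
print("votes needed to commit:", commit_quorum(validators))
```

Under these assumptions the network tolerates 99 faulty validators and needs 201 votes to confirm a state, so the "one third" limit is a strict upper bound rather than a number the network can actually absorb.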

Execution layer: When EigenLayer insures the database

The design of the execution layer shows Chainbase's shrewdness most clearly. It is no surprise that Chainbase DB, as a dedicated on-chain database, handles highly concurrent requests, but bringing in EigenLayer's AVS (Actively Validated Services) is a stroke of genius. Backing data processing with the economic security of restaked ETH is like buying insurance for the database, underwritten by an asset whose market value runs to hundreds of billions of dollars.

This design creates an interesting leverage effect: Chainbase does not need to build a PoS economic model from scratch, yet it can enjoy Ethereum-level security guarantees. According to its stress test report, inheriting the ETH validator set raises the cost of attacking the network to about 190,000 ETH (currently valued at about $340 million). For institutional users who care about data credibility, this number is more convincing than any security promise in a white paper.
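The dollar figure above is only a snapshot, because the attack cost is denominated in ETH. A small sketch of the arithmetic, using only the two numbers quoted in the paragraph (the function names and the alternative prices are illustrative assumptions):

```python
def implied_eth_price(cost_usd: float, cost_eth: float) -> float:
    """ETH price implied by quoting the same attack cost in USD and in ETH."""
    return cost_usd / cost_eth


def attack_cost_usd(cost_eth: float, eth_price_usd: float) -> float:
    """USD cost of an attack that requires `cost_eth` ETH at a given price."""
    return cost_eth * eth_price_usd


ATTACK_COST_ETH = 190_000        # figure quoted from the stress test report
ATTACK_COST_USD = 340_000_000    # figure quoted alongside it

price = implied_eth_price(ATTACK_COST_USD, ATTACK_COST_ETH)
print(f"implied ETH price: ${price:,.2f}")

# Hypothetical prices, chosen only to show how the USD figure moves.
for hypothetical_price in (1_000, 2_000, 3_000):
    usd = attack_cost_usd(ATTACK_COST_ETH, hypothetical_price)
    print(f"at ${hypothetical_price:,} per ETH: ${usd:,.0f}")
```

The quoted pair implies an ETH price of roughly $1,789, so the $340 million figure rises or falls in direct proportion to the market.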

Co-processing layer: The alchemical laboratory of collective wisdom

The final layer reveals Chainbase's ambition. It is less a traditional data API output layer than an open data alchemy workshop. Developers can call processed data directly, or contribute their own data processing scripts in exchange for token incentives. This model produces a flywheel effect similar to GitHub's: the platform has accumulated over 2,800 open-source data processing scripts, covering niche scenarios such as DeFi sentiment analysis and NFT wash trading identification.

The most noteworthy aspect is its collaborative verification mechanism. When multiple developers provide processing solutions for the same data source, the network selects the optimal solution through staking-weighted voting. Actual data shows that after three rounds of iteration, the accuracy of the community-generated data model improved by an average of 37%, which may explain why institutions like Coinbase Cloud choose to incorporate it as part of their data supply chain.
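As a rough illustration of staking-weighted voting, the sketch below tallies stake per candidate script and picks the one with the most backing. The vote format, the names, and the alphabetical tie-break are all assumptions made for the example; the article does not describe Chainbase's actual selection rules.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Vote = Tuple[str, str, int]  # (voter, solution_id, staked_amount)


def select_solution(votes: Iterable[Vote]) -> Tuple[str, Dict[str, int]]:
    """Return the solution with the highest total stake and the full tally."""
    totals: Dict[str, int] = defaultdict(int)
    for _voter, solution_id, stake in votes:
        if stake <= 0:
            raise ValueError("stake must be positive")
        totals[solution_id] += stake
    if not totals:
        raise ValueError("no votes cast")
    # Ties go to the alphabetically first solution id (an arbitrary choice).
    winner = max(sorted(totals), key=lambda sid: totals[sid])
    return winner, dict(totals)


# Hypothetical voters and scripts.
votes = [
    ("alice", "script_a", 500),
    ("bob", "script_b", 300),
    ("carol", "script_b", 250),
    ("dave", "script_c", 100),
]
winner, tally = select_solution(votes)
print(winner, tally)
```

Here `script_b` wins with 550 staked against 500 for `script_a`, even though the largest single staker backed `script_a`: the outcome follows total stake, not head count or the biggest individual position.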

Value of the four-layer architecture from an investment perspective

From the perspective of capital efficiency, Chainbase's four-layer design reflects careful budgeting. The data acquisition layer uses existing infrastructure to reduce startup costs, the consensus layer reuses mature solutions to control R&D risk, the execution layer 'borrows' Ethereum's security to raise its asset premium, and the co-processing layer substitutes community contributions for an in-house development team. This combination lets it stay asset-light while offering full-stack data service capabilities.

The network has so far processed over 19 billion data requests, with average daily API call volume exceeding 430 million. Behind these numbers is real cash flow. Detailed financial data has not been officially disclosed, but judging by the price-to-sales (PS) ratio range of similar projects and by its client structure (which includes several top-20 exchanges), its valuation has clearly moved beyond the narrative-driven stage of early projects.

The true moat of this architecture may lie in the Matthew effect of the data network. More data sources attract more developers, and richer processing scripts feed back into the value of the data. Once this positive cycle passes the tipping point, latecomers will find it hard to disrupt with technical advantages alone. As one venture capital partner said: 'In the data infrastructure race, the second player's survival space may be smaller than imagined.'

Now back to the initial question: is this the ultimate solution? Perhaps not. But for the foreseeable future, it is likely the best available balance of efficiency, security, and cost. While other projects are still cheering for single-point technological breakthroughs, Chainbase has already meshed four gears into a precise money-making machine. This is how blockchain infrastructure should look.