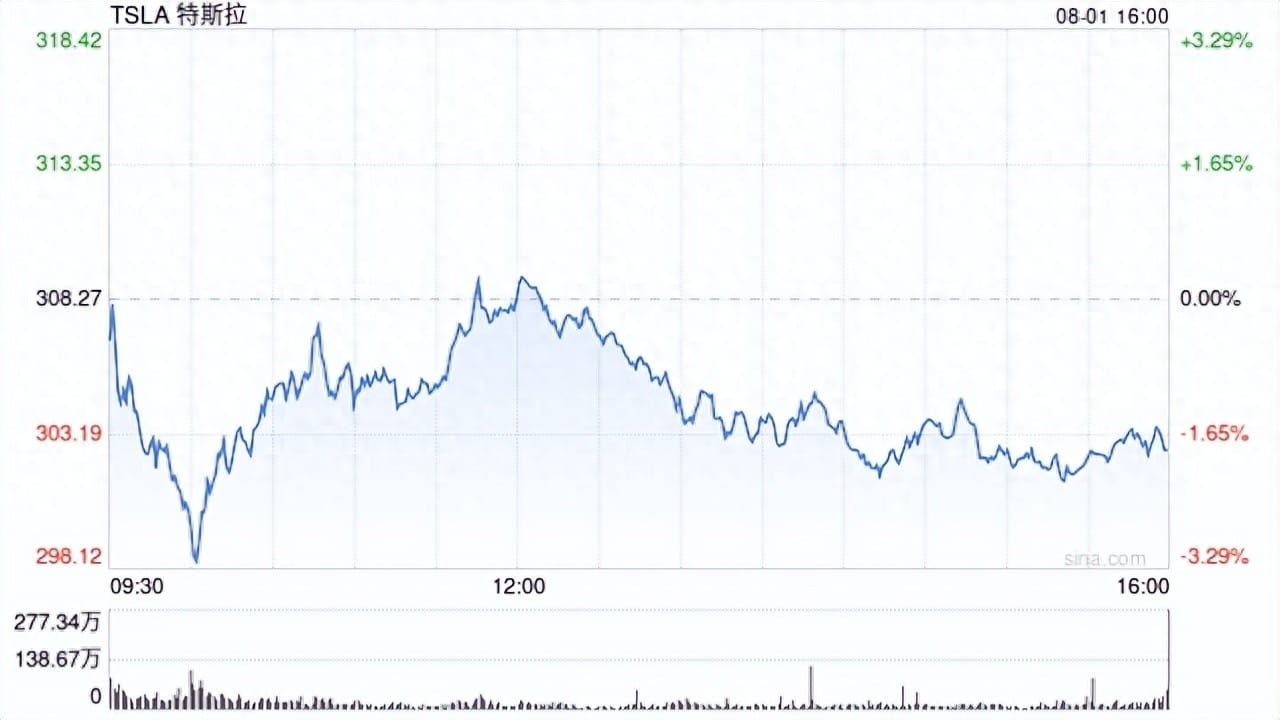

Tesla loses for the first time and is ordered to pay $243 million in damages!

Musk's years of exaggerated promotion have now become evidence against Tesla. The verdict ends Tesla's unbeaten record in lawsuits over its driver-assistance systems and may set a precedent for similar liability suits in the future.

Tesla loses for the first time

Last Friday, a federal jury in Miami, Florida, ruled that Tesla bears partial responsibility for a fatal 2019 crash in Florida and ordered the company to pay a total of $243 million in damages, including punitive damages intended to deter similar conduct in the future.

After a three-week trial and two days of deliberation, the eight-member jury found Tesla one-third responsible for the crash and the driver two-thirds responsible. The Tesla driver, who crashed after looking down to pick up a dropped phone, was sued separately.

The jury assessed the plaintiffs' pain and emotional distress at a total of $129 million. Because Tesla was found one-third responsible, it owes one-third of that amount, or $43 million in compensatory damages. Adding the $200 million in punitive damages brings Tesla's total to $243 million.
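The damages arithmetic can be checked in a few lines of Python. This is a minimal sketch using the article's rounded figures (a $129 million total and a one-third fault share); it assumes, as described above, that only the compensatory portion is apportioned by fault while the $200 million punitive award is added in full.

```python
from fractions import Fraction

# Figures in millions of USD, taken from the article (rounded).
total_compensatory = 129            # jury's total for pain and emotional distress
tesla_fault_share = Fraction(1, 3)  # Tesla found one-third responsible
punitive = 200                      # punitive damages, not apportioned by fault

# Assumption: compensatory damages are split strictly by fault share.
tesla_compensatory = total_compensatory * tesla_fault_share  # exactly 43
tesla_total = tesla_compensatory + punitive                  # exactly 243

print(f"Compensatory owed by Tesla: ${float(tesla_compensatory):.0f}M")
print(f"Total owed by Tesla: ${float(tesla_total):.0f}M")
```

If the jury's share was recorded as a rounded percentage rather than an exact third, the compensatory figure would come out slightly different from $43 million.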

Tesla subsequently issued a statement saying: "Today's ruling is wrong and will only hinder the development of automotive safety, jeopardizing Tesla's and the entire industry's efforts to develop and implement life-saving technologies. Given the significant legal errors and irregularities in the trial, we plan to appeal."

The U.S. system of punitive damages deserves some explanation, since few other countries award such large additional sums. In U.S. civil lawsuits, especially those involving large corporations and serious wrongdoing, courts may award plaintiffs both compensation for their actual losses and substantial punitive damages, which under U.S. Supreme Court guidance should generally be no more than a single-digit multiple of the actual loss (that is, under ten times).
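To see where this verdict sits relative to the single-digit guideline, the ratio of punitive to compensatory damages can be computed directly. The sketch below is illustrative only: the helper names are hypothetical, the guideline is a rule of thumb rather than a hard cap, and the Monsanto figures are those of the 2018 jury verdict discussed in this article.

```python
def punitive_ratio(punitive: float, compensatory: float) -> float:
    """Return punitive damages as a multiple of compensatory damages."""
    return punitive / compensatory


def within_single_digit(ratio: float) -> bool:
    """True if the ratio is below 10, i.e. a single-digit multiple."""
    return ratio < 10


# (punitive, compensatory) in millions of USD, as reported in the article.
cases = {
    "Florida Tesla verdict": (200, 43),
    "Monsanto Roundup jury verdict (2018)": (250, 39),
}

for name, (p, c) in cases.items():
    r = punitive_ratio(p, c)
    print(f"{name}: {r:.1f}x, single-digit: {within_single_digit(r)}")
```

Both ratios come out in single digits (about 4.7x and 6.4x), a reminder that staying under the guideline does not by itself protect an award from later reduction.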

Punitive damages are meant to punish defendants for malicious fraud, intentional misconduct, or gross negligence, and to deter both the company and others from similar conduct. Unlike an administrative fine, they are paid to the plaintiff rather than the government, although attorneys typically take 30% to 40% as their fee.

Companies typically appeal, and large punitive awards are often substantially reduced. Tesla disputes this verdict and has announced an appeal, so it would not be surprising if the $200 million in punitive damages were significantly cut.

The best-known example in Chinese internet culture is the McDonald's hot coffee case (Liebeck v. McDonald's). In 1992, a 79-year-old woman was badly burned by hot McDonald's coffee; in 1994, a jury awarded her $200,000 in compensatory damages and $2.7 million in punitive damages. The jury found that McDonald's had received hundreds of complaints about coffee burns but had not taken effective measures to protect customers, which warranted additional punishment. However, the trial judge later reduced the punitive damages to $480,000.

The Monsanto case is another classic example of punitive damages. A school groundskeeper in California was diagnosed with non-Hodgkin lymphoma (NHL) after years of using Monsanto's glyphosate-based herbicide Roundup. In 2018, a California jury found that Monsanto had failed to adequately warn of glyphosate's cancer risks and had concealed scientific evidence, and awarded him $39 million in compensatory damages and $250 million in punitive damages. After post-trial and appellate reductions, however, the total award was cut to about $20.5 million.

This verdict paved the way for tens of thousands of subsequent lawsuits. In 2019, another jury ordered Monsanto to pay a married couple $55 million in compensatory damages plus $1 billion each in punitive damages in a Roundup cancer case. Bayer, which had acquired Monsanto in 2018, agreed in 2020 to pay up to $10.9 billion to settle about 125,000 related claims.

An innocent couple torn apart

With this background on U.S. punitive damages in mind, we can look at the details of the Florida crash and why the jury found Tesla partially responsible. Musk's past exaggerated descriptions of Autopilot became the main basis for the $200 million punitive award.

On the night of April 25, 2019, around 9 PM, George McGee was driving a Tesla Model S on a remote country road in Key Largo, Florida. He had driven this road at least thirty times and, feeling comfortable on it, had engaged Enhanced Autopilot, Tesla's driver-assistance system. During the drive, however, his phone slipped onto the floor of the car.

McGee later told police that he was looking down to pick up his phone and did not realize he was approaching an intersection. When he looked up, it was already too late. His Tesla ran through a stop sign at the intersection at 100 km/h, crashing into a parked Chevrolet Tahoe SUV.

A couple standing on the other side of the Chevrolet were torn apart in an instant. Naibel Benavides Leon, 22, was struck, thrown more than 20 meters, and died at the scene; her boyfriend, 33-year-old Dillon Angulo, suffered multiple fractures and a brain injury and has required long-term treatment and rehabilitation.

Clearly, driver McGee bears direct responsibility for this tragic accident, and he does not dispute that he was driving negligently. Tests showed that McGee had not been drinking, but he believed Tesla's Enhanced Autopilot would brake automatically when it encountered an obstacle. Angulo and Benavides Leon's family sued the driver at the time and later reached a settlement. However, they believed Tesla should also bear part of the responsibility, so they subsequently sued Tesla as well.

They argued that Tesla's marketing of the Autopilot driver-assistance feature led drivers to mistakenly believe the system was fully autonomous. Moreover, the system was then in a 'beta' phase, which in their view meant it had not been fully safety-tested and was not suitable for roads with intersections or cross traffic.

The Autopilot version of the time was not designed for the kind of road where the crash occurred. The lawyers wrote in the complaint: 'Tesla allowed autonomous driving to be activated on unsuitable roads, knowing that this would lead to collisions and cause innocent casualties, and these people did not choose to participate in Tesla's experiment.'

Tesla clearly disagrees with this. They stated in a release, "The evidence clearly shows that this accident was unrelated to Tesla's autonomous driving technology. Like many unfortunate accidents since the invention of the smartphone, this accident was caused by driver distraction."

'McGee admitted his responsibility: while searching for his dropped phone, he kept his foot on the accelerator, which made the car speed and overrode the vehicle's systems. At the time of the 2019 accident, no collision-avoidance technology could have prevented this tragedy.'

Musk's statements became evidence

Although Musk did not attend the trial, he was the focal point of the court over the past three weeks, with both sides repeatedly mentioning his name and his various statements about Autopilot.

During the opening statements and throughout the trial, the plaintiff's attorneys and expert witnesses cited many of Musk's past commitments regarding autonomous driving and Tesla's autonomous driving technology. They accused Musk and Tesla of making false statements to customers, shareholders, and the public, exaggerating the safety and functionality of autonomous driving, and encouraging drivers to over-rely on the system.

The trial centered on how Tesla and Musk marketed the driver-assistance software. Autopilot is purely a driver-assistance system that requires drivers to stay fully attentive and be ready to take over at any time; Tesla stated this in the user manual, yet it still chose the name Autopilot.

What statements made by Musk did the plaintiff's lawyer list in court as evidence to persuade the jury?

When unveiling the Autopilot 2.0 hardware in 2016, Musk described its sensor suite as 'superhuman'. In a public interview that year, he said, 'Our sensors and software will ultimately surpass human capabilities in detecting and responding to the environment. In this regard, Model S and Model X can drive autonomously more safely than humans.'

In a 2016 earnings call, Musk stated, 'I think we will be able to prove that we can achieve full autonomous driving... from a hardware perspective, the problem has largely been solved.' In 2019, he wrote online, 'Tesla's full autonomous driving will work. The software is gradually improving, and we are close to solving it.'

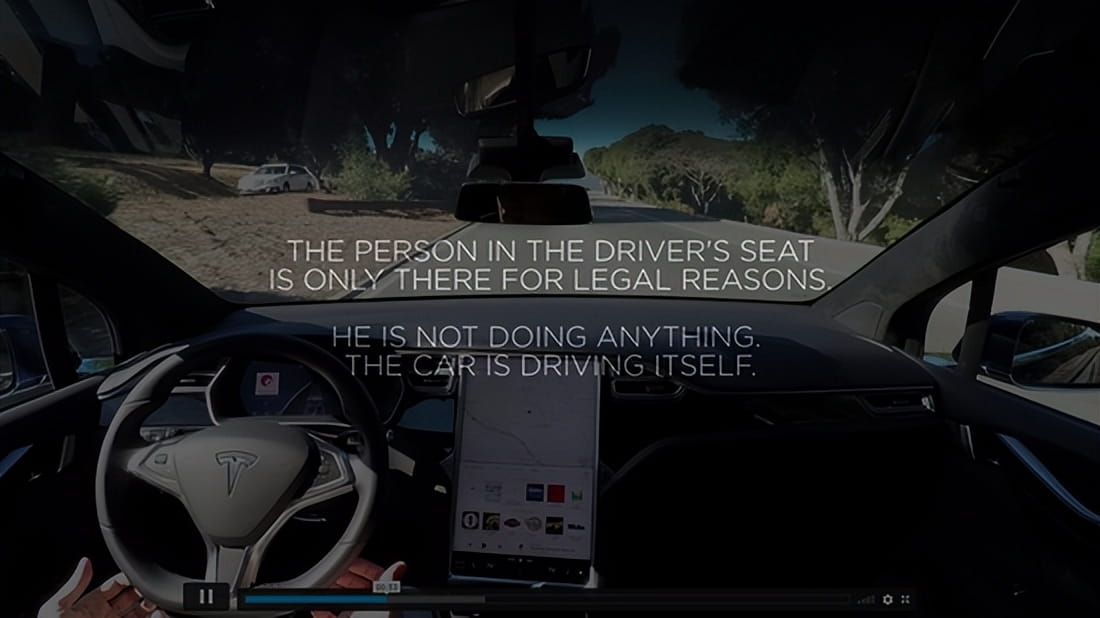

The most controversial piece of promotion was a 2016 Tesla video claiming that its hardware platform supported full self-driving, with the statement: "The driver is only in the driver's seat for legal reasons." The video was later widely criticized as misleading advertising, because the hardware could not actually handle the scenarios shown. Several former Tesla employees acknowledged that the route had been mapped in advance and that the car in the video was not truly planning its own path.

This statement has been widely cited as evidence that Tesla's marketing implied its vehicles were fully self-driving when they still required human supervision. The promotional strategy became key evidence in later lawsuits and regulatory investigations, in which plaintiffs and regulators have accused Tesla of misleading consumers.

Musk's statements at the time were intended to show that Tesla's driver-assistance system could reach autonomous-driving capability in the future, but the plaintiffs argued that Musk exaggerated the technical capabilities of Tesla's Autopilot and distorted the public's expectations of its safety.

The driver of the accident, McGee, also stated in court, 'My understanding of Autopilot is that if I make a mistake or do something wrong, the vehicle will assist me. If I make a mistake, the car should be able to help me. In this accident, I really felt it disappointed me.'

Tesla's lawyer emphasized in defense, 'McGee is the only responsible party for the accident, and he acknowledged the responsibility. He has passed that intersection dozens of times; it was his act of looking down to pick up the phone that caused the accident. In 2019, no safety system could have prevented such an accident. The plaintiff's lawyer is fabricating lies, trying to shift the responsibility to the vehicle.'

The jury ultimately supported the plaintiff's opinion. On the most critical issue, whether Tesla marketed vehicles with defects, and whether that defect was a legal cause of the plaintiff's damages, the jury answered affirmatively.

Most ended in settlements

This is certainly not the first time Tesla has been sued over crashes involving its Autopilot or FSD driver-assistance systems. However, the Florida case is the first time Tesla has lost such a lawsuit and been found partially responsible for a crash, and the hefty punitive award may encourage more lawsuits.

Over the past few years, Tesla has faced more than twenty similar civil lawsuits brought by owners involved in crashes or by families of the deceased. Tesla has consistently maintained that these crashes happened because drivers failed to take control in time and had nothing to do with the driver-assistance system. In most cases, Tesla settled, and very few lawsuits reached trial.

In 2023, a jury in Riverside, Southern California, found that Tesla's Autopilot had no manufacturing defect and that Tesla was not liable for a fatal 2019 crash in which a Model 3, while in Autopilot mode, hit a tree at 120 km/h and caught fire. In that case, however, the jury focused on technical manufacturing defects and did not consider the misleading marketing by Tesla and Musk.

In March 2018, 38-year-old Apple engineer Walter Huang was driving a 2017 Model X, which crashed head-on at a speed of 100 km/h into a concrete barrier on Highway 101, causing the electric vehicle's battery to ignite. The scene was extremely tragic; Huang died after being taken to the hospital, leaving behind a wife and two children.

According to accident investigations, Walter Huang was also using his phone at the time of the crash and did not take control of the vehicle or brake in time to avoid the collision. However, Huang's widow later sued Tesla for using its customers as beta testers, claiming that Huang had previously noticed the Model X's Autopilot making recognition errors and that Tesla had said it would fix the problem in the future while letting him keep driving.

Why is the Florida verdict so significant for Tesla? Because most such cases never reach a verdict. The family of Walter Huang, for example, settled with Tesla last year after six years of litigation over the 2018 crash. The plaintiffs in the 2019 Florida crash, by contrast, pressed on to trial and won, at least at this first stage.

For many years, Tesla and Musk have promoted Autopilot and its other driver-assistance software, Full Self-Driving, as significant advancements in automotive safety. Musk claims that Tesla vehicles using this software are safer than human drivers and that the company is betting on developing a safe, autonomous taxi fleet in the future.

Federal and California regulators have long questioned the safety of Tesla's systems. A report released last year by the National Highway Traffic Safety Administration (NHTSA) found that Tesla's driver-assistance system had a 'critical safety gap' and linked it to at least 467 crashes, including 13 fatal ones. Whether Autopilot and FSD bear direct responsibility for these crashes is still pending official investigation and determination by U.S. regulators.

California demands a one-month sales ban

The core issue is that although Tesla's Autopilot and FSD carry names that suggest autonomous driving, they are actually Level 2 (sometimes marketed as L2+) advanced driver-assistance systems. They have not reached Level 3, at which the car can drive without constant supervision, let alone true Level 4 autonomy. In the event of a crash, the driver is therefore expected to bear full responsibility.
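The level terminology comes from the SAE J3016 standard. The Python sketch below is a simplified summary rather than the standard's full definitions; the class and function names are hypothetical, and "L2+" is an industry label rather than an official SAE level, so it is treated as Level 2 here.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Simplified summary of the SAE J3016 driving-automation levels."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2      # systems marketed as "L2+" still fall here
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """At Levels 0-2 the human driver must monitor the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_fallback_required(level: SAELevel) -> bool:
    """Up to Level 3 a human must be ready to take over when needed;
    at Levels 4-5 the system itself handles the fallback."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


# Hypothetical classification following the article's description of Autopilot.
autopilot = SAELevel.PARTIAL_AUTOMATION
print(driver_must_supervise(autopilot))    # True
print(human_fallback_required(autopilot))  # True
```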

However, Tesla and Musk misled consumers through the naming of 'autonomous driving' and various videos and public statements, including the official statement that 'the driver is in the driver's seat for legal reasons,' suggesting to consumers that the vehicle already had full autonomous driving capabilities or was about to achieve them.

This is precisely why Tesla and Musk have faced ongoing false-advertising lawsuits over the past few years. In September 2022, California consumers filed a class-action lawsuit against Tesla for misleading them through the names 'Autopilot' and 'FSD'. Since then, California courts have repeatedly rejected Tesla's requests to dismiss the lawsuit or move it to arbitration; the case is still ongoing.

Meanwhile, on July 28, 2022, the California Department of Motor Vehicles (DMV) filed an administrative complaint against Tesla accusing it of false advertising about Autopilot and FSD capabilities, seeking to suspend Tesla's license to sell cars in California for at least 30 days and to require restitution to consumers.

The case went to trial last month, and no final ruling has been issued yet. California is the largest electric vehicle market in the U.S.; a one-month sales ban would deal a significant blow to Tesla's cash flow.

Notably, last Friday Tesla launched a ride-hailing service in the San Francisco Bay Area through its Robotaxi app. At first glance, Tesla seems to have expanded its driverless taxi service from Austin, Texas, to the Bay Area, a significant milestone, with a service area far larger than that of Google's Waymo.

In reality, however, Tesla has not obtained a permit to operate driverless taxis on California roads and is not authorized to offer Level 4 autonomous driving services; it holds only a conventional passenger-transport permit (Charter Party Carrier Permit). So, unlike the Austin Robotaxi, where the safety monitor sits in the front passenger seat, the safety driver in Tesla's Bay Area ride-hailing vehicles sits in the driver's seat. The vehicles also carry no Robotaxi markings.

This is yet another classic case of Musk's marketing running ahead of reality.

Source: Sina.com, Silicon Valley Observer / Zheng Jun
