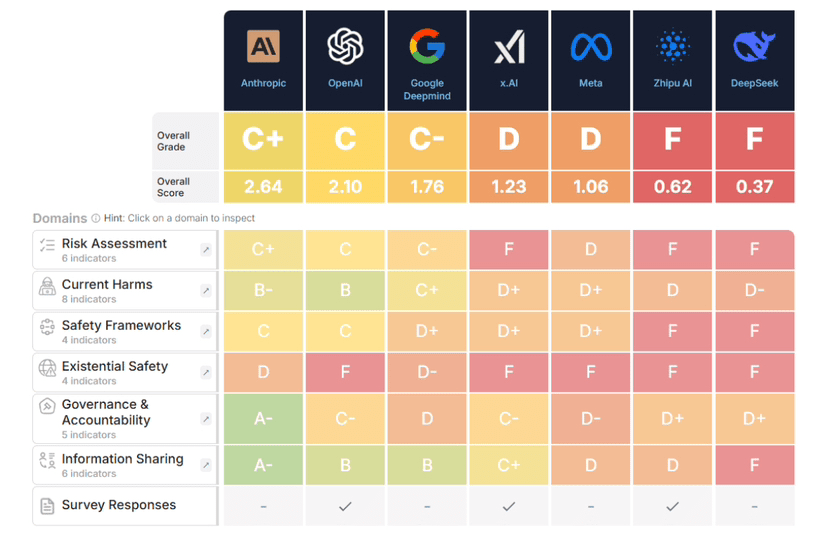

The Future of Life Institute (FLI) has released a report stating that leading artificial intelligence (#AI) developers lack clear strategies for ensuring safety when building systems with human-level intelligence. The report assessed how prepared seven of the largest developers of large language models (LLMs) are for the emergence of artificial general intelligence (#AGI).

None of the companies received a grade higher than D for existential safety planning. The companies evaluated were #OpenAI, #Google DeepMind, Anthropic, Meta, xAI, and the Chinese firms Zhipu AI and DeepSeek. The index covers six key areas, including the assessment of current risks and preparedness for potential disasters caused by loss of control over AI.

The best overall result, a C+, went to Anthropic. It is followed by OpenAI with a C and Google DeepMind with a C-. The other organizations showed an even lower level of readiness. One reviewer emphasized that no company has a 'coherent, implemented plan' for controlling the development of artificial intelligence.

Max Tegmark, a Massachusetts Institute of Technology (MIT) professor and FLI co-founder, compared the threat posed by these companies' work to that of nuclear technologies. At the same time, he said, the organizations do not disclose even basic plans for preventing possible disasters. Tegmark also noted that AI is developing much faster than earlier forecasts predicted: where the timeline was once measured in decades, the developers themselves now acknowledge that AGI could arrive in just a few years.