In blockchain architecture, a quiet revolution is underway. It doesn’t revolve around block height, token airdrops, or yet another L2 hype cycle. Instead, it rethinks how computing resources themselves become tradable assets. This is the story of how Boundless is turning “nodes re-executing everything” into a marketplace of provers, and how its token economics create trust, alignment, and opportunity.

The problem: “every node re-executes everything” still costs us time, gas, and scale

Classic blockchains operate under the paradigm that every full node must independently execute every transaction or smart contract logic to arrive at the same resulting state. That design gives you security and decentralization, but at a cost:

▪ Thousands of nodes repeating the same computation → massive duplication.

▪ Execution bottlenecks (gas limits, throughput ceilings, state bloat).

▪ Chains that aren’t designed for heavy compute tasks: analytics, simulations, large-batch logic, ML inference, etc.

▪ Fragmentation: as solutions try to scale, new chains or layer-2s arise with different stacks, code, and assumptions.

Enter zero-knowledge proof systems and general-purpose zkVMs: they allow one execution off-chain, and then verification on-chain via a succinct proof. But until recently, these were largely point solutions.

That’s where Boundless steps in.

The shift: from “every node re-executes everything” to a marketplace where provers compute and submit proofs

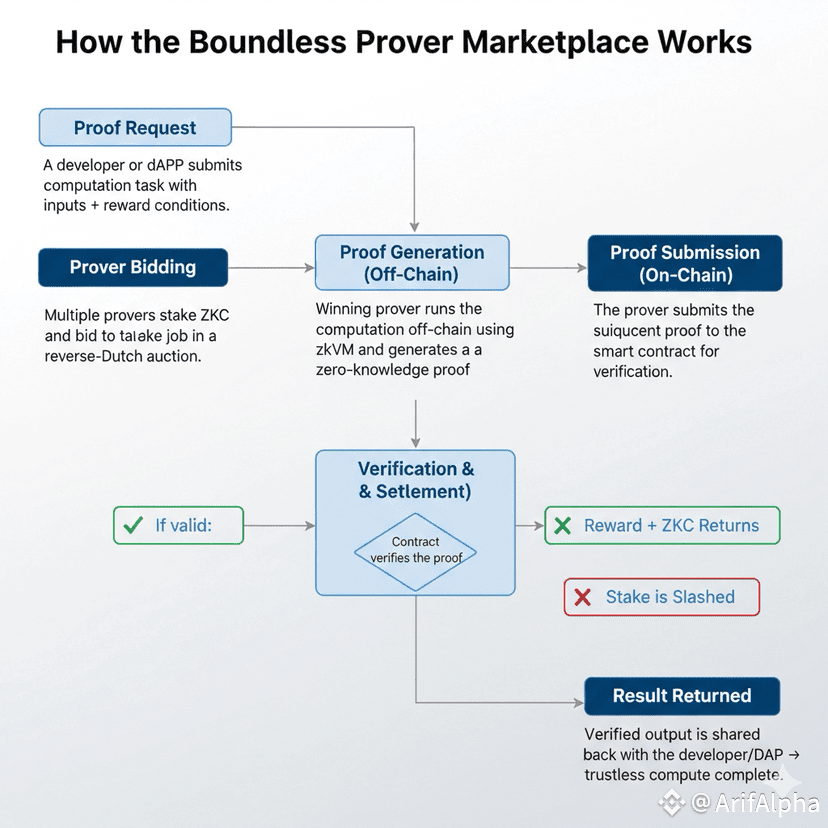

Boundless introduces a Prover Marketplace model: instead of every node re-running code, a network of provers does the heavy compute off-chain and generates a zk-proof, and the blockchain simply verifies the proof.

What that means in practice

A developer (or application) submits a proof request: “Here’s a Rust program (or other code) and inputs; generate a proof that you executed it correctly and produced this output.”

Provers enter a reverse-Dutch auction or bidding process: they stake collateral (ZKC), bid for the job, take it, generate the proof, and submit it on-chain.

The blockchain or verification smart contract checks the proof. If all good: prover gets rewarded; if not: collateral is slashed.

The result? Execution is decoupled from consensus. Many provers compete; the chain just verifies succinct proofs. Scale becomes a function of how many provers participate, how much hardware they bring, and how much capacity you unlock, not of how many nodes repeat the whole logic.
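
The request flow described above can be read as a small state machine. Here is a minimal sketch of that reading in Python. The state and event names are hypothetical labels chosen for illustration, not identifiers from Boundless, and treating both a rejected proof and a missed deadline as slashing events is a simplifying assumption.

```python
from enum import Enum, auto


class State(Enum):
    OPEN = auto()       # request posted, auction running
    LOCKED = auto()     # a prover has staked collateral and taken the job
    FULFILLED = auto()  # proof verified on-chain, prover rewarded
    SLASHED = auto()    # proof invalid or deadline missed, collateral forfeited


# Allowed transitions, keyed by (current state, event name).
# Event names are illustrative assumptions.
TRANSITIONS = {
    (State.OPEN, "lock"): State.LOCKED,
    (State.LOCKED, "proof_verified"): State.FULFILLED,
    (State.LOCKED, "proof_rejected"): State.SLASHED,
    (State.LOCKED, "deadline_missed"): State.SLASHED,
}


def step(state: State, event: str) -> State:
    """Return the next state, or raise if the event is not allowed here."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state.name}") from None


def run(events) -> State:
    """Replay a sequence of events starting from a freshly opened request."""
    state = State.OPEN
    for event in events:
        state = step(state, event)
    return state
```

Calling `run(["lock", "proof_verified"])` walks the happy path, while an out-of-order event such as verifying a proof before anyone has locked the job raises an error.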

Why this is powerful

Reduces duplicated execution: you no longer pay for full re-execution on every node.

Enables heavy compute tasks (analytics, simulations) that would otherwise be prohibitive on-chain.

Introduces compute as a tradable economic asset: provers stake, bid, earn, and if they fail, they lose stake.

Encourages specialization: hardware providers, high-performance GPUs, optimized proving stacks, all can participate.

Developer-friendly: you don’t have to redo your stack; you simply call the proving marketplace.

How the economic model works: Provers, ZKC staking, bidding, slashing

Let’s walk through the main economic pieces of the Boundless marketplace, highlighting how trust and incentives align.

1. Staking collateral (ZKC)

If you want to act as a prover, you need to stake ZKC tokens as collateral. That gives the network a guarantee: you have skin in the game. If you fail to deliver or act maliciously, you risk losing that stake.

2. The reverse-Dutch auction & bidding

When a proof request comes in, the market mechanism opens:

The offered price starts at a minimum and rises toward a maximum until a prover accepts it (a reverse-Dutch format).

Provers estimate hardware cost, time, risk, stake, and bid accordingly.

The first prover to accept the job locks the request by staking collateral and becomes responsible for it. If they succeed, they get the reward; if they fail, their collateral is forfeited and becomes available to others.
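
To make the rising price concrete, here is a small sketch of a reverse-Dutch price curve. It assumes the price rises linearly from the minimum to the maximum over a fixed ramp period and then stays flat; the actual curve and parameter names used by Boundless may differ, and `offer_price`, `first_acceptable_time`, and `ramp_s` are hypothetical names.

```python
from typing import Optional


def offer_price(elapsed_s: float, min_price: float, max_price: float, ramp_s: float) -> float:
    """Price offered to provers `elapsed_s` seconds after the auction opens.

    Assumes a linear ramp from min_price to max_price over ramp_s seconds,
    after which the price stays at max_price.
    """
    if ramp_s <= 0 or max_price < min_price:
        raise ValueError("need ramp_s > 0 and max_price >= min_price")
    fraction = min(max(elapsed_s / ramp_s, 0.0), 1.0)
    return min_price + (max_price - min_price) * fraction


def first_acceptable_time(cost: float, min_price: float, max_price: float,
                          ramp_s: float) -> Optional[float]:
    """Earliest time at which the offered price covers a prover's cost.

    Returns None if the price never reaches the prover's cost.
    """
    if cost > max_price:
        return None
    if cost <= min_price:
        return 0.0
    return ramp_s * (cost - min_price) / (max_price - min_price)
```

Under this model, a prover with lower costs can profitably accept earlier in the ramp, so the requester tends to pay something close to the cost of the most efficient prover who is paying attention.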

3. Proof generation & submission

Once the job is locked:

The prover runs the off-chain computation in the zkVM (e.g., RISC Zero’s stack) and generates a proof.

The proof is submitted on-chain (or to the verification contract) and validated.

Upon success, the prover receives the fee and earns ZKC rewards under Proof of Verifiable Work (PoVW). Upon failure, the stake is slashed.
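
The bookkeeping behind locking and settlement can be sketched as follows. This is an illustration under stated assumptions, not the protocol's accounting: the `ProverAccount` fields and function names are hypothetical, amounts are plain integers, the whole locked collateral is forfeited on failure, and PoVW rewards are left out because the article does not give their formula.

```python
from dataclasses import dataclass


@dataclass
class ProverAccount:
    free_stake: int = 0    # ZKC available to lock on new jobs
    locked_stake: int = 0  # ZKC locked as collateral on in-flight jobs
    fees_earned: int = 0   # job fees received so far


def lock(account: ProverAccount, collateral: int) -> None:
    """Move collateral from free to locked stake when a job is taken."""
    if collateral <= 0 or collateral > account.free_stake:
        raise ValueError("insufficient free stake")
    account.free_stake -= collateral
    account.locked_stake += collateral


def settle(account: ProverAccount, collateral: int, fee: int, proof_ok: bool) -> int:
    """Settle one job and return the amount slashed.

    On success the collateral is released and the fee is credited.
    On failure the collateral is forfeited (assumed to be slashed in full).
    """
    if collateral > account.locked_stake:
        raise ValueError("collateral exceeds locked stake")
    account.locked_stake -= collateral
    if proof_ok:
        account.free_stake += collateral
        account.fees_earned += fee
        return 0
    return collateral
```

A prover that starts with 100 ZKC, locks 40 on a job, and fails ends up with 60 free and nothing locked, which is the "skin in the game" the staking section describes.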

4. Token incentives (ZKC as utility)

ZKC plays multiple roles:

Collateral for provers. If you fail or act badly, you lose your stake.

Rewards for provers through PoVW: useful work gets compensated.

Staking for network participants: even if you’re not directly proving, you can stake ZKC and earn a portion of protocol rewards.

Governance: ZKC holders vote on protocol upgrades, marketplace rules, and risk parameters.

5. Slashing, reliability & trust

Because provers stake collateral, there is a financial cost for failure. This builds trust — the network doesn’t rely on promises but on economic consequences. Over time, metrics like prover reliability, slashing history, and latency percentiles become part of the ecosystem.
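
As a rough illustration of what such metrics could look like, the sketch below summarizes a prover's job history into a slash rate and latency percentiles. The `JobRecord` shape and the choice of the nearest-rank percentile method are assumptions made for this example; the article does not specify how reliability is measured.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class JobRecord:
    latency_s: float  # time from lock to proof submission
    slashed: bool     # True if the job ended in slashing


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def reliability(history: Sequence[JobRecord]) -> Dict[str, float]:
    """Summarize a job history; latencies only count jobs that were not slashed."""
    if not history:
        raise ValueError("empty history")
    completed = [job.latency_s for job in history if not job.slashed]
    return {
        "jobs": len(history),
        "slash_rate": sum(job.slashed for job in history) / len(history),
        "p50_latency_s": percentile(completed, 50),
        "p95_latency_s": percentile(completed, 95),
    }
```

A requester could use a summary like this to decide how tight a deadline to set, or a dashboard could rank provers by it.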

Real-life scenario: How this works for a dApp, a rollup, and a prover

Let’s paint a few concrete scenarios to humanize the model.

Scenario A: A DeFi dApp off-loads heavy analytics

Imagine a DeFi platform that wants to compute risk scores across thousands of assets each block. Running that on-chain would cost a fortune. Instead:

The developer writes a Rust program that ingests the data, computes risk, and outputs a compact result.

They submit a proof request via Boundless, specifying the program, inputs, and job parameters (time limit, reward, collateral conditions).

Provers bid: one GPU-cluster operator takes the job, stakes ZKC, and generates the proof in, say, 30 seconds.

The proof is submitted and verified on-chain; the dApp gets the result and proceeds.

Developer pays the fee; prover gets paid; network advances.
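
To show what “specifying the program, inputs, and job parameters” might involve, here is a hypothetical request builder. It is not the Boundless SDK: the `ProofRequest` fields, the `build_request` helper, and the use of SHA-256 digests to identify the program and inputs are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ProofRequest:
    program_digest: str  # hex SHA-256 of the compiled program (assumed identifier)
    input_digest: str    # hex SHA-256 of the serialized inputs
    min_price: int       # starting price of the auction
    max_price: int       # ceiling the requester is willing to pay
    timeout_s: int       # time limit for delivering the proof
    collateral: int      # stake the prover must lock to take the job


def build_request(program: bytes, inputs: bytes, *, min_price: int, max_price: int,
                  timeout_s: int, collateral: int) -> ProofRequest:
    """Validate job parameters and bind the request to exact program and input bytes."""
    if not 0 <= min_price <= max_price:
        raise ValueError("need 0 <= min_price <= max_price")
    if timeout_s <= 0 or collateral <= 0:
        raise ValueError("timeout_s and collateral must be positive")
    return ProofRequest(
        program_digest=hashlib.sha256(program).hexdigest(),
        input_digest=hashlib.sha256(inputs).hexdigest(),
        min_price=min_price,
        max_price=max_price,
        timeout_s=timeout_s,
        collateral=collateral,
    )
```

Committing to digests rather than raw bytes keeps the request small and makes it unambiguous which program and which inputs the eventual proof must refer to.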

Scenario B: A rollup uses the marketplace to compress state

A rollup wants to upgrade to a ZK-based exit game or interactive dispute resolution. Instead of building a full custom proving fleet, they:

Define a zk-program for dispute checking or state transition verification.

Post the job to the proving marketplace; provers bid, stake, compute.

The rollup verifies compact proofs, reduces gas costs, and speeds verification.

Market pricing ensures cost efficiency and scalability over time.

Scenario C: A hardware operator becomes a prover

You’re an operator with GPU rigs. You install the Boundless Bento infrastructure (or similar). You stake ZKC as collateral. You monitor the market: when a job arrives you bid. You compute proofs and earn both job fees and PoVW rewards. If you fail or miss the latency requirement, you risk slashing — incentivizing you to optimize, deliver reliably, and build a reputation.
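
An operator's bidding decision can be framed as a simple expected-value calculation. The sketch below assumes the prover pays its compute cost whether or not the proof lands, loses the full collateral on failure, and ignores PoVW rewards (the article gives no formula for them). The function names and the single `failure_prob` parameter are hypothetical simplifications.

```python
def expected_profit(price: float, compute_cost: float, collateral: float,
                    failure_prob: float) -> float:
    """Expected profit from locking a job at `price`.

    With probability (1 - failure_prob) the prover earns `price`;
    with probability failure_prob it loses `collateral`.
    The compute cost is paid in both cases.
    """
    if not 0.0 <= failure_prob <= 1.0:
        raise ValueError("failure_prob must be in [0, 1]")
    return (1.0 - failure_prob) * price - compute_cost - failure_prob * collateral


def min_profitable_price(compute_cost: float, collateral: float,
                         failure_prob: float) -> float:
    """Lowest price at which expected profit is non-negative."""
    if not 0.0 <= failure_prob < 1.0:
        raise ValueError("failure_prob must be in [0, 1)")
    return (compute_cost + failure_prob * collateral) / (1.0 - failure_prob)
```

The break-even price rises quickly with the failure probability, which is why the slashing risk pushes operators toward reliable hardware and conservative deadlines.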

Why this marketplace model matters for scale and infrastructure

Compute as an asset

Rather than treating hardware as something that only mines ether or validates blocks, Boundless turns compute cycles into verifiable work units that can be monetized, bid on, and scaled independently of consensus. This has several implications:

Supply can scale: as more provers join, more compute capacity becomes available.

Demand drives pricing: auctions reflect market conditions (hardware cost, job urgency, competition).

Transparency: everything is on-chain — proof jobs, bidding, staking, collateral, rewards.

Efficiency: The heavy compute is off-chain; on-chain work is minimal (verification).

Unbundling execution from consensus

By moving execution out and leaving only verification on-chain, the protocol avoids inefficient duplication, lowers gas costs, and makes heavy compute tasks feasible.

This also means chains can maintain high security while enabling new types of applications — large-scale simulations, enterprise inference, and cross-chain state verification.

Ecosystem growth & developer alignment

For developers: access to a proving marketplace means you don’t need to build or maintain your own proving infrastructure. You simply plug into the market.

For provers/operators: there’s a clear revenue stream (jobs + token rewards) and transparent mechanisms (staking/slashing) to build trust.

For tokenholders: ZKC gives you exposure to the growth of the proving economy, staking returns, and governance rights.

Trust & community-centric features: boosting engagement

To build a sustainable marketplace, Boundless has incorporated features that promote transparency, fairness, and community involvement:

Staking & slashing ensure that provers are economically aligned with reliability.

Transparent auctions where bidding mechanics are visible and auditable.

Token governance where ZKC holders propose and vote on protocol parameters (job fee floors, staking requirements, slashing rules).

Development tools & accessibility (SDKs, CLI, templates) enabling developers to onboard quickly and participate in proof-requests without deep ZK expertise.

Community provers: The barrier to entry is low enough that not just big players but smaller hardware operators can join — encouraging decentralization and broad engagement.

The future: where does this go, and what should participants watch?

What to look out for

Prover decentralization: If one or a few large operators dominate bidding, the decentralization advantage may fade. Ensuring many providers participate is key.

Job diversity & depth: As more varied tasks (ML inference, state validation, cross-chain proofs) flow through the marketplace, the system will stress-test its mechanics.

Tokenomics & inflation: Understanding ZKC’s emission schedule, staking return dynamics, and market demand is crucial.

Integration adoption: The number of dApps, rollups, and chains using Boundless will drive real demand for proving resources.

Economic fairness: Auction design, stake requirements, and slashing parameters all need to remain transparent and fair to retain community trust.
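
The prover decentralization concern in the list above can be tracked with standard concentration measures. The sketch below computes two of them from a count of jobs won per prover: a Nakamoto-style coefficient and the Herfindahl-Hirschman index. Applying these to a proving market is a suggestion of this example rather than something Boundless is stated to publish, and the input format is an assumption.

```python
from typing import Mapping


def nakamoto_coefficient(jobs_won: Mapping[str, int], threshold: float = 0.5) -> int:
    """Smallest number of provers whose combined share of jobs exceeds `threshold`."""
    total = sum(jobs_won.values())
    if total <= 0:
        raise ValueError("no jobs recorded")
    running = 0
    for count, won in enumerate(sorted(jobs_won.values(), reverse=True), start=1):
        running += won
        if running / total > threshold:
            return count
    return len(jobs_won)


def hhi(jobs_won: Mapping[str, int]) -> float:
    """Herfindahl-Hirschman index on shares in [0, 1].

    1.0 means a single prover wins every job; lower values mean less concentration.
    """
    total = sum(jobs_won.values())
    if total <= 0:
        raise ValueError("no jobs recorded")
    return sum((won / total) ** 2 for won in jobs_won.values())
```

A coefficient of 1 means a single operator already wins more than half of all jobs, which is the situation the first watch item warns about.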

Why this might become foundational

Imagine a world where any application — on Ethereum, Solana, Cosmos, or a bespoke rollup — can summon verifiable compute from a global market of provers, get a proof back, and execute with minimal on-chain footprint. That’s what Boundless aims to enable. If that becomes mainstream, the notion of “chains with built-in compute limits” may fade — instead, you have “chains plus a shared proving economy”.

Final thoughts: Turning compute into capital

At its heart, the Boundless model reframes a key asset of blockchain systems, computing cycles, into something tradable, measurable, and economically incentivized. The marketplace isn’t just about speeding things up; it’s about aligning the incentives of developers, hardware providers, and tokenholders. It’s about making compute fungible: bid for, allocated, staked for, and rewarded, the same way liquidity is in DeFi.

For users and community members, this means:

If you run hardware: you have a new revenue stream via proof generation.

If you are a developer: you gain access to scalable compute without building your own fleet.

If you hold ZKC: you can engage in staking, governance, and share in the growth of this proving economy.

So here’s a question for you:

If compute becomes a tradable market and proof jobs become as common as transactions, how might this reshape the strategies of blockchain projects? Would you, as a developer, outsource heavy logic to a proving marketplace, or would you still build in-house?